In today’s data-driven world, businesses generate more data than ever before. But raw data alone doesn’t deliver insights—it needs to be processed, cleaned, and transformed before it becomes useful. This is where data pipelines come in.

A data pipeline automates the movement and transformation of data from various sources to destinations like data warehouses, data lakes, or machine learning models. Whether you’re building a data analysis pipeline, a real-time streaming pipeline, or an AI-powered data system, efficiency is key.

In this guide, we’ll walk you through how to build a data pipeline step by step, explain the architecture, and share best practices, tools, and real-world examples. By the end, you’ll understand what makes pipelines not just work—but scale, adapt, and thrive in today’s evolving data ecosystems.

What Is a Data Pipeline?

A data pipeline is a series of steps that move data from one or more sources to a destination, such as a database, data warehouse, or analytics dashboard. These steps include data ingestion, processing, transformation, storage, and monitoring.

Think of it like a water pipeline: raw water (data) flows through filters (transformation), gets cleaned (validation), and is stored in a tank (data warehouse) for use.

Data pipelines are the backbone of everything from data analysis pipelines to AI data pipelines, helping businesses make faster, data-backed decisions. They are used in marketing analytics, customer personalization, fraud detection, supply chain optimization, and more.
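To make the flow above concrete, here is a minimal sketch of the ingest, validate, transform, and store stages as plain Python functions. The sample records, field names, and the in-memory list standing in for a warehouse are all assumptions made for illustration, not part of any particular tool.

```python
from datetime import datetime, timezone

def ingest():
    """Stand-in for a real source such as an API or database (hypothetical data)."""
    return [
        {"user_id": "1", "amount": "19.99", "ts": "2024-01-05T10:00:00+00:00"},
        {"user_id": "2", "amount": "not-a-number", "ts": "2024-01-05T10:01:00+00:00"},
        {"user_id": "3", "amount": "5.00", "ts": "2024-01-05T10:02:00+00:00"},
    ]

def validate(records):
    """Keep only records whose amount parses as a number."""
    valid = []
    for record in records:
        try:
            float(record["amount"])
        except ValueError:
            continue
        valid.append(record)
    return valid

def transform(records):
    """Cast string fields to proper types."""
    return [
        {
            "user_id": int(r["user_id"]),
            "amount": float(r["amount"]),
            "ts": datetime.fromisoformat(r["ts"]).astimezone(timezone.utc),
        }
        for r in records
    ]

def store(records, warehouse):
    """Append to an in-memory list that stands in for a warehouse table."""
    warehouse.extend(records)
    return len(records)

warehouse = []
loaded = store(transform(validate(ingest())), warehouse)
print(f"loaded {loaded} rows")
```

Each stage takes the previous stage's output, which is what lets real pipelines swap one stage (say, the storage target) without touching the others.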

Why Do Efficient Data Pipelines Matter?

Efficiency in pipelines isn’t just about speed—it’s about resilience, scalability, and quality. Poorly built pipelines can result in:

- Data delays and inconsistent analytics

- Increased cloud bills due to resource waste

- Errors that disrupt critical decision-making

On the other hand, efficient pipelines empower:

- Real-time dashboards and alerts

- Faster ML model iterations

- Streamlined compliance and reporting

With the right architecture, you reduce manual intervention and increase trust in data across departments.

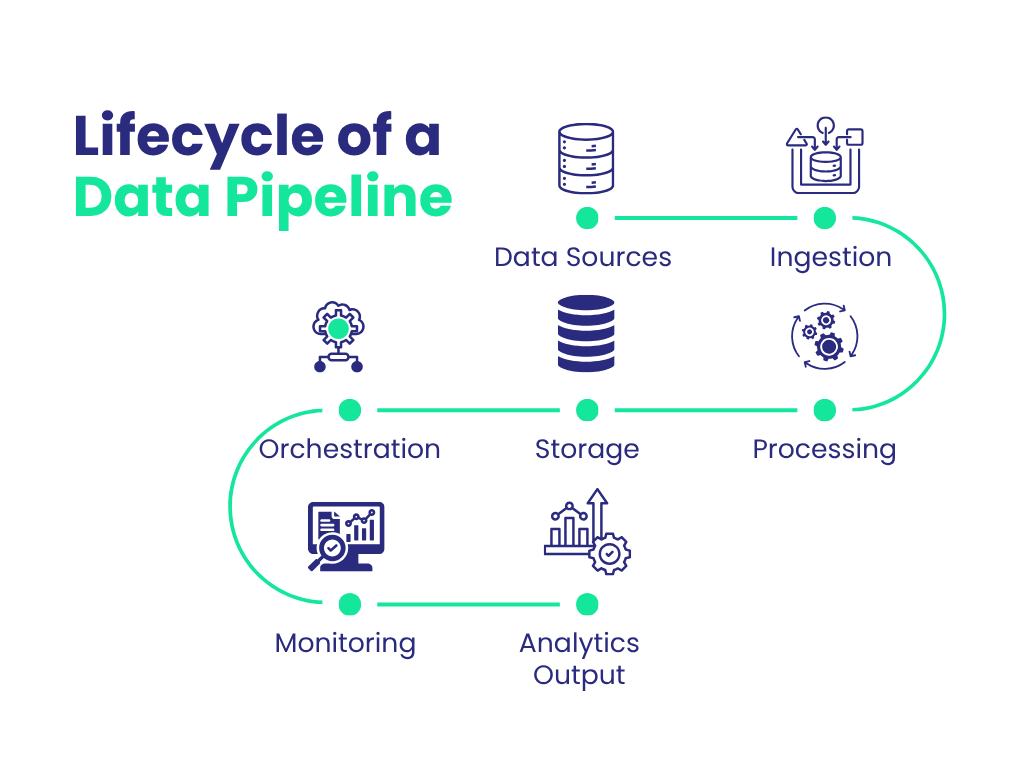

Understanding Data Pipeline Architecture

A typical data pipeline architecture includes:

- Data Sources: APIs, databases, IoT sensors, flat files

- Ingestion Layer: Tools like Kafka, AWS Glue, Azure Data Factory

- Processing Layer: ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform)

- Storage Layer: Data warehouses (Snowflake, BigQuery), lakes (S3, ADLS)

- Orchestration Layer: Apache Airflow, Dagster, Prefect

- Monitoring Layer: Logging, metrics, alerting systems

This modular architecture ensures pipelines are scalable, observable, and fault-tolerant, making them essential for both batch jobs and real-time AI pipelines.

How to Build a Data Pipeline (Step-by-Step)

Step 1: Define Your Use Case & Data Sources

Begin with the problem you’re solving. Is it churn prediction, dashboarding, or product recommendations? Choose relevant data sources like operational databases, marketing platforms, APIs, and file systems.

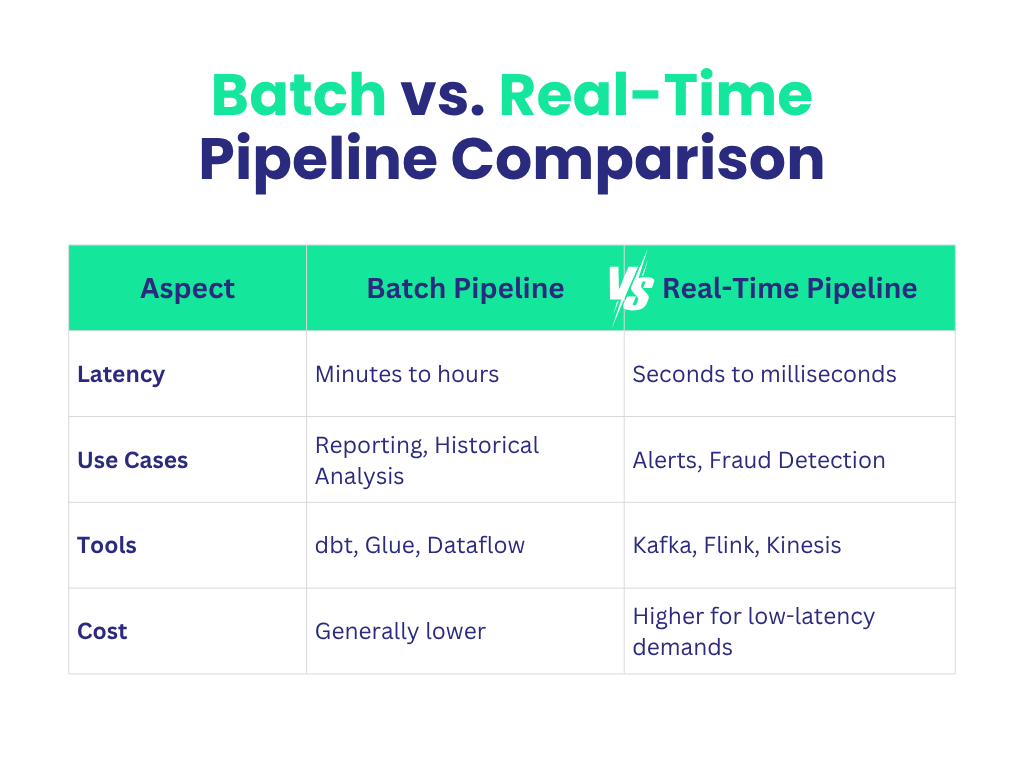

Step 2: Design the Pipeline Architecture

Choose between an on-premises, cloud-native, or hybrid setup. Define frequency, latency tolerance, and SLAs. Select a processing model: batch, micro-batch, or real-time.

Step 3: Set Up Data Ingestion

Tools:

- Batch: Azure Data Factory, AWS Glue, Google Cloud Dataflow

- Streaming: Apache Kafka, Google Pub/Sub, Amazon Kinesis

Confirm that the ingestion tools you choose support fault tolerance, automatic retries, and data partitioning.
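Managed ingestion tools usually handle retries for you, but the idea is simple enough to sketch by hand. The example below retries a flaky source with exponential backoff. `TransientError`, `fetch_with_retries`, and the simulated source are hypothetical names invented for this illustration.

```python
import time

class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, throttling)."""

def fetch_with_retries(fetch, max_attempts=4, base_delay=0.01, sleep=time.sleep):
    """Call fetch() until it succeeds or max_attempts is reached.

    Waits base_delay * 2**(attempt - 1) seconds between attempts.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))

# Simulated flaky source: fails twice, then returns a batch.
calls = {"count": 0}

def flaky_source():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary network failure")
    return [{"id": 1}, {"id": 2}]

delays = []  # record the waits instead of actually sleeping
batch = fetch_with_retries(flaky_source, sleep=delays.append)
print(f"got {len(batch)} records after {calls['count']} attempts")
```

Passing `sleep` as a parameter keeps the function easy to test. In production you would leave the default and likely add jitter so that many workers do not retry in lockstep.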

Step 4: Data Processing (ETL/ELT)

Transform raw data into structured formats:

- Deduplication, timestamp formatting

- Standardization and enrichment

- Feature engineering for machine learning

Tools: Apache Spark, dbt, Pandas, Beam
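As a rough illustration of the transformations listed above, the sketch below deduplicates events, normalizes timestamps to ISO 8601, standardizes email addresses, and adds one enrichment field. It uses only the standard library. The event shape is hypothetical, and it assumes source timestamps are already in UTC.

```python
from datetime import datetime, timezone

raw_events = [
    {"event_id": "a1", "email": " Ana@Example.com ", "ts": "2024-03-01 09:30:00"},
    {"event_id": "a1", "email": " Ana@Example.com ", "ts": "2024-03-01 09:30:00"},  # duplicate
    {"event_id": "b2", "email": "BOB@example.com", "ts": "2024-03-01 10:15:00"},
]

def clean(events):
    """Deduplicate by event_id, then standardize and enrich each event."""
    seen = set()
    cleaned = []
    for event in events:
        if event["event_id"] in seen:
            continue  # deduplication: keep the first occurrence only
        seen.add(event["event_id"])

        # Timestamp formatting: parse the source format, attach UTC, emit ISO 8601.
        parsed = datetime.strptime(event["ts"], "%Y-%m-%d %H:%M:%S")
        parsed = parsed.replace(tzinfo=timezone.utc)

        # Standardization: trim whitespace and lower-case the email.
        email = event["email"].strip().lower()

        cleaned.append({
            "event_id": event["event_id"],
            "email": email,
            "ts": parsed.isoformat(),
            "email_domain": email.split("@")[1],  # enrichment: derived field
        })
    return cleaned

cleaned_events = clean(raw_events)
```

The same steps map directly onto DataFrame operations in Pandas or Spark once data volumes outgrow plain Python.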

Step 5: Choose Your Storage Layer

- Data Lakes: Cost-effective, raw storage (S3, ADLS, GCS)

- Data Warehouses: Query-optimized (Snowflake, Redshift, BigQuery)

- Lakehouses: Combine the best of both (e.g., Delta Lake on Databricks)

Step 6: Orchestrate the Pipeline

Use workflow engines to define task dependencies and handle retries:

- Apache Airflow for DAG-based pipelines

- Prefect for Pythonic workflows

- Dagster for type-safe, testable pipelines
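To show what these engines do at their core, here is a small sketch that resolves task dependencies into a valid run order and retries failed tasks. It uses `graphlib.TopologicalSorter` from the Python standard library (3.9+) rather than the API of Airflow, Prefect, or Dagster. The task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each key depends on the tasks in its value set (hypothetical task names).
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_tables": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_tables"},
}

def run_pipeline(dependencies, tasks, max_attempts=2):
    """Run tasks in dependency order, retrying each failed task up to max_attempts."""
    completed = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_attempts + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_attempts:
                    raise  # stop the run; downstream tasks never start
        completed.append(name)
    return completed

# Placeholder callables; a real pipeline would call extract/transform/load code here.
tasks = {name: (lambda: None) for name in dependencies}
order = run_pipeline(dependencies, tasks)
print(order)
```

A real orchestrator adds scheduling, parallel execution of independent tasks, and persistent run history on top of this ordering logic.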

Step 7: Monitor & Manage the Pipeline

Observability is crucial:

- Monitoring: Tools like Prometheus, Grafana

- Data Validation: Great Expectations, Soda SQL

- Alerting: PagerDuty, Slack integrations
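The validation and alerting pieces can be sketched without any specific tool. The example below checks a batch for minimum row count and per-column null rate, then forwards failures to an alert function. `check_batch` and `alert` are hypothetical helpers, the thresholds are arbitrary, and `send` merely stands in for a Slack or PagerDuty client.

```python
def check_batch(rows, required_columns, max_null_rate=0.1, min_rows=1):
    """Return a list of human-readable failures; an empty list means the batch passed."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"row count {len(rows)} below minimum {min_rows}")
        return failures
    for column in required_columns:
        nulls = sum(1 for row in rows if row.get(column) is None)
        null_rate = nulls / len(rows)
        if null_rate > max_null_rate:
            failures.append(
                f"{column}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}"
            )
    return failures

def alert(failures, send=print):
    """Forward each failure to an alert channel (print is a placeholder)."""
    for failure in failures:
        send(f"[pipeline-alert] {failure}")

batch = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": 12.5},
    {"order_id": 4, "amount": None},
]
failures = check_batch(batch, required_columns=["order_id", "amount"])
alert(failures)
```

Dedicated validation tools offer much richer checks, but the pattern is the same: evaluate expectations per batch and route failures to a channel someone watches.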

Step 8: Scale and Optimize

- Use container orchestration (Kubernetes)

- Employ serverless pipelines when applicable

- Parallelize stages and cache repeated operations
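The last point, parallelizing and caching, can be illustrated on a single machine. In this sketch, partitions are enriched concurrently with a thread pool while a repeated lookup is cached. The lookup table and partition data are hypothetical, and threads are chosen on the assumption that the work is I/O-bound. CPU-bound stages would typically use processes or a distributed engine instead.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

@lru_cache(maxsize=None)
def lookup_region(country_code):
    """Hypothetical expensive lookup (e.g. a reference-data call), cached per input."""
    regions = {"DE": "EMEA", "FR": "EMEA", "US": "AMER", "JP": "APAC"}
    return regions.get(country_code, "UNKNOWN")

def process_partition(partition):
    """Enrich one partition independently of the others."""
    return [{**row, "region": lookup_region(row["country"])} for row in partition]

partitions = [
    [{"id": 1, "country": "DE"}, {"id": 2, "country": "US"}],
    [{"id": 3, "country": "DE"}, {"id": 4, "country": "JP"}],
    [{"id": 5, "country": "US"}, {"id": 6, "country": "BR"}],
]

# pool.map preserves input order, so results line up with partitions.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(process_partition, partitions))
```

Because each partition is processed without shared mutable state, the same function could be handed to a larger executor or a cluster framework with little change.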

Data Pipeline Examples

Well-designed data pipelines can unlock speed, precision, and intelligence across industries. Below are real-world scenarios where pipelines power mission-critical outcomes:

1. Retail – Customer 360 & Personalization

A global e-commerce platform aggregates transactional, behavioral, and marketing data to build a unified view of the customer. Real-time pipelines ingest site activity using Kafka, enrich it via Spark, and update a customer profile store used for personalized recommendations and offers.

2. Finance – Real-Time Fraud Detection

A bank uses a streaming pipeline to analyze transactions in real time. Apache Flink detects anomalies based on ML scoring logic, alerting fraud teams instantly. The pipeline handles massive throughput with low latency using partitioned processing and in-memory features.

3. Healthcare – Clinical Data Integration

A hospital network ingests EHR data, medical imaging, and lab results into a centralized lakehouse architecture. Using dbt and Airflow, data is cleaned and modeled for downstream analytics dashboards, while ensuring HIPAA-compliant encryption and access control.

4. Manufacturing – Predictive Maintenance

Factories stream IoT sensor data from machinery into cloud data lakes. Azure Stream Analytics aggregates metrics like vibration and temperature. Predictive ML models flag equipment likely to fail, reducing unplanned downtime.

5. Media – Content Recommendation Systems

A streaming service uses batch pipelines to process viewing logs, user ratings, and device data. These are transformed nightly into training datasets for collaborative filtering models, driving next-day personalized content recommendations.

These examples highlight how pipelines evolve from simple ETL tasks into intelligent, event-driven architectures that power modern business needs.

Data Governance and Security in Pipelines

Data governance and security are essential for trustworthy data systems, especially when dealing with real-time and distributed data pipelines. Without a solid framework in place, businesses risk data leaks, quality issues, and non-compliance.

1. Data Lineage:

Track the flow and transformation of data using tools like OpenLineage or DataHub. This visibility supports troubleshooting and regulatory audits.

2. Access Control:

Implement role-based (RBAC) or attribute-based (ABAC) access control using tools such as Apache Ranger or AWS Lake Formation to restrict access based on user roles or attributes.

3. Encryption:

Encrypt data in transit with TLS and at rest with keys held in a cloud-native key management system. Rotate keys regularly.

4. Quality Enforcement:

Use validation tools like Great Expectations to catch data quality issues early and enforce schema consistency.

5. Compliance:

Ensure adherence to regulations like GDPR and HIPAA by automating masking, retention, and consent checks.

6. Logging and Alerts:

Log data access and changes. Set alerts for suspicious activities using tools like Datadog or ELK stack.

Strong governance reduces risk and ensures your data remains secure, accurate, and compliant from source to insight.
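Two of the controls above, masking and role-based access, can be sketched in a few lines. The example pseudonymizes an email with a keyed hash, masks it for display, and filters columns by role. The key, the role policy, and all function names are hypothetical. In practice the key would come from a secrets manager, and enforcement would usually sit in the platform rather than in application code.

```python
import hashlib
import hmac

# Assumption: a placeholder key; a real one is loaded from a secrets manager.
PSEUDONYM_KEY = b"replace-with-managed-secret"

# Hypothetical role-to-column policy for RBAC.
ROLE_COLUMNS = {
    "analyst": {"user_key", "country", "amount"},
    "support": {"user_key", "email_masked", "country"},
}

def pseudonymize(value):
    """Keyed hash: the same input always maps to the same token."""
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def mask_email(email):
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

def protect(record):
    """Replace the raw email with a pseudonymous key and a masked form."""
    return {
        "user_key": pseudonymize(record["email"]),
        "email_masked": mask_email(record["email"]),
        "country": record["country"],
        "amount": record["amount"],
    }

def view_for(role, record):
    """Return only the columns the role is allowed to see."""
    allowed = ROLE_COLUMNS.get(role, set())
    return {k: v for k, v in record.items() if k in allowed}

protected = protect({"email": "ana@example.com", "country": "DE", "amount": 42.0})
analyst_view = view_for("analyst", protected)
```

Unknown roles get an empty view, which is the safer default: access is granted explicitly rather than assumed.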

Integrating Machine Learning in Data Pipelines

Machine learning workflows rely on data pipelines to automate the movement of raw data into actionable insights — but simply piping in data isn’t enough. Integrating ML requires a pipeline that not only prepares and delivers data but also aligns with model training, evaluation, and serving workflows.

1. Feature Engineering Pipelines:

Before training any model, you must ensure feature consistency. Real-time systems often suffer from “training-serving skew” where features during training differ from those at inference time. Feature stores like Feast address this by serving the same feature definitions to both stages.

2. Model Training Pipelines:

A scalable ML pipeline must handle data preprocessing, model versioning, hyperparameter tuning, and validation. Using tools like Kubeflow Pipelines, MLflow, or TFX, teams can standardize model development while tracking experiments and reproducibility.

3. Inference Pipelines:

Once deployed, models need to be served through batch jobs or real-time APIs. Pipelines must ensure predictions are fast, secure, and auditable. Tools like BentoML or KServe allow autoscaling and rollback options to avoid disruption.

4. Monitoring ML Pipelines:

Pipelines must detect issues like model drift, data skew, or feature null rates. This is where tools like WhyLabs, Evidently AI, or Fiddler help maintain trust by continuously auditing model performance.
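The training-serving skew described under feature engineering usually comes from implementing feature logic twice. One way to picture the fix is a single feature function that both the offline and online paths call. This is not the Feast API. It is a plain-Python sketch with hypothetical field and function names.

```python
from datetime import date

def build_features(customer, as_of):
    """Single source of truth for feature logic, used by training and serving alike."""
    orders = customer["order_totals"]
    return {
        "days_since_signup": (as_of - customer["signup_date"]).days,
        "order_count": len(orders),
        "avg_order_value": sum(orders) / len(orders) if orders else 0.0,
    }

def training_rows(customers, as_of):
    """Offline path: build a feature table from historical records."""
    return [build_features(c, as_of) for c in customers]

def serve_features(customer, as_of):
    """Online path: build features for one request with the same function."""
    return build_features(customer, as_of)

customer = {"signup_date": date(2024, 1, 1), "order_totals": [20.0, 40.0]}
offline = training_rows([customer], as_of=date(2024, 1, 31))[0]
online = serve_features(customer, as_of=date(2024, 1, 31))
print(offline == online)
```

Passing `as_of` explicitly also matters: computing features relative to "now" during training is a common source of leakage and skew.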

Cost Optimization Strategies

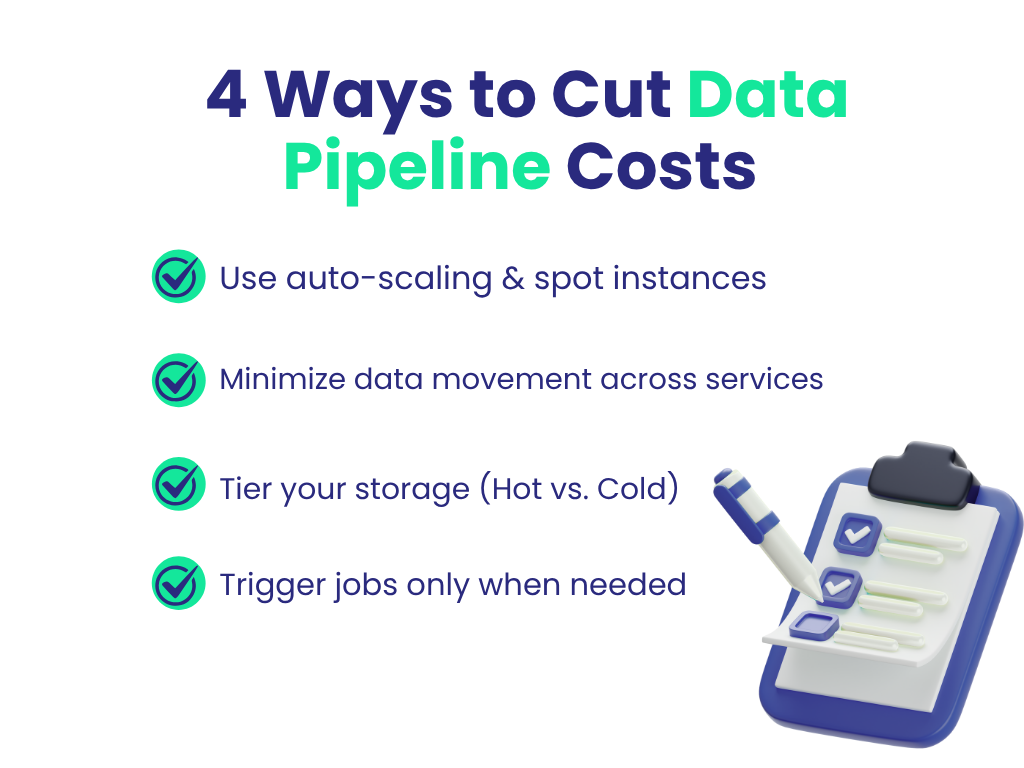

Cost-efficiency isn’t about cutting corners — it’s about building smart. Without a cost strategy, cloud-native pipelines can incur ballooning compute, storage, and transfer charges.

1. Optimize Compute Resources:

Use auto-scaling clusters, leverage preemptible/spot instances, and schedule jobs during off-peak hours. Containerization helps optimize memory/CPU allocation.

2. Reduce Redundant Data Movements:

Keep processing close to storage — avoid unnecessary staging layers. Use pushdown queries (like SQL in Snowflake or BigQuery) instead of exporting full datasets.

3. Choose the Right Storage Tier:

Hot, warm, and cold storage tiers exist for a reason. Use archival object storage classes (e.g., S3 Glacier) for rarely accessed data, and columnar formats (Parquet, ORC) to compress files and reduce the data each query scans.

4. Apply Intelligent Scheduling:

Not every job needs to run hourly. Rethink scheduling — sometimes daily or event-driven execution suffices.
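A quick back-of-the-envelope calculation shows why scheduling matters. The sketch below compares a fixed hourly schedule with event-driven runs for one day. The update hours and the per-run cost are invented numbers for illustration only, and costs are kept in whole cents to avoid floating-point noise.

```python
def daily_runs(update_hours, hours_in_day=24):
    """Return (hourly_schedule_runs, event_driven_runs) for one day."""
    hourly = hours_in_day
    event_driven = len({h for h in update_hours if 0 <= h < hours_in_day})
    return hourly, event_driven

# Hypothetical inputs: the source publishes new data three times a day,
# and each run costs 40 cents of compute.
COST_PER_RUN_CENTS = 40

hourly, event_driven = daily_runs(update_hours=[2, 9, 17])
hourly_cost = hourly * COST_PER_RUN_CENTS
event_cost = event_driven * COST_PER_RUN_CENTS
savings_pct = round(100 * (hourly_cost - event_cost) / hourly_cost, 1)

print(f"hourly: {hourly_cost} cents/day, event-driven: {event_cost} cents/day")
print(f"saved: {savings_pct}%")
```

The exact figures will differ for every workload. The point is that runs which find no new data still incur compute cost.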

Pipeline Automation and CI/CD

CI/CD for data is complex — because you’re not only shipping code but also schemas, data quality rules, and dependencies.

1. Infrastructure as Code (IaC):

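Provision pipeline infrastructure (e.g., the clusters, storage, and schedulers behind Airflow DAGs or dbt models) using Terraform or Pulumi, and keep the pipeline definitions themselves in version control. This ensures reproducibility across environments.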

2. Versioning Data & Models:

Use Data Version Control (DVC) and MLflow to track how data changes affect model performance. Integrate them with CI tools to test for schema drift and model accuracy before deployment.

3. Automated Testing & Validation:

Incorporate unit tests for transformations, contract tests for schemas (using Great Expectations), and integration tests for full pipelines. Fail early.

4. GitOps for Deployment:

Trigger builds and deploy pipelines when code is committed. Use GitHub Actions or GitLab CI to run validation suites and publish to production environments automatically.
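The testing layer described above can start small. Here is a sketch of a unit test for one transformation plus a schema contract check, written with the standard `unittest` module. `normalize_order`, `schema_violations`, and the expected schema are hypothetical. A CI job would normally discover and run such tests with `python -m unittest` instead of invoking the runner inline as done here.

```python
import unittest

# Hypothetical contract: column name -> required Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}

def normalize_order(raw):
    """Transformation under test: cast types and upper-case the currency code."""
    return {
        "order_id": int(raw["order_id"]),
        "amount": round(float(raw["amount"]), 2),
        "currency": raw["currency"].strip().upper(),
    }

def schema_violations(row, schema=EXPECTED_SCHEMA):
    """Return contract violations for one row; an empty list means it conforms."""
    problems = [f"missing column: {c}" for c in schema if c not in row]
    problems += [
        f"{c}: expected {t.__name__}, got {type(row[c]).__name__}"
        for c, t in schema.items()
        if c in row and not isinstance(row[c], t)
    ]
    return problems

class TestNormalizeOrder(unittest.TestCase):
    def test_casts_and_cleans(self):
        row = normalize_order({"order_id": "7", "amount": "19.999", "currency": " usd "})
        self.assertEqual(row, {"order_id": 7, "amount": 20.0, "currency": "USD"})

    def test_output_matches_contract(self):
        row = normalize_order({"order_id": "7", "amount": "5", "currency": "eur"})
        self.assertEqual(schema_violations(row), [])

# Run inline for illustration only.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestNormalizeOrder)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

If either test fails, the CI job fails and the change never reaches production, which is the "fail early" behavior described above.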

Observability and Reliability Engineering

Treat data pipelines like software systems — they must be monitored, versioned, and designed for failure.

1. Define SLOs and SLIs:

Track service level indicators (SLIs) like data freshness, row count anomalies, and error rates, and set a target (SLO) for each. These help stakeholders trust dashboards and alerts.

2. End-to-End Lineage:

Use tools like OpenLineage, Marquez, or DataHub to track how data flows between systems. This is critical for audits and impact analysis.

3. Chaos Engineering for Data:

Test what happens when upstream sources fail or deliver malformed data. Inject dummy failures to ensure your alerting and fallback logic works.

4. Proactive Monitoring:

Set up synthetic jobs to simulate usage (e.g., mock dashboards) and catch pipeline failures before users do.
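As a sketch of how freshness and row count checks might be evaluated, the example below computes both against simple targets. The thresholds (60 minutes of staleness, 50% deviation from the recent average) and all function names are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone

def freshness_minutes(last_loaded_at, now):
    """SLI: minutes since the most recent successful load."""
    return (now - last_loaded_at).total_seconds() / 60

def row_count_is_anomalous(todays_count, recent_counts, tolerance=0.5):
    """Flag counts that deviate from the recent average by more than `tolerance`."""
    baseline = sum(recent_counts) / len(recent_counts)
    return abs(todays_count - baseline) > tolerance * baseline

def evaluate_slo(last_loaded_at, now, todays_count, recent_counts,
                 max_staleness_minutes=60):
    """Compare each indicator with its target and report pass/fail per check."""
    return {
        "fresh": freshness_minutes(last_loaded_at, now) <= max_staleness_minutes,
        "row_count_ok": not row_count_is_anomalous(todays_count, recent_counts),
    }

now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
status = evaluate_slo(
    last_loaded_at=now - timedelta(minutes=95),  # stale: last load was 95 minutes ago
    now=now,
    todays_count=400,                            # well below the recent average of 1000
    recent_counts=[1000, 1100, 900],
)
print(status)
```

Feeding deliberately stale or undersized inputs into a check like this is also a cheap way to confirm that alerting fires before a real incident does.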

Best Practices in Pipeline Development

Building a successful data pipeline is as much about engineering discipline as it is about tool selection. The following best practices ensure pipelines are scalable, maintainable, and aligned with business value:

1. Design for Modularity:

Break down complex workflows into reusable, self-contained components. This simplifies debugging, onboarding, and scaling as your data needs grow.

2. Embrace Idempotency and Checkpointing:

Make transformations idempotent so retries don’t duplicate results. Add checkpoints to enable restarts from failure points rather than starting from scratch.

3. Automate Everything:

Use CI/CD to automate deployment, testing, and validation. Integrate tools like Great Expectations, dbt tests, and schema checks to ensure data quality before promotion.

4. Use Configuration Over Hardcoding:

Store parameters, paths, and credentials in configuration files or secrets managers to simplify portability and environment switching.

5. Implement Robust Observability:

Include logs, metrics, and alerts at every stage of your pipeline. Invest in monitoring early—it saves hours later.

6. Optimize for Change and Evolution:

Data sources evolve. Plan for schema drift, version transformations, and test compatibility when upstream changes occur.

7. Track Lineage and Metadata:

Use lineage tools to trace how data changes across stages. This helps with audits, debugging, and understanding impact.

8. Build for Governance and Access Control:

Ensure each component supports encryption, authentication, and role-based access so that pipelines remain secure and compliant.

These principles help data teams move fast without breaking things, and turn pipelines into reliable business infrastructure.
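Idempotency and checkpointing (practice 2) are easier to see in code than in prose. In this sketch, writes are keyed upserts, so replaying a batch cannot duplicate rows, and a checkpoint records the last completed batch so a restart skips finished work. The dictionaries stand in for a real table and a durable checkpoint store, and the names are hypothetical.

```python
def upsert(table, rows, key="order_id"):
    """Idempotent write: a row replaces any existing row with the same key."""
    for row in rows:
        table[row[key]] = row

def run_with_checkpoint(batches, table, checkpoint):
    """Process batches in order, skipping any already recorded in the checkpoint."""
    for batch_id, rows in batches:
        if batch_id <= checkpoint["last_batch"]:
            continue  # already processed in an earlier run
        upsert(table, rows)
        checkpoint["last_batch"] = batch_id  # in practice, persist this durably

batches = [
    (1, [{"order_id": 10, "amount": 5.0}]),
    (2, [{"order_id": 11, "amount": 7.5}]),
    (3, [{"order_id": 10, "amount": 6.0}]),  # later correction to order 10
]

table, checkpoint = {}, {"last_batch": 0}
run_with_checkpoint(batches, table, checkpoint)
run_with_checkpoint(batches, table, checkpoint)  # retry: nothing is reprocessed
upsert(table, batches[1][1])                     # direct replay: still no duplicates
print(len(table), checkpoint)
```

The two mechanisms complement each other: the checkpoint avoids redundant work, and the idempotent write keeps results correct even when a batch does get replayed.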

Common Mistakes to Avoid

Even experienced data teams encounter pitfalls that can undermine pipeline performance, reliability, and usability. Recognizing these early can help avoid costly rework and downtime:

1. Overengineering Too Early: Many teams build complex pipelines upfront without validating business needs or data stability. Start simple, iterate fast.

2. Neglecting Schema Evolution: Assuming data structures won’t change leads to breakage. Always include schema validation and version control.

3. Poor Error Handling and Retry Logic: Failing to design for transient failures (timeouts, dropped messages) can cause data loss. Pipelines should include retries, idempotency, and alerting.

4. Lack of Documentation and Metadata: Without clear docs, handoffs become difficult and debugging slows down. Tools like DataHub or even internal wikis help preserve knowledge.

5. Skipping Stakeholder Alignment: Building pipelines in isolation can result in delivering the wrong data or breaking downstream use cases. Regularly align with consumers and set clear SLAs.

6. Ignoring Data Quality Early On: Postponing validation until issues arise only increases technical debt. Integrate data quality checks into every stage from day one.

Avoiding these mistakes allows pipelines to mature gracefully, enabling faster experimentation, better collaboration, and more reliable analytics outcomes.
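Schema evolution (mistake 2) is one of the easier pitfalls to guard against in code. The sketch below compares an expected schema with what an incoming batch actually contains and reports added, removed, and retyped columns. The schemas and helper names are hypothetical, and inferring types from the first row is a deliberate simplification.

```python
def infer_schema(rows):
    """Infer column types from the first row (real sources need broader sampling)."""
    return {column: type(value).__name__ for column, value in rows[0].items()}

def detect_schema_drift(expected, observed):
    """Compare column -> type mappings and report what changed."""
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(
            c for c in set(expected) & set(observed) if expected[c] != observed[c]
        ),
    }

expected_schema = {"id": "int", "email": "str", "amount": "float"}

# Upstream started sending amount as a string and added a coupon column.
incoming = [{"id": 1, "email": "a@b.c", "amount": "12.50", "coupon": "SPRING"}]

drift = detect_schema_drift(expected_schema, infer_schema(incoming))
print(drift)
```

A pipeline might tolerate added columns while halting on removed or retyped ones. Where to draw that line depends on what downstream consumers rely on.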

Conclusion

An efficient data pipeline is more than just a connection between systems—it’s a strategic asset that fuels analytics, decision-making, and automation.

From ingestion to orchestration, each layer contributes to resilience and performance. Whether you’re building a data science pipeline, an AI data pipeline, or just modernizing legacy ETL, following a structured, tool-aware approach is key.
