What is Data Drift?

Imagine spending months training a highly accurate machine learning (ML) model. It performs perfectly on all test datasets. You confidently deploy it to production, and for a while, it performs exactly as expected. But slowly, without warning, things begin to slip. The model’s predictions start deviating. Confidence scores drop. User satisfaction takes a hit. And yet, nothing in your system seems broken.

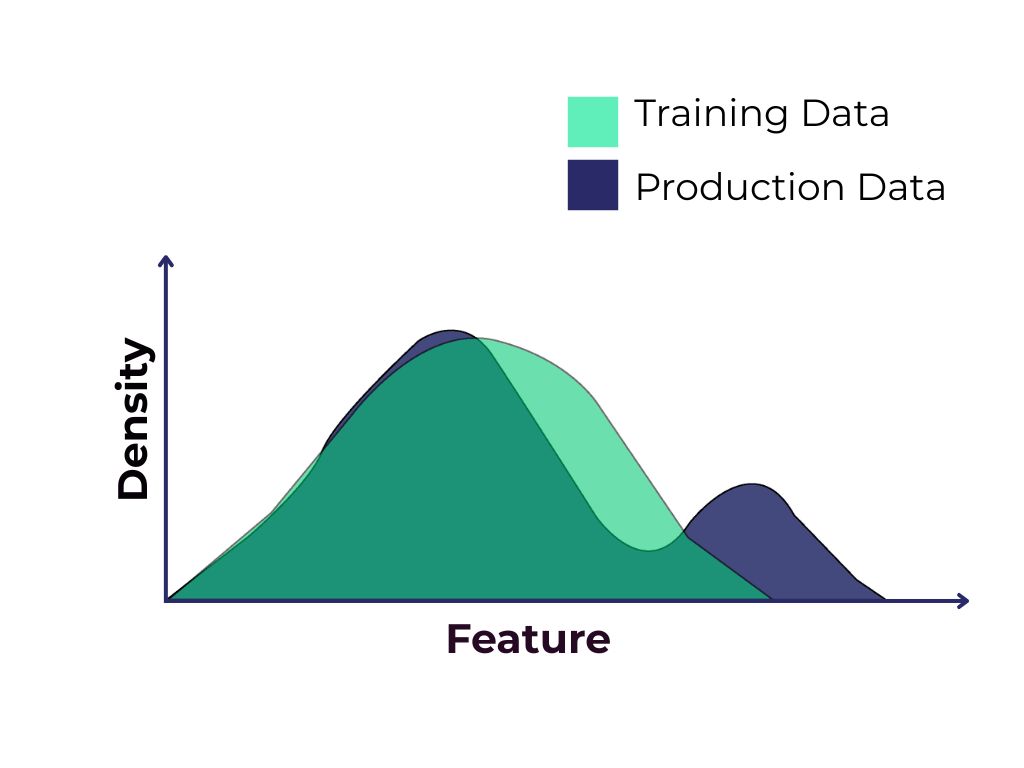

This is a classic case of data drift, a silent, creeping threat to any ML model. Data drift occurs when the statistical properties of input data change over time. The model, which was trained on past patterns, now faces new realities. It continues making decisions based on outdated assumptions, leading to poor predictions.

Unlike traditional software, ML models are only as good as the data they’ve seen. When that data changes—whether subtly or drastically—the model’s accuracy degrades, often unnoticed.

Is It a Silent Threat to ML Models?

Yes, absolutely. Unlike bugs or system failures that cause visible errors or crashes, data drift undermines performance quietly. The system still runs. The predictions are still generated. But they’re increasingly incorrect, irrelevant, or harmful. This subtlety makes it even more dangerous. Without proper monitoring, you may continue to make business decisions based on faulty insights.

Drift Lifecycle: When and How It Shows Up

- Training Phase: The model is trained on historical data, capturing relationships and statistical distributions specific to that time.

- Deployment: The model is pushed into production to make predictions using new, incoming data.

- Evolution of Data: Real-world data starts evolving due to changes in user behavior, external events, or market dynamics.

- Model Misalignment: The model continues using outdated logic, leading to inaccurate outcomes.

- Degraded Performance: Prediction errors increase, but there are no immediate signs unless monitoring is in place.

- Detection or Failure: If monitored, drift can be caught and corrected. If not, trust is lost and business operations are negatively impacted.

ML is not a “train once, deploy forever” system—it needs ongoing alignment with changing realities.

Data Drift vs. Related Concepts

Understanding how data drift differs from related types of drift is crucial to identifying the correct mitigation approach.

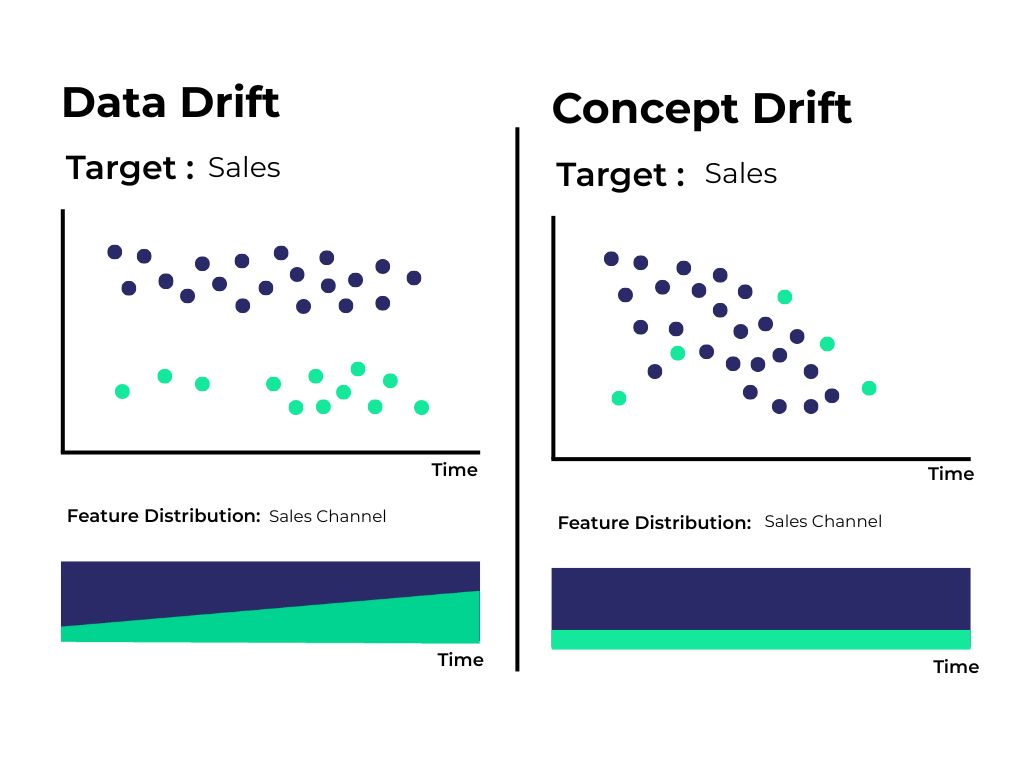

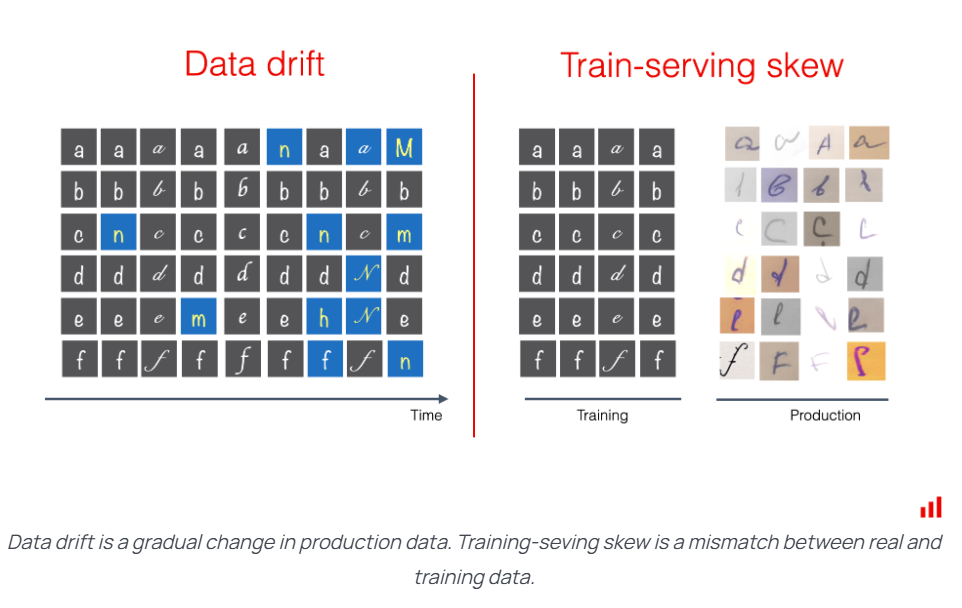

Data Drift vs. Concept Drift

While data drift concerns the distribution of the input features, concept drift concerns how those features relate to the target variable. In data drift, the features change, but the relationship to the output remains intact. For example, if your user base shifts from millennials to Gen Z, your model may start seeing new behavioral patterns even though the target output, such as a product recommendation, remains the same.

Concept drift, on the other hand, refers to a change in the fundamental relationship between inputs and outputs. What used to be a strong predictor is no longer valid. This may occur due to policy changes, shifting market dynamics, or changes in human behavior. Concept drift is harder to detect, often requiring retraining and redefining features altogether.

Example: In a credit scoring model, income might be a strong predictor initially. After new regulations emphasizing credit history, the model now needs to weigh income less and focus more on past defaults. That’s concept drift.
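One common way to write this distinction down, offered here as a sketch in standard probability notation rather than a formal definition: let $X$ be the input features and $Y$ the target. The subscripts "train" and "prod" are labels introduced for this illustration, marking the data the model was trained on and the data it sees in production.

$$
\begin{gathered}
P(X, Y) = P(Y \mid X)\,P(X) \\
\text{Data drift:}\quad P_{\text{train}}(X) \neq P_{\text{prod}}(X), \qquad P_{\text{train}}(Y \mid X) = P_{\text{prod}}(Y \mid X) \\
\text{Concept drift:}\quad P_{\text{train}}(Y \mid X) \neq P_{\text{prod}}(Y \mid X)
\end{gathered}
$$

In the credit scoring example, the regulation changes how income relates to the outcome, which is a change in $P(Y \mid X)$, even if the incomes of applicants, $P(X)$, stay exactly the same.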

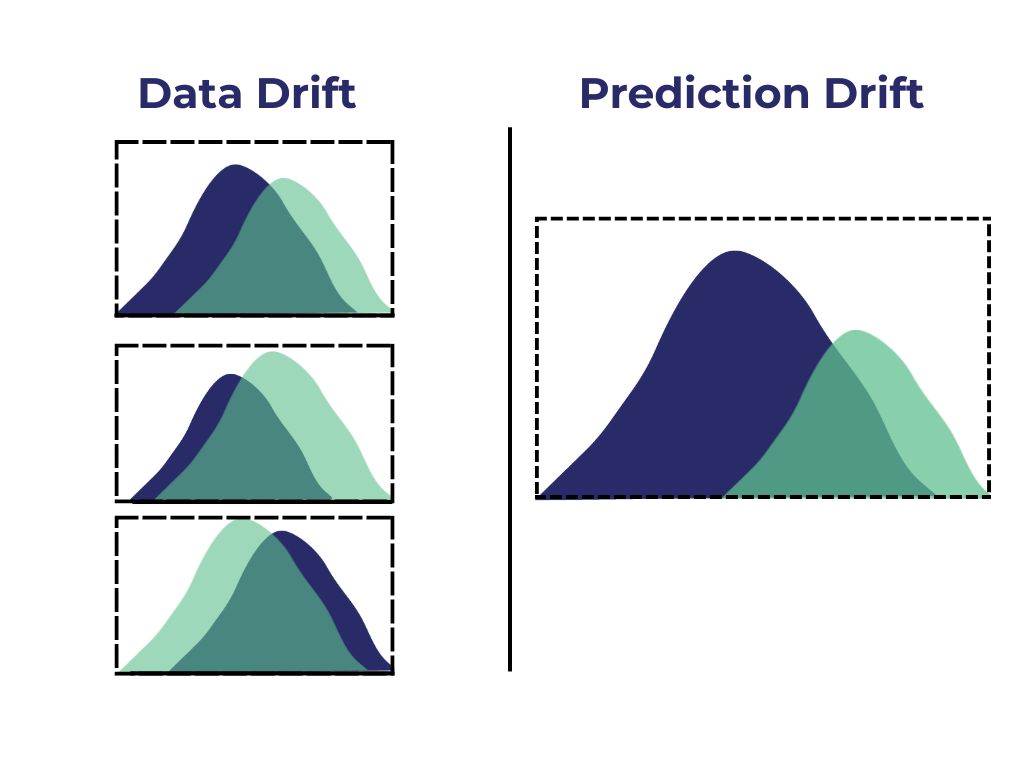

Data Drift vs. Prediction Drift

Prediction drift involves a shift in the model’s outputs. This could result from either data or concept drift. If the prediction probabilities for certain classes begin to shift dramatically without changes to the model or training data, it’s a sign that something has changed in the inputs or in the relationship between inputs and outputs.

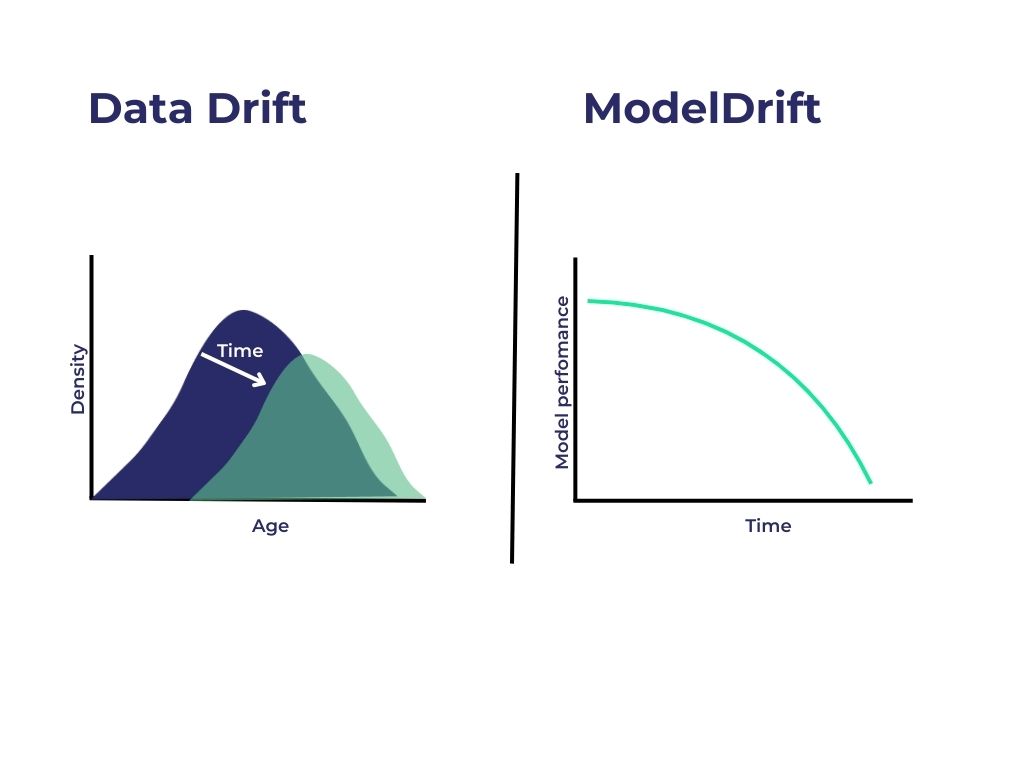

Data Drift vs. Model Drift

Model drift encompasses both data and concept drift but also includes other issues like stale models, poor retraining strategies, or feature leakage. It refers to the overall decline in a model’s performance over time, no matter the cause.

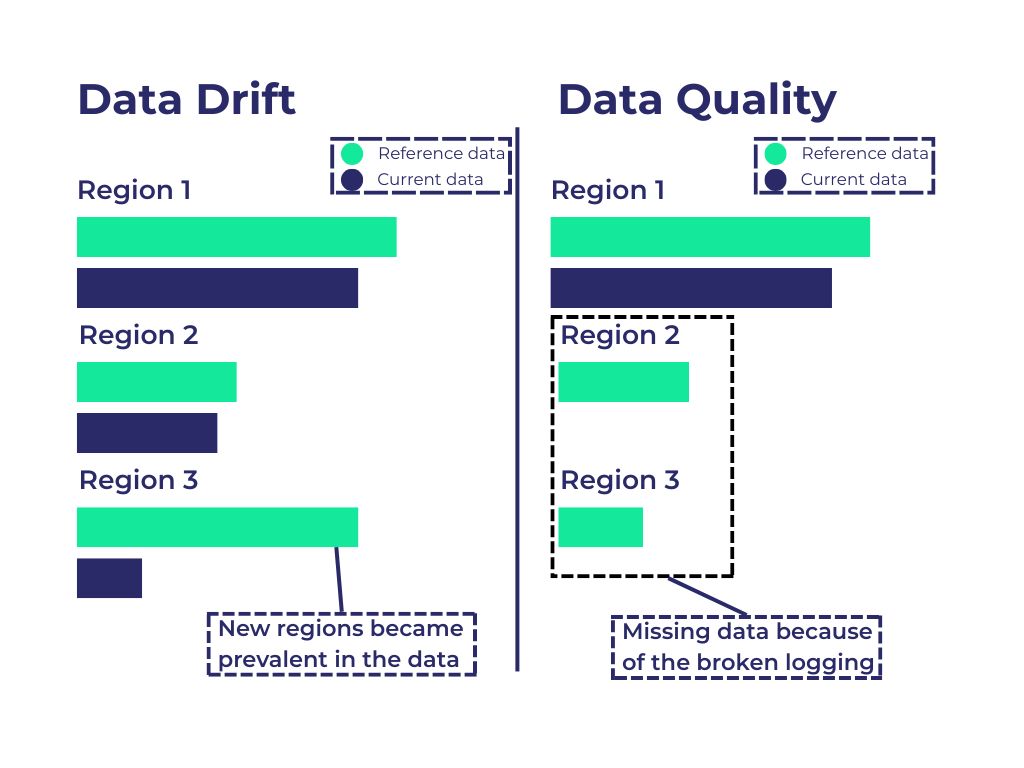

Data Drift vs. Data Quality Issues

Data quality problems like null values, incorrect formats, or duplicate records degrade model performance, but they are usually easier to spot and fix because they show up as errors in the data itself. Data drift, however, arises from legitimate, clean data that has simply evolved. It is more subtle and harder to catch.

Data Drift vs. Training-Serving Skew

Training-serving skew happens when the data preprocessing or feature engineering during training differs from what is used during inference. While this is not true drift, it creates a similar effect by introducing discrepancies between training and production environments.

Image by Evidently AI

How It Affects Machine Learning Models

A model suffering from undetected data drift is like a map that no longer matches the terrain. You’re navigating with outdated information, which can be catastrophic in data-driven environments.

Key Impacts:

- Loss of Accuracy: Misclassifications and poor predictions become common. Business metrics that rely on AI begin to slip.

- Wasted Budgets: Marketing campaigns may target the wrong audience. Loan approvals may go to high-risk individuals. Resources are misallocated.

- Regulatory Trouble: In sectors like finance and healthcare, regulatory compliance requires models to make fair and explainable decisions. Data drift can cause these models to violate standards unknowingly.

- Loss of Trust: End-users, clients, and internal stakeholders lose faith in AI recommendations.

Long-Term Effects:

- Decreased model lifespan

- Higher maintenance costs

- Increased technical debt

- Decision paralysis or decision fatigue due to inconsistent results

The business cost of ignoring data drift tends to compound the longer it goes undetected.

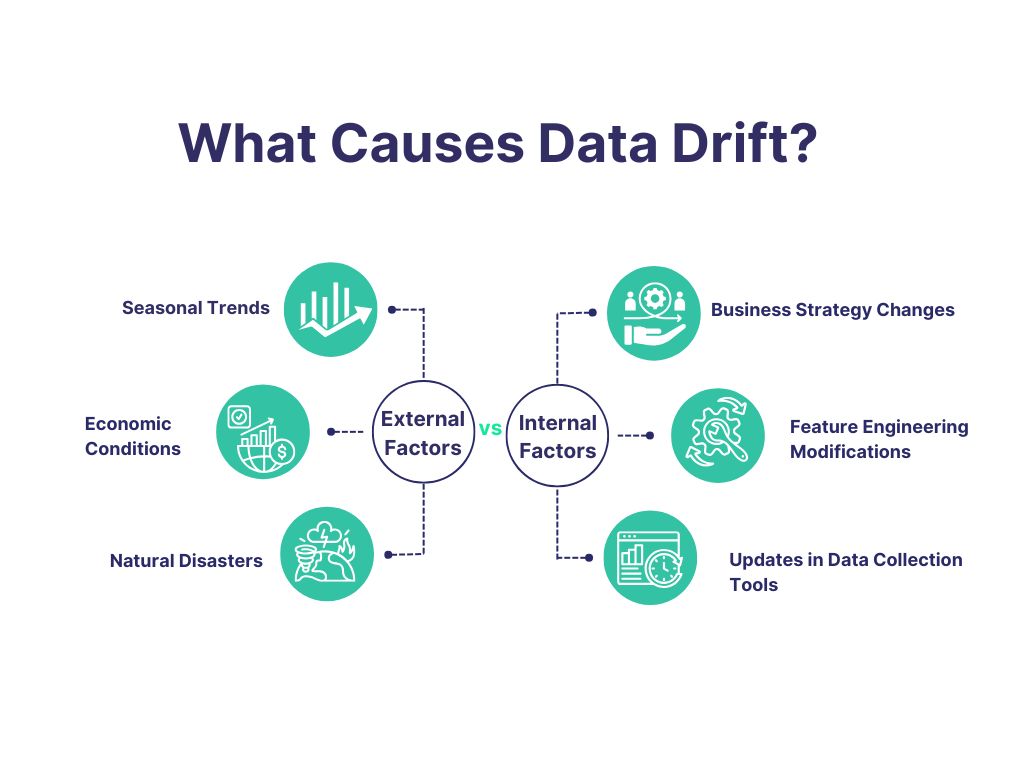

What Causes Data Drift?

Data drift can be triggered by a wide range of internal and external changes:

External Factors

- Seasonal Trends: Holidays, weather, school schedules, etc.

- Economic Conditions: Inflation, interest rate changes, unemployment rates.

- Pandemics or Natural Disasters: Sudden, large-scale disruptions that change behavior.

- Competitor Moves: New product launches, pricing changes.

Internal Factors

- Business Strategy Changes: New product lines or target demographics.

- Feature Engineering Modifications: Changing how a feature is calculated.

- Updates in Data Collection Tools: New APIs, web forms, sensors, etc.

Any of these shifts can introduce patterns that are vastly different from the data the model was trained on.

Real-World Examples

E-commerce Example:

An ML model predicts product demand based on browsing patterns and past purchases. A sudden change in supply chain availability puts certain products out of stock, altering user behavior. This changes the distribution of features like “time spent on product pages” or “cart abandonment,” causing the model to mispredict demand.

Healthcare Example:

A hospital uses a model to triage emergency room patients. A new strain of illness emerges, changing the symptom profile. The model, unaware of this new pattern, assigns incorrect risk scores, leading to potentially dangerous outcomes.

Banking Example:

A credit risk model performs well until a fintech app starts attracting a younger, gig-economy audience. Their spending and earning patterns differ from the traditional salaried users the model was trained on, so the model misjudges their risk and loan defaults increase.

These examples highlight the real-world consequences of data drift—and the need for vigilant monitoring.

How to Detect Data Drift

Detecting data drift is crucial to preventing model decay. Without early warning systems in place, organizations may unknowingly operate with degraded models, leading to faulty decision-making and lost business opportunities.

Why Detection Matters

Even if your model appears to function normally, drift can gradually erode accuracy. This is especially dangerous in high-stakes environments like finance, healthcare, and autonomous systems where prediction errors can have legal or life-threatening implications.

Effective detection allows teams to:

- Catch issues before they snowball

- Maintain consistent model accuracy

- Avoid resource waste

- Improve stakeholder trust in AI systems

Statistical Methods for Drift Detection

There are several statistical tests and methods used to monitor for drift:

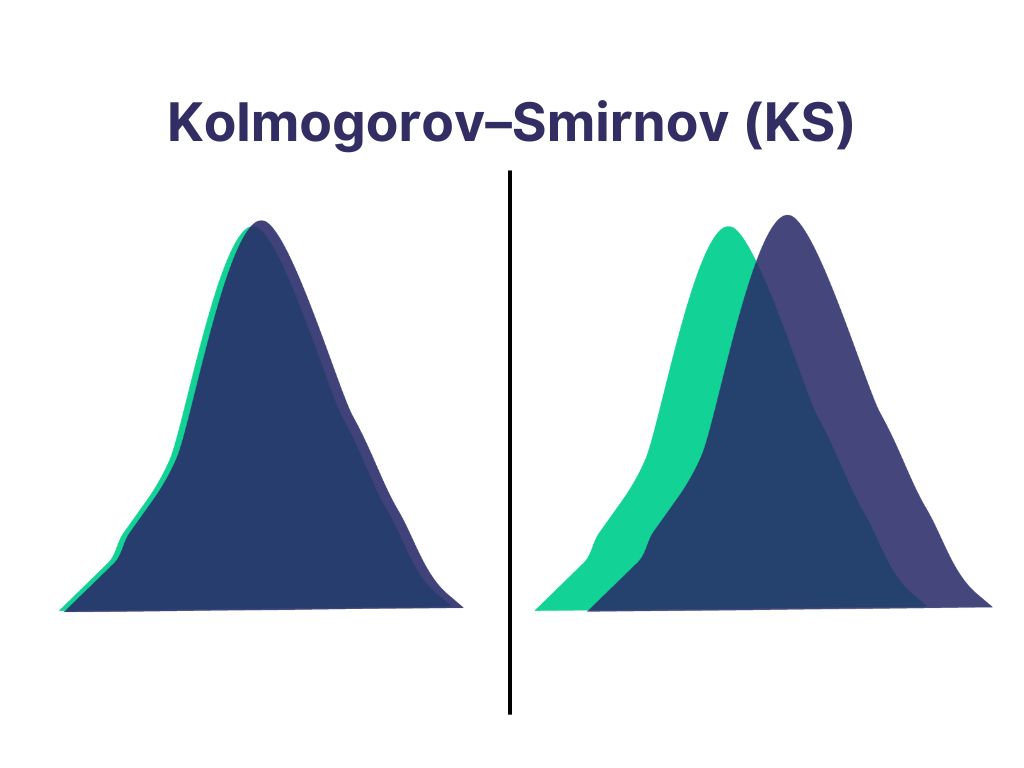

1. Kolmogorov–Smirnov (KS) Test

A non-parametric test that compares the distributions of two datasets. Ideal for detecting changes in numeric features.

- Use Case: Track a continuous feature like “user session time.”

- How It Works: The KS statistic is the largest gap between the empirical cumulative distribution functions of the two samples. If it exceeds a threshold, drift is suspected.
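As a rough illustration of the KS check on a feature like “user session time,” here is a minimal NumPy sketch. The function names, the synthetic data, and the choice of the standard large-sample critical value as the threshold are all assumptions made for this example, not a prescribed setup.

```python
import numpy as np

def ks_statistic(reference, current):
    """Largest gap between the two empirical CDFs (two-sample KS statistic)."""
    reference = np.sort(np.asarray(reference, dtype=float))
    current = np.sort(np.asarray(current, dtype=float))
    # Evaluate both empirical CDFs at every observed value.
    points = np.concatenate([reference, current])
    ref_cdf = np.searchsorted(reference, points, side="right") / reference.size
    cur_cdf = np.searchsorted(current, points, side="right") / current.size
    return float(np.max(np.abs(ref_cdf - cur_cdf)))

def ks_threshold(n_reference, n_current, alpha=0.05):
    """Large-sample critical value for the two-sample KS statistic."""
    c_alpha = np.sqrt(-0.5 * np.log(alpha / 2.0))
    scale = np.sqrt((n_reference + n_current) / (n_reference * n_current))
    return float(c_alpha * scale)

rng = np.random.default_rng(seed=0)
# Synthetic "user session time" in minutes; illustrative data only.
training_sessions = rng.normal(loc=10.0, scale=2.0, size=2000)
production_sessions = rng.normal(loc=11.0, scale=2.0, size=2000)

statistic = ks_statistic(training_sessions, production_sessions)
threshold = ks_threshold(training_sessions.size, production_sessions.size)
drift_suspected = statistic > threshold
print(f"KS statistic={statistic:.3f}, threshold={threshold:.3f}, drift={drift_suspected}")
```

If SciPy is available, `scipy.stats.ks_2samp` computes the same statistic and also returns a p-value, which is often more convenient than a hand-rolled threshold.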

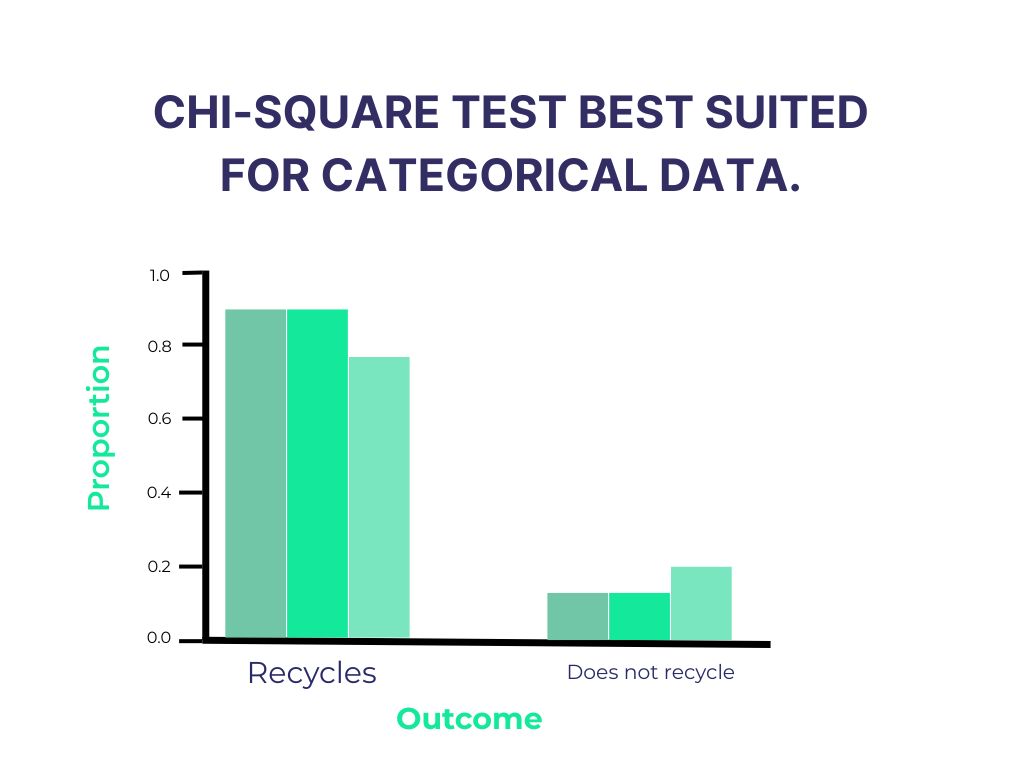

2. Chi-Square Test

Best suited for categorical data.

- Use Case: Monitor shifts in the frequency of customer categories, e.g., “Gold,” “Silver,” “Bronze.”

- Result: Low p-values signal significant drift.
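A sketch of how this could look for the customer tier example, using SciPy's `chi2_contingency` on a table of category counts. The counts, the helper name `chi_square_drift`, and the 0.05 cutoff are illustrative assumptions.

```python
from scipy.stats import chi2_contingency

TIERS = ["Gold", "Silver", "Bronze"]

def chi_square_drift(reference_counts, current_counts, alpha=0.05):
    """Test whether tier frequencies differ between two periods."""
    table = [
        [reference_counts[tier] for tier in TIERS],
        [current_counts[tier] for tier in TIERS],
    ]
    chi2, p_value, dof, _expected = chi2_contingency(table)
    return float(chi2), float(p_value), bool(p_value < alpha)

# Hypothetical customer counts per tier.
reference = {"Gold": 200, "Silver": 500, "Bronze": 300}
stable = {"Gold": 210, "Silver": 490, "Bronze": 300}
shifted = {"Gold": 100, "Silver": 400, "Bronze": 500}

chi2_stable, p_stable, drift_stable = chi_square_drift(reference, stable)
chi2_shifted, p_shifted, drift_shifted = chi_square_drift(reference, shifted)
print(f"stable:  chi2={chi2_stable:.2f}, p={p_stable:.3f}, drift={drift_stable}")
print(f"shifted: chi2={chi2_shifted:.2f}, p={p_shifted:.3g}, drift={drift_shifted}")
```

The test works on raw counts, not percentages, so pass the actual number of records in each category.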

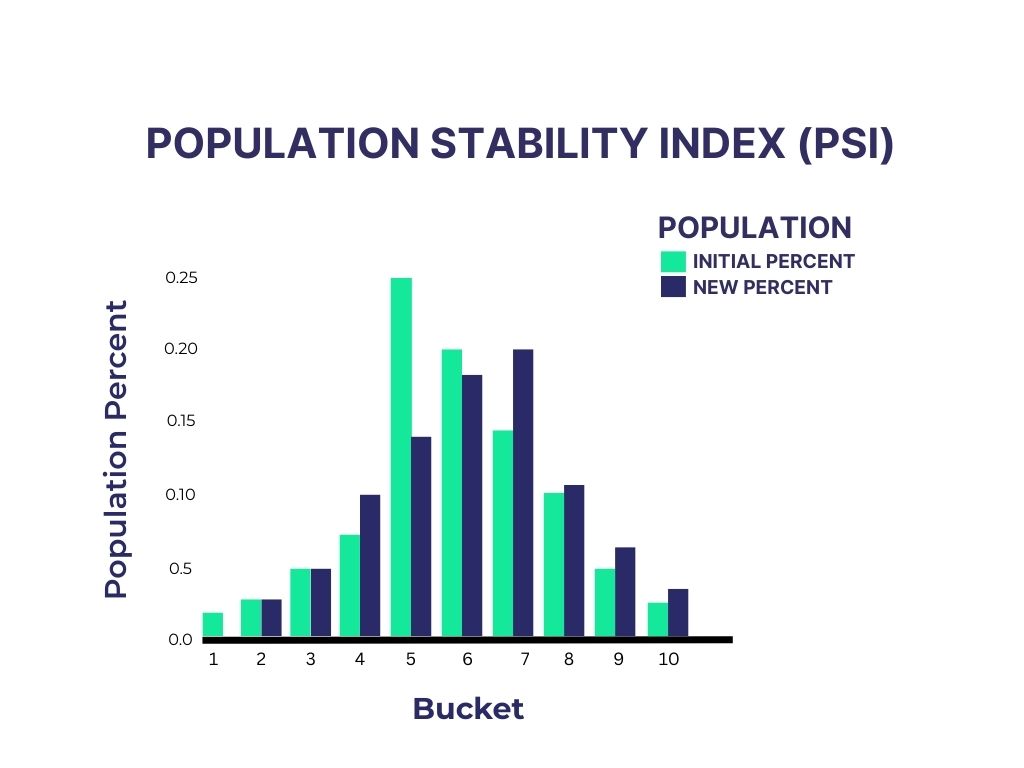

3. Population Stability Index (PSI)

A popular method to measure the stability of a variable over time.

- Use Case: Common in credit risk and banking models.

- Rule of Thumb: PSI > 0.25 suggests strong drift.
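PSI is usually computed by binning the reference data, measuring the share of records in each bin for both datasets, and summing (current share − reference share) × ln(current share / reference share) over the bins. The sketch below assumes ten quantile bins and a small clipping constant for empty bins; both are common choices, not fixed rules, and the function name is made up for this example.

```python
import numpy as np

def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between two samples of one numeric feature."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges come from quantiles of the reference sample.
    quantiles = np.linspace(0.0, 1.0, n_bins + 1)[1:-1]
    inner_edges = np.quantile(reference, quantiles)
    ref_bins = np.searchsorted(inner_edges, reference, side="right")
    cur_bins = np.searchsorted(inner_edges, current, side="right")
    ref_counts = np.bincount(ref_bins, minlength=n_bins)
    cur_counts = np.bincount(cur_bins, minlength=n_bins)
    # Convert counts to shares; clip so empty bins do not produce log(0).
    ref_share = np.clip(ref_counts / reference.size, eps, None)
    cur_share = np.clip(cur_counts / current.size, eps, None)
    return float(np.sum((cur_share - ref_share) * np.log(cur_share / ref_share)))

rng = np.random.default_rng(seed=1)
# Synthetic feature values; illustrative data only.
baseline = rng.normal(loc=0.0, scale=1.0, size=5000)
similar = rng.normal(loc=0.0, scale=1.0, size=5000)
shifted = rng.normal(loc=1.0, scale=1.0, size=5000)

psi_similar = population_stability_index(baseline, similar)
psi_shifted = population_stability_index(baseline, shifted)
print(f"PSI similar={psi_similar:.4f}, PSI shifted={psi_shifted:.4f}")
```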

4. Jensen–Shannon Distance

A symmetric, bounded measure for comparing probability distributions, derived from KL divergence. It is better behaved than raw KL divergence, which is asymmetric and can be infinite.

- Application: Monitoring model outputs and prediction class probabilities.
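To make the idea concrete, here is a small pure-Python sketch of the Jensen–Shannon distance between two sets of class probabilities. It uses base-2 logarithms so the result falls between 0 and 1. The function name and the example probabilities are assumptions for illustration.

```python
import math

def js_distance(p, q):
    """Jensen-Shannon distance (base 2) between two discrete distributions."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of classes")
    # Normalize so raw counts work as well as probabilities.
    p_total, q_total = sum(p), sum(q)
    p = [value / p_total for value in p]
    q = [value / q_total for value in q]
    mixture = [(a + b) / 2 for a, b in zip(p, q)]

    def kl_divergence(a, b):
        # Terms with a == 0 contribute nothing to the sum.
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    divergence = 0.5 * kl_divergence(p, mixture) + 0.5 * kl_divergence(q, mixture)
    return math.sqrt(max(0.0, divergence))

# Hypothetical average predicted class probabilities, last month vs. this week.
baseline_probs = [0.7, 0.2, 0.1]
current_probs = [0.4, 0.3, 0.3]
print(f"JS distance: {js_distance(baseline_probs, current_probs):.3f}")
```

SciPy users can get the same quantity from `scipy.spatial.distance.jensenshannon` with `base=2`.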

5. Earth Mover’s Distance (EMD)

Measures how much distribution mass needs to be moved to make one dataset resemble another. Great for visual drift tracking.
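For a single numeric feature, the Earth Mover’s Distance equals the area between the two empirical cumulative distribution functions. The NumPy sketch below computes it that way; the function name and sample values are illustrative assumptions.

```python
import numpy as np

def earth_movers_distance(reference, current):
    """1-D Earth Mover's Distance between two samples."""
    reference = np.sort(np.asarray(reference, dtype=float))
    current = np.sort(np.asarray(current, dtype=float))
    all_values = np.sort(np.concatenate([reference, current]))
    # Width of each interval between consecutive observed values.
    widths = np.diff(all_values)
    # Empirical CDF of each sample at the left end of every interval.
    ref_cdf = np.searchsorted(reference, all_values[:-1], side="right") / reference.size
    cur_cdf = np.searchsorted(current, all_values[:-1], side="right") / current.size
    return float(np.sum(np.abs(ref_cdf - cur_cdf) * widths))

# Every value moved up by 5 units, so the distance is 5 in the feature's units.
print(earth_movers_distance([0, 1, 3], [5, 6, 8]))
```

Because the result is expressed in the feature's own units, it is easy to explain: a value of 5 here means the data moved by 5 units on average. SciPy provides the same calculation as `scipy.stats.wasserstein_distance`.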

Data Monitoring Approaches

Baseline vs. Current Monitoring

This involves comparing current data distributions to a saved baseline (typically the training set).

- Pro: Easy to implement

- Con: Doesn’t account for gradual evolution

Rolling Window Monitoring

Here, data distributions are compared across sliding time windows.

- Useful for detecting slow drift

- Requires a more complex implementation
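One simple way to picture rolling window monitoring: split the incoming values into consecutive windows and score each window against the one before it. The sketch below uses the shift in the mean, measured in standard deviations of the previous window, as a stand-in drift score. The scoring rule, window size, and data are assumptions; any of the statistical tests above could be used in its place.

```python
from statistics import mean, pstdev

def rolling_window_scores(values, window):
    """Score each window against the window immediately before it."""
    scores = []
    for start in range(window, len(values) - window + 1, window):
        previous = values[start - window:start]
        current = values[start:start + window]
        spread = pstdev(previous) or 1.0  # guard against zero spread
        scores.append(abs(mean(current) - mean(previous)) / spread)
    return scores

# Twelve daily readings, grouped into three 4-day windows (illustrative data).
readings = [10, 11, 9, 10, 10, 9, 11, 10, 14, 15, 13, 14]
scores = rolling_window_scores(readings, window=4)
print([round(score, 2) for score in scores])
```

Comparing only adjacent windows can miss very gradual change, since each step looks small. Comparing against a window further back, or also keeping a fixed baseline, is a common complement.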

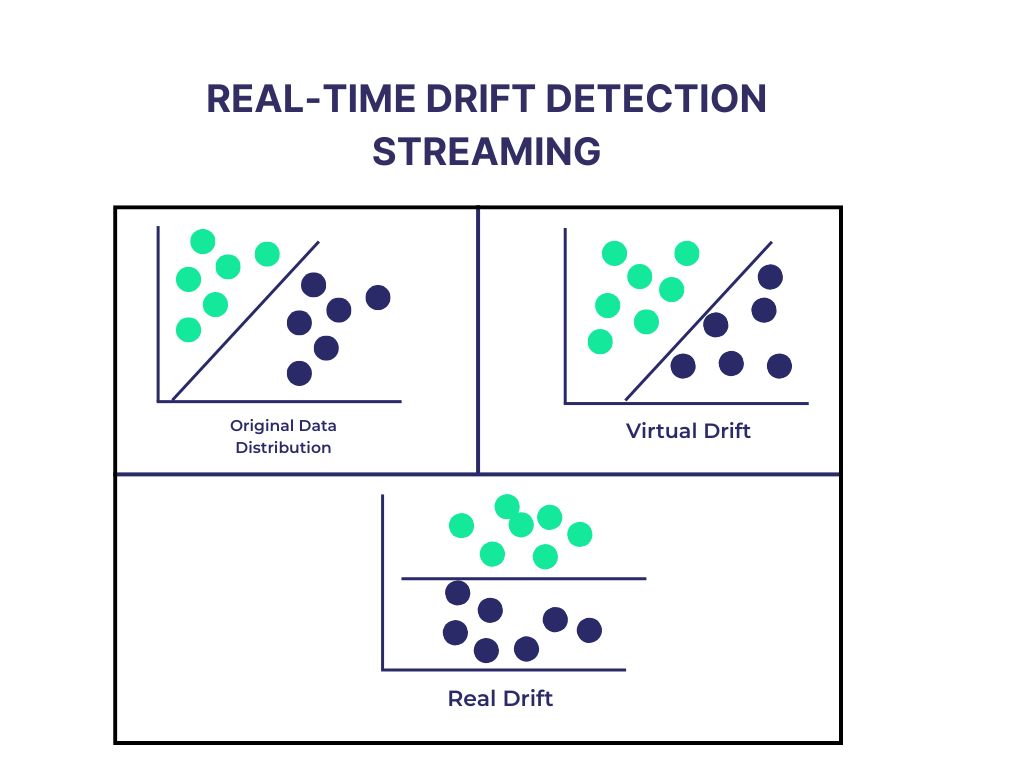

Real-Time Drift Detection

Streaming frameworks allow for live monitoring. Tools like River and Alibi Detect support this.

- Best for critical systems that require immediate reaction

- Can be computationally expensive

Tools and Platforms for Detection

Choosing the right tool depends on your tech stack, data volume, and real-time needs.

| Tool | Type | Use Case |
| --- | --- | --- |
| Evidently AI | Open-source | Create drift dashboards and reports |
| River | Open-source | Stream data monitoring and online learning |
| Alibi Detect | Open-source | Advanced statistical drift detectors |
| Fiddler AI | Commercial | Model observability and interpretability |
| WhyLabs | Commercial | Real-time ML monitoring at scale |

Each of these tools allows for integration into MLOps pipelines and can help alert teams to potential drift events before performance suffers.

Visualization for Drift Detection

It’s easier to act on what you can see. Visualization tools help stakeholders understand drift:

- Histograms of feature distributions

- Time series plots showing drift over time

- PSI dashboards

- Color-coded risk scores for features

Effective visualization is the bridge between technical detection and executive understanding.

How to Handle Data Drift

Once data drift is detected, the next step is deciding how to respond. Handling drift effectively can restore model performance, maintain business reliability, and reduce the risk of poor decision-making.

There are multiple ways to handle drift depending on its cause, frequency, and impact. A one-size-fits-all approach doesn’t work—what’s needed is a tailored response strategy based on the model’s environment and the nature of the drift.

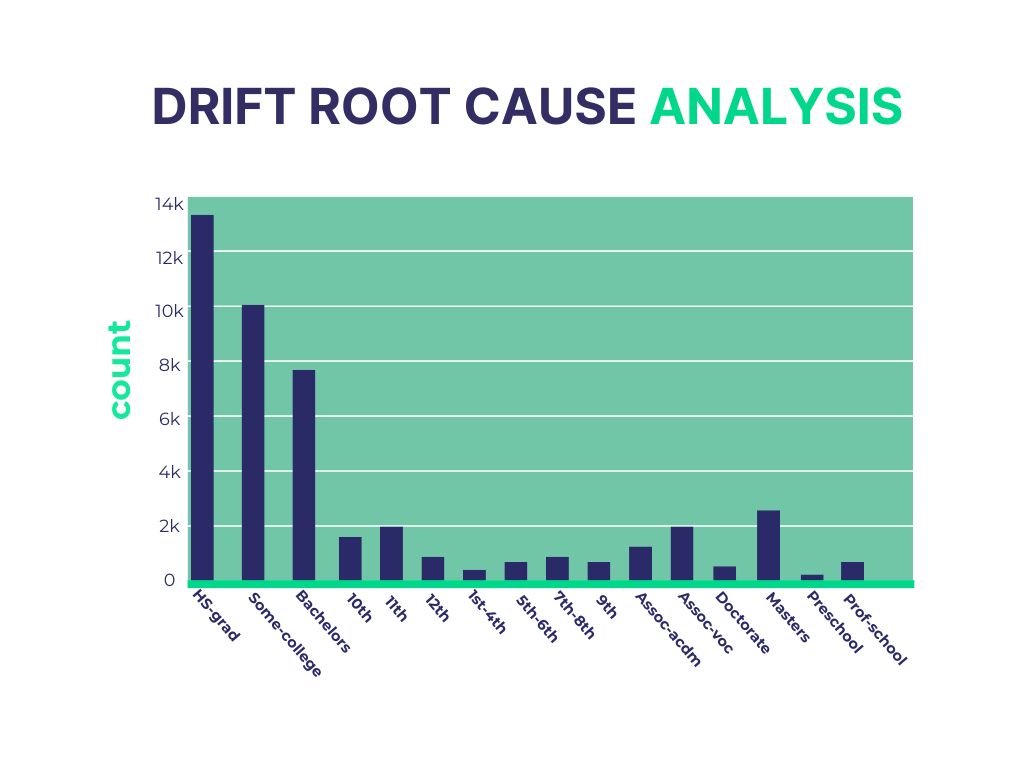

1. Drift Root Cause Analysis

Before jumping into fixes, it’s essential to analyze the source of the drift:

- Which feature(s) changed the most? Use tools like SHAP or drift attribution tools to identify key contributors.

- When did the change begin? Segment data by date ranges or time intervals.

- Is the shift global or local? Sometimes drift only affects certain demographics or geographies.

- Is the target variable stable? If not, it might be concept drift, not just data drift.

Understanding the source gives clarity on the type of intervention needed.

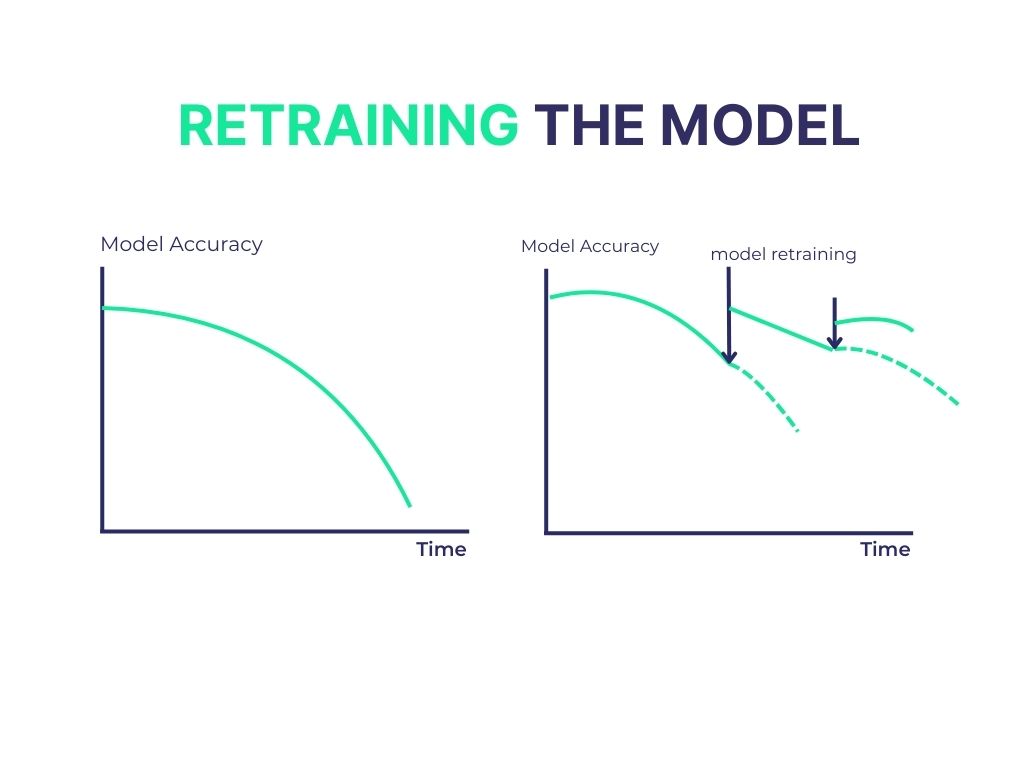

2. Retraining the Model

Retraining is the most common and effective way to combat drift, especially if the model’s structure is still valid.

- Collect New Data: Include recent data that reflects the new input distribution.

- Sliding Window Strategy: Use data from the most recent ‘X’ days/weeks to keep the model relevant.

- Rebalancing Datasets: Address shifts in class imbalance or target frequencies.

- Validation on Live Data: Always test retrained models on the most recent, real-world data before deployment.
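The sliding window and live-data validation points can be combined into one time-based split: train on the recent window, but hold back the newest slice for validation. This is a minimal sketch; the record layout (a `timestamp` key), the function name, and the 30-day and 7-day sizes are assumptions.

```python
from datetime import datetime, timedelta

def recent_train_validation_split(records, now, window_days, holdout_days):
    """Train on the recent window, validate on the newest slice of it."""
    window_start = now - timedelta(days=window_days)
    holdout_start = now - timedelta(days=holdout_days)
    train = [r for r in records if window_start <= r["timestamp"] < holdout_start]
    validation = [r for r in records if holdout_start <= r["timestamp"] <= now]
    return train, validation

now = datetime(2024, 1, 31)
# One hypothetical record per day for the last 100 days.
records = [{"timestamp": now - timedelta(days=age), "age_days": age} for age in range(100)]

train, validation = recent_train_validation_split(records, now, window_days=30, holdout_days=7)
print(len(train), len(validation))
```

Splitting by time rather than at random keeps the validation set closer to what the model will face after deployment.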

In some cases, retraining alone isn’t enough—additional changes to the model architecture or features may be required.

3. Feature Engineering Revisions

Sometimes the way you process features contributes to drift. For example, a feature that bins users by age may become less effective if user demographics shift.

- Update binning strategies (e.g., from 10-year bins to 5-year bins)

- Remove obsolete features or interactions

- Add new features that explain emerging patterns

Use feature importance scores and correlation analysis to revise engineering logic meaningfully.

4. Change Model Architecture

If retraining and feature adjustments don’t solve the issue, you may need a more flexible or adaptive model.

- Switch from static models to ensemble methods that are more robust to variation.

- Use online learning models like those supported by River, which learn incrementally with each new data point.

- Introduce temporal modeling for time-sensitive problems (e.g., LSTM for sequential data).

This is more resource-intensive but may be necessary for dynamic environments.

5. Version Control and Shadow Models

Another strategy is to run the old model alongside a new one (shadow model).

- Use A/B testing to evaluate performance in real-world settings.

- Gradually switch traffic from the old model to the new one.

- Compare predictions to detect if retraining has resolved the issue.

Shadow modeling is particularly helpful when business decisions are high-stakes and mistakes can be costly.
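In code, a shadow setup boils down to scoring every request with both models while only the live model's answer is returned. The sketch below tracks how often the two disagree. The models are stand-in functions with made-up approval rules, and all names are hypothetical.

```python
def shadow_compare(live_model, shadow_model, requests):
    """Serve the live model's predictions and measure shadow disagreement."""
    served = []
    disagreements = 0
    for features in requests:
        live_prediction = live_model(features)
        shadow_prediction = shadow_model(features)  # logged only, never served
        served.append(live_prediction)
        if live_prediction != shadow_prediction:
            disagreements += 1
    rate = disagreements / len(requests) if requests else 0.0
    return served, rate

# Stand-in models: approve a loan above an income cutoff (hypothetical rules).
def live_model(features):
    return features["income"] > 50_000

def shadow_model(features):
    return features["income"] > 40_000

requests = [{"income": 30_000}, {"income": 45_000}, {"income": 60_000}, {"income": 42_000}]
served, disagreement_rate = shadow_compare(live_model, shadow_model, requests)
print(served, disagreement_rate)
```

A high disagreement rate is not good or bad on its own. It tells you where to look once the true outcomes arrive.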

6. Automating the Handling Pipeline

If your business sees frequent drift, it’s smart to automate your response:

- Build MLOps pipelines that monitor performance, trigger retraining jobs, and validate new models.

- Schedule weekly or monthly performance audits.

- Integrate CI/CD workflows that auto-deploy new models after validation.

Frameworks like MLflow, Tecton, and Kubeflow can help orchestrate this end-to-end.

7. Collaborate with Domain Experts

Often, understanding why data is drifting requires human expertise. Work with product managers, sales teams, or field operators to interpret what’s changing in the real world.

For example:

- Is there a new product being promoted?

- Has a marketing campaign influenced user behavior?

- Are there seasonal or cultural events influencing data?

This collaboration ensures model decisions remain grounded in context—not just stats.

Summary of Handling Techniques

| Strategy | Best For | Tools/Examples |
| --- | --- | --- |
| Retraining | Frequent or gradual drift | Scikit-learn, XGBoost, TensorFlow |
| Online Learning | Real-time systems | River, Vowpal Wabbit |
| Feature Update | Emerging trends | SHAP, EDA tools |
| Model Change | Complex drift | AutoML, Deep Learning models |
| Shadow Testing | High-risk models | Custom pipelines, A/B test frameworks |
| Automation | Large-scale systems | MLflow, Airflow, Tecton |

Each of these strategies addresses specific challenges—often, the best results come from combining multiple techniques.

How to Mitigate Data Drift

While handling data drift is essential once it’s detected, an even better strategy is to proactively mitigate it before it causes serious issues. Mitigation involves designing systems and processes that are resilient, adaptable, and equipped to anticipate change.

Here are the most effective mitigation approaches:

1. Design Robust Data Pipelines

One of the first lines of defense is building pipelines that are accurate, consistent, and auditable.

- Standardize feature engineering: Ensure features are constructed the same way in both training and production.

- Validate preprocessing scripts: Keep logic identical in all environments to avoid training-serving skew.

- Log every transformation step: Maintain traceability for audit and debugging.

Example: If you scale input features during training, make sure the exact scaling logic is applied in production using version-controlled code.
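A minimal sketch of that idea: compute the scaling parameters once at training time, save them as an artifact, and have the serving code load the artifact instead of recomputing anything. The function names and the JSON format are assumptions for illustration.

```python
import json
from statistics import mean, pstdev

def fit_scaler(values):
    """Compute scaling parameters from training data only."""
    return {"mean": mean(values), "std": pstdev(values) or 1.0}

def apply_scaler(value, params):
    """Apply the stored parameters; shared by training and serving code."""
    return (value - params["mean"]) / params["std"]

# Training time: fit once and store the parameters next to the model.
training_ages = [20.0, 30.0, 40.0]
params = fit_scaler(training_ages)
artifact = json.dumps(params)

# Serving time: load the stored parameters instead of recomputing them.
serving_params = json.loads(artifact)
scaled = apply_scaler(30.0, serving_params)
print(scaled)
```

The key design choice is that production never calls `fit_scaler`. Refitting on live data would quietly change the meaning of the feature and hide the very drift you want to detect.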

2. Implement Adaptive Learning Strategies

Traditional models are retrained periodically. Adaptive models, on the other hand, learn continuously.

- Online Learning Models: Continuously adjust to new patterns in real-time.

- Incremental Retraining: Instead of waiting weeks or months, retrain your model on recent data daily or weekly.

Tools like River, Vowpal Wabbit, and Scikit-Multiflow support these strategies.
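To show what “learning continuously” means in practice, here is a tiny pure-Python regressor that updates after every example. It is a hand-written stand-in, not the API of any of the libraries above, and the synthetic stream (where the true relationship changes from y = 2x to y = 3x) is an assumption for the demo.

```python
class OnlineLinearRegressor:
    """Tiny SGD regressor that updates after every example (illustrative only)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_one(self, x):
        return self.bias + sum(w * v for w, v in zip(self.weights, x))

    def learn_one(self, x, y):
        # One gradient step on the squared error for this single example.
        error = self.predict_one(x) - y
        self.weights = [w - self.learning_rate * error * v for w, v in zip(self.weights, x)]
        self.bias -= self.learning_rate * error

def stream(slope, steps):
    """Synthetic stream where the target is slope * x."""
    inputs = (0.0, 0.5, 1.0)
    for step in range(steps):
        x = inputs[step % len(inputs)]
        yield [x], slope * x

model = OnlineLinearRegressor(n_features=1)
for x, y in stream(slope=2.0, steps=5000):
    model.learn_one(x, y)
before_shift = model.predict_one([1.0])

# The relationship changes; the model keeps learning and follows it.
for x, y in stream(slope=3.0, steps=5000):
    model.learn_one(x, y)
after_shift = model.predict_one([1.0])
print(round(before_shift, 3), round(after_shift, 3))
```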

3. Integrate Feedback Loops

Data drift often becomes apparent after model predictions are proven wrong. Closing the loop on this feedback is critical.

- Collect Delayed Labels: For example, in fraud detection, you may not know if a transaction was truly fraudulent until days later.

- Incorporate Outcome Feedback: Use these delayed labels to update models accordingly.

- Human-in-the-loop: For critical cases, let humans verify predictions and provide training feedback.

Feedback loops increase model awareness of changing patterns, ensuring continual improvement.

4. Monitor Data and Model in Tandem

It’s not enough to monitor just the input features—you need to track:

- Input distributions (for data drift)

- Output probabilities and predictions (for prediction drift)

- Relationship between inputs and outputs (for concept drift)

A comprehensive monitoring stack combines:

- Summary statistics and visualizations

- Real-time alerts for distribution shifts

- Performance benchmarking on current vs past data

Tools like WhyLabs, Fiddler, and Evidently AI are excellent choices for integrated drift monitoring.

5. Regularly Update Training Datasets

Your training data needs to reflect real-world data. Stale datasets are a major cause of drift.

- Use sliding windows to keep datasets fresh

- Remove outdated or low-variance data

- Continuously add recent examples that reflect current trends

Example: A news recommendation engine should constantly add recent articles and user behaviors to its dataset, or else it risks recommending irrelevant content.

6. Use Ensemble and Meta-Learning Models

Robust models can mitigate drift better by combining multiple learning methods.

- Ensemble Methods: Blend predictions from multiple models to buffer against one model’s weaknesses.

- Meta-learning Models: Learn how to learn—these models adjust not just parameters, but learning strategies.

These techniques provide extra stability in volatile environments.

7. Establish a Governance Framework

Organizations with strong AI governance practices are better prepared to identify, monitor, and mitigate drift.

- Create monitoring policies: Set drift detection frequency, thresholds, and alerting procedures.

- Assign responsibility: Designate model owners who are accountable for performance.

- Audit regularly: Document how drift was handled and decisions were made.

This is especially critical in regulated industries.

Summary Table: Mitigation Techniques

| Mitigation Approach | Purpose | Tools/Examples |
| --- | --- | --- |
| Robust Pipelines | Prevent processing errors | Airflow, dbt, Dataform |
| Adaptive Learning | Continuous model updates | River, Vowpal Wabbit |
| Feedback Loops | Learn from real-world outcomes | Custom retraining APIs |
| Full-Stack Monitoring | End-to-end drift detection | WhyLabs, Fiddler, Evidently |
| Dataset Refreshing | Keep training relevant | Snowflake, BigQuery, custom ETL |
| Ensemble Models | Reduce volatility | LightGBM, CatBoost, XGBoost |
| Governance Policies | Maintain control and accountability | Model cards, data sheets |

Mitigating drift is not just about tools; it’s about mindset. Teams that assume change is inevitable are more successful at keeping AI systems useful and trustworthy.

Best Practices for Monitoring and Managing Drift

The best way to ensure long-term model success is to integrate drift detection and response into your ML lifecycle from the start. Organizations that adopt these best practices are more likely to catch issues early, respond quickly, and keep their models performing reliably in production.

1. Monitor Continuously, Not Occasionally

Machine learning systems are dynamic by nature. Therefore, one-time checks or quarterly audits are not enough. Set up monitoring processes that run continuously and flag changes as they occur.

- Use scheduled batch jobs for nightly checks.

- Deploy streaming monitors for real-time systems.

- Include drift metrics in your CI/CD pipelines.

2. Start Simple, Then Scale

It’s easy to over-engineer your first drift detection system. Begin with basic statistical summaries and dashboards.

- Monitor mean, standard deviation, and missing value counts.

- Track frequency distributions for categorical features.

- Expand to distance metrics and multivariate analysis later.

Tools like Evidently AI can help generate simple dashboards with minimal setup.
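If you want to see what the simplest version looks like before adopting a tool, the basic summaries above fit in a few lines of standard-library Python. The function names and sample values below are illustrative assumptions.

```python
from collections import Counter
from statistics import mean, stdev

def numeric_summary(values):
    """Mean, standard deviation, and missing count for one numeric feature."""
    present = [value for value in values if value is not None]
    return {
        "mean": mean(present),
        "std": stdev(present),
        "missing": len(values) - len(present),
    }

def category_shares(values):
    """Share of records in each category."""
    counts = Counter(values)
    total = sum(counts.values())
    return {category: count / total for category, count in counts.items()}

# Hypothetical snapshots of one numeric and one categorical feature.
session_minutes = [10.0, 12.0, None, 11.0]
customer_tiers = ["Gold", "Silver", "Silver", "Bronze"]

summary = numeric_summary(session_minutes)
shares = category_shares(customer_tiers)
print(summary)
print(shares)
```

Run the same functions on the training data and on each new batch, then compare the outputs side by side.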

3. Segment Monitoring by Time, Geography, and Demographics

Drift doesn’t always occur across the entire dataset. It might only affect specific user groups or regions.

- Monitor separately for each customer segment (e.g., new vs. returning users).

- Track behavior by region, especially if your business is global.

- Analyze drift patterns across product categories or usage contexts.

Segmentation can uncover local drifts that would be hidden in overall metrics.
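Here is a small sketch of how an overall metric can hide local drift. Two regions move in opposite directions, so the overall average does not change at all. The row layout, field names, and numbers are made up for the example.

```python
from collections import defaultdict
from statistics import mean

def mean_by_segment(rows, segment_key, value_key):
    """Average of one feature within each segment."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[segment_key]].append(row[value_key])
    return {segment: mean(values) for segment, values in groups.items()}

def segment_shifts(baseline_rows, current_rows, segment_key, value_key):
    """Change in the per-segment mean between baseline and current data."""
    baseline = mean_by_segment(baseline_rows, segment_key, value_key)
    current = mean_by_segment(current_rows, segment_key, value_key)
    return {seg: current[seg] - baseline[seg] for seg in baseline if seg in current}

# Hypothetical order values by region.
baseline_rows = [
    {"region": "north", "order_value": 10.0}, {"region": "north", "order_value": 10.0},
    {"region": "south", "order_value": 10.0}, {"region": "south", "order_value": 10.0},
]
current_rows = [
    {"region": "north", "order_value": 8.0}, {"region": "north", "order_value": 8.0},
    {"region": "south", "order_value": 12.0}, {"region": "south", "order_value": 12.0},
]

overall_shift = (
    mean(row["order_value"] for row in current_rows)
    - mean(row["order_value"] for row in baseline_rows)
)
shifts = segment_shifts(baseline_rows, current_rows, "region", "order_value")
print(overall_shift, shifts)
```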

4. Set Thresholds and Automate Alerts

Rather than waiting for manual reviews, establish clear drift thresholds and set up automated alerts when those are crossed.

- For PSI: trigger alerts at >0.1 (moderate drift) and >0.25 (significant drift).

- Use p-value thresholds (e.g., <0.05) for hypothesis-based tests like Chi-square.

- Integrate with Slack, email, or observability dashboards for instant notifications.
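The thresholds above translate directly into a small alerting routine. In this sketch the `send` callback defaults to `print`; in a real setup it would be replaced by whatever posts to Slack or email, which is left out here. The feature names and metric values are hypothetical.

```python
PSI_MODERATE = 0.1
PSI_SIGNIFICANT = 0.25
P_VALUE_ALPHA = 0.05

def psi_level(psi):
    """Map a PSI value to an alert level, or None if below both thresholds."""
    if psi > PSI_SIGNIFICANT:
        return "significant"
    if psi > PSI_MODERATE:
        return "moderate"
    return None

def collect_alerts(psi_by_feature, p_value_by_feature):
    """Build alert messages for every metric that crosses its threshold."""
    alerts = []
    for feature, psi in psi_by_feature.items():
        level = psi_level(psi)
        if level is not None:
            alerts.append(f"[{level}] PSI for '{feature}' is {psi:.2f}")
    for feature, p_value in p_value_by_feature.items():
        if p_value < P_VALUE_ALPHA:
            alerts.append(f"[significant] p-value for '{feature}' is {p_value:.3f}")
    return alerts

def notify(alerts, send=print):
    """Deliver alerts through the given callback (print by default)."""
    for message in alerts:
        send(message)

# Hypothetical metric values from a nightly drift job.
psi_by_feature = {"session_time": 0.31, "age": 0.12, "basket_size": 0.04}
p_value_by_feature = {"customer_tier": 0.003, "region": 0.40}

alerts = collect_alerts(psi_by_feature, p_value_by_feature)
notify(alerts)
```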

Automation ensures nothing slips through the cracks.

5. Incorporate Explainability with Monitoring

When drift is detected, it’s vital to understand what changed and why.

- Use SHAP, LIME, or other explainability tools to observe shifts in feature importance.

- Combine drift reports with model explanation dashboards.

- Explain drift findings clearly to non-technical stakeholders.

This bridges the gap between technical teams and business leaders.

6. Maintain Version Control for Models and Data

Keep detailed records of all versions of your training datasets, models, and feature engineering logic.

- Use Git or DVC to version control data transformations.

- Archive past model versions with metadata (date, accuracy, drift metrics).

- Maintain a registry of live vs test models.

Versioning helps you roll back quickly and understand model history.

7. Review and Retrain Proactively

Don’t wait until model accuracy drops significantly. Build a proactive retraining schedule.

- Weekly or monthly retraining based on data volume and sensitivity.

- Always validate new models against recent data.

- Document retraining decisions and outcomes.

Use scheduled Airflow DAGs or CI/CD retraining pipelines for automation.

8. Collaborate Across Teams

Drift detection is not just a data science problem. It requires input from:

- Product managers to identify market changes

- Sales and marketing to explain customer behavior

- Data engineers to ensure pipeline integrity

Encouraging cross-functional collaboration improves drift detection and recovery outcomes.

9. Educate Stakeholders on Drift and Its Impacts

Not everyone understands what drift is—or why it matters.

- Conduct internal workshops or training sessions.

- Include drift metrics in executive dashboards.

- Share case studies showing real business impacts from unaddressed drift.

Raising awareness creates a culture of model accountability.

10. Adopt MLOps Best Practices

Modern MLOps pipelines streamline every part of drift management.

- Automate model evaluation and monitoring

- Schedule retraining jobs with Airflow or Prefect

- Use tools like MLflow for experiment tracking and model registry

A strong MLOps culture ensures that handling drift isn’t reactive—it’s routine.

Conclusion: Keep Your Models Smarter, Longer

Data drift doesn’t come with warnings – but the impact can be huge. One day, your model works perfectly. The next, it’s making decisions based on patterns that no longer exist. That’s where awareness and action matter most.

At Symufolk, we know real-world data is always changing. That’s why we help businesses not just detect drift early, but stay ahead of it. With the right tools, regular checks, and smart retraining, your machine learning models can stay sharp, reliable, and aligned with your goals.

Because in AI, staying accurate isn’t luck – it’s strategy. And we’re here to help you get it right.

Frequently Asked Questions

1. What is an example of concept drift?

A recommendation model trained on pre-pandemic travel behavior may fail when user interests change dramatically post-pandemic. This change in the relationship between user preferences (inputs) and suggested destinations (outputs) is a classic case of concept drift.

2. What is the difference between feature drift and data drift?

Feature drift refers to a change in the distribution of a specific input variable. Data drift refers to any shift in the overall input dataset. Feature drift is a subset of data drift, but data drift may involve multiple variables or more complex distributional changes.

3. What are the three types of drift?

- Covariate Drift – Change in input features’ distribution.

- Prior Probability Drift – Change in the distribution of target classes.

- Concept Drift – Change in the relationship between inputs and outputs.

4. How can concept drift and data drift impact a machine learning model?

When data drift or concept drift occurs, the model starts making predictions based on outdated or incorrect assumptions. This reduces accuracy, leads to flawed decisions, and can cost the business time, money, and trust. That’s why drift detection and model maintenance are critical in production ML systems.

5. How is drift monitoring different from model evaluation?

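Model evaluation measures performance against labeled data and is usually periodic and offline. Drift monitoring runs continuously on production data, often before labels are available, and is designed to detect changes as they happen.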

6. Is drift always bad?

Not necessarily. Drift can reflect natural changes in the environment or user behavior. What matters is how quickly you detect and respond to it.