As machine learning continues to mature, organizations are no longer just experimenting with models; they are deploying them at scale to solve real-world problems. Deploying a model, however, is not the end of the journey but the beginning. The real challenge lies in managing the model lifecycle in production, where reliability, scalability, and maintainability become paramount. This is where MLOps (Machine Learning Operations) plays a crucial role.

MLOps is a set of practices that brings together machine learning, software engineering, and DevOps to streamline the end-to-end process of developing, deploying, monitoring, and maintaining machine learning models. In this post, we’ll walk through the key principles of MLOps, the best practices you should follow, and how to implement a robust and scalable MLOps pipeline for your AI projects.

What Is MLOps and How Does It Differ from DevOps?

MLOps stands for Machine Learning Operations and can be thought of as the application of DevOps principles to machine learning workflows. While DevOps focuses on streamlining software development and deployment, MLOps is uniquely tailored to the challenges of building and maintaining ML models.

Unlike traditional software, ML models are not defined by code alone; they are learned from data. This means a model whose code never changes can still degrade when the data it sees in production drifts away from the data it was trained on. Moreover, the lifecycle of an ML model includes additional steps like data collection, feature engineering, model training, hyperparameter tuning, evaluation, and retraining. These steps introduce new complexities that DevOps alone does not address.

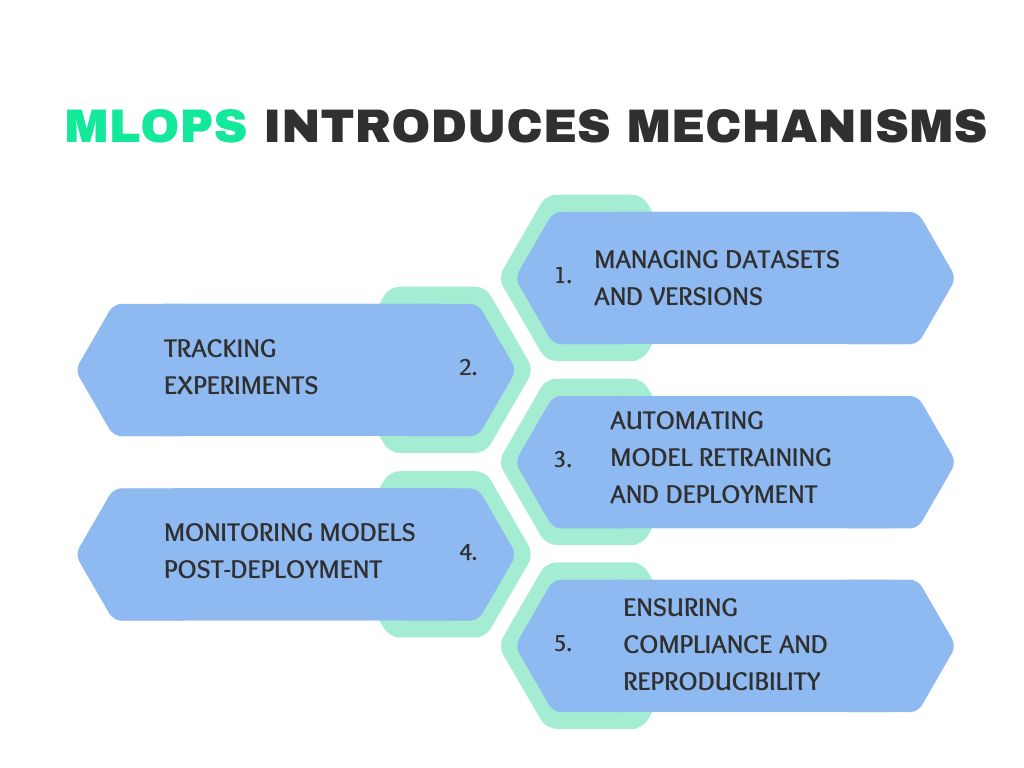

MLOps introduces mechanisms for:

- Managing datasets and versions

- Tracking experiments

- Automating model retraining and deployment

- Monitoring models post-deployment

- Ensuring compliance and reproducibility

MLOps bridges the gap between data science teams, DevOps engineers, and business stakeholders to ensure machine learning models are not just built but also maintained effectively over time.

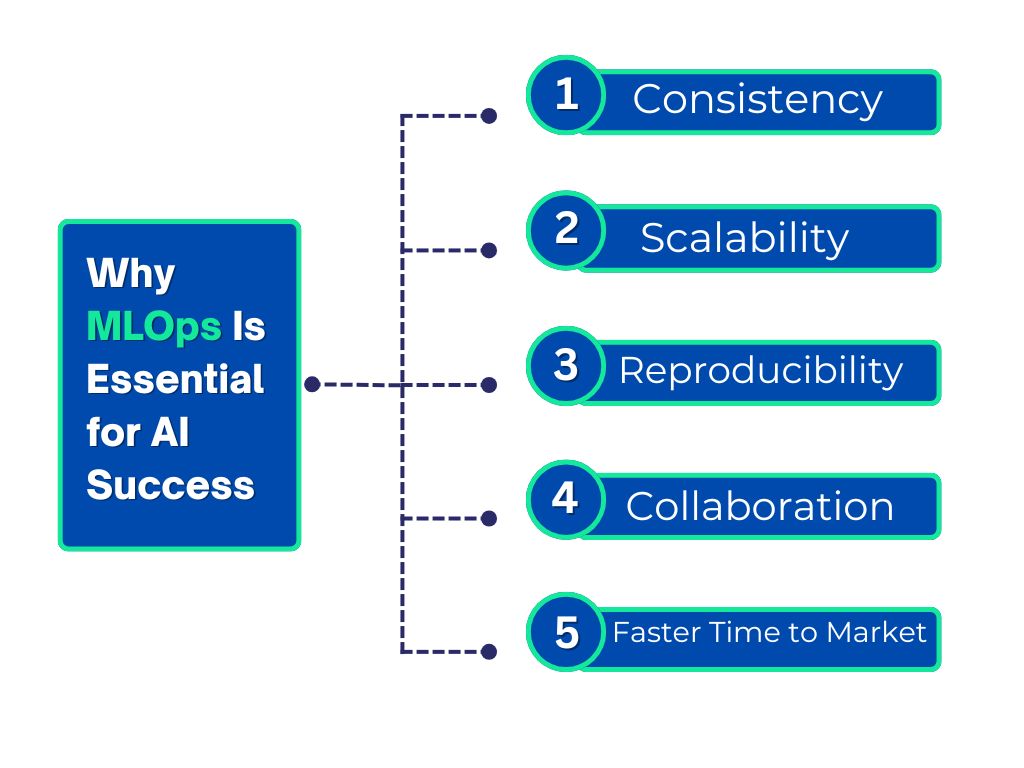

Why MLOps Is Essential for AI Success

Many organizations struggle to transition their ML projects from proof-of-concept to production. Without proper operational support, models become outdated, inaccurate, and unmanageable. MLOps offers a structured way to address these issues.

Here are some reasons why MLOps is critical:

- Consistency: By automating the development and deployment pipeline, MLOps ensures consistency across different environments—from development to production.

- Scalability: MLOps enables organizations to scale their AI initiatives across multiple teams and use cases.

- Reproducibility: With proper version control for code, data, and models, it becomes far easier to reproduce past experiments and results.

- Collaboration: MLOps fosters collaboration between data scientists, engineers, and IT teams through standardized workflows and tools.

- Faster Time to Market: Automated pipelines reduce the time required to move models from development to production.

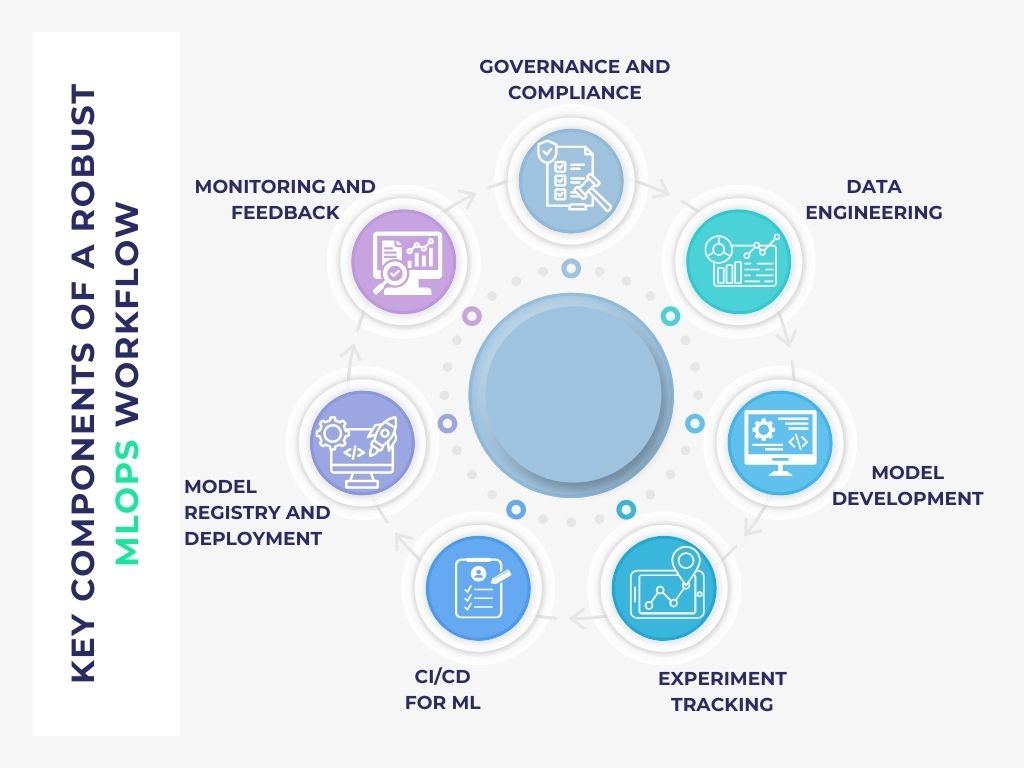

Key Components of a Robust MLOps Workflow

An effective MLOps framework encompasses several interconnected components that ensure end-to-end model lifecycle management:

- Data Engineering: This includes data collection, cleansing, transformation, and versioning. High-quality, reliable data is the foundation of any successful ML model.

- Model Development: Data scientists experiment with different algorithms, features, and hyperparameters to create accurate models.

- Experiment Tracking: Tools like MLflow or Weights & Biases help track experiments, log metrics, and compare model performance over time.

- CI/CD for ML: Continuous integration and continuous deployment pipelines ensure that models can be automatically tested and deployed with minimal manual intervention.

- Model Registry and Deployment: Once a model passes validation, it’s registered and deployed to a production environment where it can serve predictions.

- Monitoring and Feedback: After deployment, models must be monitored for performance degradation, drift, and anomalies.

- Governance and Compliance: Logging, auditing, and access control mechanisms ensure that organizations meet regulatory and business requirements.

MLOps Best Practices

To make the most of your MLOps investment, it’s essential to follow a set of best practices that promote efficiency, reliability, and transparency throughout the ML lifecycle.

1. Standardize Workflows Across Teams

Standardization streamlines processes and improves collaboration. Shared project templates, naming conventions, and development guidelines make it easier for teams to work together, onboard new members, and keep the model lifecycle consistent.

2. Implement Version Control for Code, Data, and Models

One of the foundational principles of MLOps is version control. Just like software code, models and datasets evolve over time. Use tools like Git for source code, DVC for data versioning, and MLflow for tracking model versions. This ensures reproducibility and provides a history of changes.
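Tools like DVC take care of data versioning for you, but the idea underneath is content addressing: a dataset's version is derived from the bytes it contains. The sketch below illustrates that idea with the Python standard library only, assuming the dataset is a directory of plain files; `snapshot` and `dataset_version` are hypothetical helper names, not DVC's API.

```python
import hashlib
import json
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def snapshot(data_dir: Path) -> dict[str, str]:
    """Manifest mapping each file's path (relative to data_dir) to its hash."""
    return {
        p.relative_to(data_dir).as_posix(): file_digest(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def dataset_version(manifest: dict[str, str]) -> str:
    """Short version ID that changes if any file is added, removed, renamed, or edited."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


# Usage (hypothetical paths): write the manifest next to the code and commit it.
# manifest = snapshot(Path("data/raw"))
# Path("data.manifest.json").write_text(json.dumps(manifest, indent=2))
# print(dataset_version(manifest))
```

Committing the manifest to Git alongside the training code ties every model to the exact data it was trained on; DVC follows a similar pattern, committing small metadata files to Git while the data itself lives in remote storage.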

3. Automate the ML Lifecycle with CI/CD

Automation is a key pillar of MLOps. Create CI/CD pipelines that automate:

- Data ingestion and preprocessing

- Model training and testing

- Model validation and evaluation

- Model deployment to production environments

Automation reduces the risk of human error, accelerates deployment cycles, and ensures consistent processes.
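The automation steps above can be sketched as a chain of stages that share a context and stop at the first failure, so an under-performing model never reaches the deploy step. This is a toy, in-process illustration: the stage names, the threshold "model", and the `min_accuracy` setting are hypothetical, and in practice each stage would run as a job in your CI/CD system or orchestrator.

```python
from typing import Any, Callable

Context = dict[str, Any]
Stage = Callable[[Context], Context]


class StageFailed(Exception):
    """Raised by a stage to halt the pipeline, e.g. when a quality check fails."""


def ingest(ctx: Context) -> Context:
    # Hypothetical stand-in for pulling raw records from a database or API.
    ctx["raw"] = [{"x": 1.0, "y": 0}, {"x": 2.0, "y": 1}, {"x": None, "y": 1}]
    return ctx


def preprocess(ctx: Context) -> Context:
    # Drop records with a missing feature value.
    ctx["clean"] = [r for r in ctx["raw"] if r["x"] is not None]
    return ctx


def train(ctx: Context) -> Context:
    # Toy "model": predict 1 when x exceeds the mean of the training x values.
    xs = [r["x"] for r in ctx["clean"]]
    ctx["threshold"] = sum(xs) / len(xs)
    return ctx


def validate(ctx: Context) -> Context:
    # Scores on the same toy data for brevity; use a held-out set in practice.
    preds = [int(r["x"] > ctx["threshold"]) for r in ctx["clean"]]
    ctx["accuracy"] = sum(p == r["y"] for p, r in zip(preds, ctx["clean"])) / len(preds)
    if ctx["accuracy"] < ctx["min_accuracy"]:
        raise StageFailed(f"accuracy {ctx['accuracy']:.2f} < {ctx['min_accuracy']}")
    return ctx


def deploy(ctx: Context) -> Context:
    ctx["deployed"] = True  # Placeholder for pushing the model to a serving target.
    return ctx


def run_pipeline(stages: list[Stage], ctx: Context) -> Context:
    """Run stages in order; a StageFailed exception stops everything after it."""
    for stage in stages:
        ctx = stage(ctx)
    return ctx


PIPELINE = [ingest, preprocess, train, validate, deploy]
```

Calling `run_pipeline(PIPELINE, {"min_accuracy": 0.9})` completes and sets `deployed`; raising `min_accuracy` above what the model achieves makes `validate` raise `StageFailed`, so `deploy` never runs.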

4. Monitor Models in Production

Even highly accurate models can degrade over time as real-world conditions change. Monitor models continuously to detect issues like:

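- Data and concept drift: data drift occurs when production inputs differ significantly from the training data; concept drift occurs when the relationship between inputs and the target changes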

- Performance degradation: drops in accuracy or increases in latency

- Infrastructure issues: server errors or response failures

Use monitoring tools like Prometheus, Grafana, and Evidently to set up alerts and dashboards.
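Drift detection usually comes down to comparing a feature's distribution in production with its distribution at training time. One widely used score is the Population Stability Index, PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the shares of training and production values falling into bin i. A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, though these cut-offs are conventions rather than standards. The sketch below is a standard-library version, assuming numeric features and quantile-based bins; it is not how Evidently or any other tool implements the metric.

```python
import math
from bisect import bisect_right

PSI_ALERT = 0.25  # Common rule-of-thumb threshold, not a universal standard.


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` (production) vs `expected` (training).

    Bin edges are quantiles of the training sample, so each bin holds roughly
    the same share of training data.
    """
    ref = sorted(expected)
    edges = [ref[len(ref) * k // bins] for k in range(1, bins)]  # bins - 1 interior cuts

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for v in sample:
            counts[bisect_right(edges, v)] += 1  # bin index in 0..bins-1
        # Floor at eps so an empty bin doesn't cause log(0) or division by zero.
        return [max(c / len(sample), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A monitoring job would typically compute this per feature over a recent window of production data and raise an alert when the score crosses the chosen threshold.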

5. Ensure Reproducibility and Environment Consistency

Reproducibility is essential for auditing and retraining. Use Docker containers to package code and dependencies, and tools like Conda or Pipenv to manage environments. This helps ensure that models behave the same way in development, testing, and production.
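Containers and environment managers pin the software; inside the training code, two cheap habits complement them: fixing random seeds and recording the exact environment alongside each run. A minimal standard-library sketch with hypothetical function names; if your stack uses NumPy or PyTorch, seed those as well (for example by creating generators with `numpy.random.default_rng(seed)` and calling `torch.manual_seed(seed)`).

```python
import platform
import random
from importlib import metadata
from typing import Optional


def set_seeds(seed: int) -> None:
    """Seed Python's global RNG; seed NumPy, PyTorch, etc. here too if you use them."""
    random.seed(seed)


def environment_fingerprint(packages: list[str]) -> dict:
    """Capture interpreter, OS, and package versions to store with a training run."""
    versions: dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # Not installed in this environment.
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
    }
```

Storing the fingerprint next to the run's metrics makes it much easier to see what changed when a past result cannot be reproduced.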

6. Encourage Collaboration Through Shared Tools

Break down silos between data scientists, engineers, and DevOps teams. Use collaboration platforms like GitHub, JupyterHub, and Slack integrations to maintain open communication. Clearly define roles and responsibilities using a RACI matrix (Responsible, Accountable, Consulted, Informed).

7. Validate Data and Model Inputs at Every Stage

Poor data quality leads to poor model performance. Implement validation checks to detect missing values, schema mismatches, and outliers. Automate these checks using tools like Great Expectations or Deequ.
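Great Expectations and Deequ let you declare checks like these and run them on every batch. To show what such checks do, here is a plain-Python sketch, assuming records arrive as dictionaries and each column has a declared type, nullability, and optional range; `ColumnRule` and `validate_rows` are hypothetical names, not either library's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ColumnRule:
    dtype: type
    nullable: bool = False
    min_value: Optional[float] = None
    max_value: Optional[float] = None


def validate_rows(rows: list[dict], schema: dict[str, ColumnRule]) -> list[str]:
    """Check each record against the schema; an empty result means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        missing, extra = schema.keys() - row.keys(), row.keys() - schema.keys()
        if missing or extra:
            problems.append(f"row {i}: schema mismatch, missing={sorted(missing)}, extra={sorted(extra)}")
            continue
        for col, rule in schema.items():
            value = row[col]
            if value is None:
                if not rule.nullable:
                    problems.append(f"row {i}: {col} is missing")
                continue
            if not isinstance(value, rule.dtype):
                problems.append(f"row {i}: {col} expected {rule.dtype.__name__}, got {type(value).__name__}")
                continue
            # Range checks flag implausible values such as negative ages.
            if rule.min_value is not None and value < rule.min_value:
                problems.append(f"row {i}: {col}={value} below {rule.min_value}")
            if rule.max_value is not None and value > rule.max_value:
                problems.append(f"row {i}: {col}={value} above {rule.max_value}")
    return problems
```

A pipeline would typically stop, or quarantine the batch, when the returned list is non-empty. Note that `isinstance` treats `bool` as a subclass of `int`, so a stricter type check may be needed in practice.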

8. Implement Model Explainability and Interpretability

As models grow more complex, understanding their decisions becomes critical. Use tools like SHAP and LIME to explain predictions, especially in sensitive domains like healthcare and finance. This builds trust among stakeholders and helps meet compliance requirements.
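SHAP is grounded in Shapley values from cooperative game theory: each feature's attribution is its average marginal contribution to the prediction across all orderings in which features could be added. For a model with only a few features these can be computed exactly, which makes the idea concrete. The sketch assumes that a feature "absent" from a coalition takes a fixed baseline value (one of several conventions) and uses a hypothetical `shapley_values` helper; it is not the SHAP library's implementation, which relies on much faster approximations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values of model(x) relative to model(baseline).

    Costs O(2^n) model calls, so it only suits a handful of features.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Features in the coalition keep their real value; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalition sizes 0..n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis
```

For the linear model `2*z0 + 3*z1 - z2` at `x = [1, 2, 3]` with an all-zero baseline, the attributions are `[2, 6, -3]` up to floating-point rounding, and they sum to the prediction minus the baseline prediction (5).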

Security, Privacy, and Compliance in MLOps

Security is a core aspect of MLOps. ML pipelines often handle sensitive data, making it essential to integrate privacy and security practices into every stage.

Key practices include:

- Encrypting data both in transit and at rest

- Using role-based access control (RBAC)

- Maintaining audit logs for model access and predictions

- Anonymizing data to protect personally identifiable information (PII)

- Ensuring compliance with regulations like GDPR, HIPAA, and CCPA

Organizations must treat data governance as an ongoing responsibility, not a one-time task.
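One common building block for protecting PII in training data is pseudonymization: replacing direct identifiers with keyed hashes so records can still be joined without exposing raw values. A minimal sketch using HMAC-SHA256 from the standard library; the field names and key are hypothetical, and a real key belongs in a secrets manager rather than in code. Keep in mind that pseudonymization is weaker than anonymization: under GDPR, pseudonymized data is still personal data.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, 64 hex characters).

    The same value and key always yield the same token, so joins still work,
    but the token cannot be reversed without the key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, pii_fields: set[str], key: bytes) -> dict:
    """Return a copy of `record` with the listed PII fields pseudonymized."""
    return {
        k: pseudonymize(str(v), key) if k in pii_fields and v is not None else v
        for k, v in record.items()
    }
```

Rotating the key breaks linkage with previously issued tokens, which can itself be a useful control when a dataset must be unlinked from older copies.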

Choosing the Right Tools for MLOps

There are numerous tools available for different stages of the MLOps lifecycle. The right toolset depends on your use case, budget, team expertise, and infrastructure. Some popular tools include:

- MLflow, Weights & Biases (experiment tracking)

- Apache Airflow, Kubeflow (workflow orchestration)

- BentoML, TFX (model deployment)

- Prometheus, Grafana, Evidently (monitoring)

- DVC, lakeFS (data versioning)

A mix of open-source and cloud-native solutions often provides the best flexibility.

Real-World Examples of MLOps in Action

Organizations across industries are already using MLOps to drive real impact.

In healthcare, hospitals use MLOps pipelines to continuously update diagnostic models with new patient data, improving detection accuracy.

In e-commerce, recommendation engines retrain on fresh customer data weekly, boosting sales through personalized suggestions.

In finance, fraud detection models are monitored in real time for drift, allowing institutions to react quickly and reduce losses.

These examples highlight the power of MLOps to not only improve model performance but also align it with evolving business needs.

MLOps for Large Language Models and Generative AI

With the rise of large language models (LLMs) and generative AI, MLOps is evolving to support more complex workloads. This new frontier introduces challenges such as:

- Prompt versioning for consistency

- Managing long-running inference tasks

- Monitoring for hallucinations and toxic outputs

- Optimizing GPU usage

Tools such as PromptLayer and LangChain, along with emerging LLMOps frameworks, are being developed to meet these needs. Integrating them into existing MLOps pipelines helps keep generative models scalable, controllable, and reliable.
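Prompt versioning can borrow the content-addressing idea used for data: derive a version from the template text and log that version with every model response, so any output can be traced to the exact prompt that produced it. The in-memory registry below is a hypothetical sketch, not PromptLayer's or LangChain's API; a real one would persist versions to a database or to Git.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    name: str
    template: str
    version: str     # first 8 hex chars of the template's SHA-256
    created_at: str  # ISO 8601 timestamp in UTC


@dataclass
class PromptRegistry:
    """In-memory sketch; a real registry would persist to a database or Git."""
    _history: dict[str, list[PromptVersion]] = field(default_factory=dict)

    def register(self, name: str, template: str) -> PromptVersion:
        version = hashlib.sha256(template.encode("utf-8")).hexdigest()[:8]
        history = self._history.setdefault(name, [])
        if history and history[-1].version == version:
            return history[-1]  # Template unchanged: no new version.
        pv = PromptVersion(name, template, version, datetime.now(timezone.utc).isoformat())
        history.append(pv)
        return pv

    def get(self, name: str, version: Optional[str] = None) -> PromptVersion:
        """Latest version by default, or a specific one for pinning and rollback."""
        history = self._history[name]
        if version is None:
            return history[-1]
        for pv in history:
            if pv.version == version:
                return pv
        raise KeyError(f"{name}@{version}")
```

At call time, the application would fetch a template with `get`, fill it (for example `pv.template.format(text=doc)`), and record `pv.name` and `pv.version` alongside the model's response.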

Common Challenges in MLOps and How to Overcome Them

Despite its benefits, MLOps comes with its own set of challenges:

- High initial setup complexity

- Lack of team alignment and communication

- Tool fragmentation and integration issues

- Resistance to process standardization

The solution is to start small, iterate fast, and focus on delivering value. Adopt a phased approach that aligns with your organization’s maturity and goals.

A Sample MLOps Roadmap for Implementation

Here’s a simplified roadmap to guide your MLOps implementation:

Phase 1: Planning and Tool Selection

- Identify use cases and goals

- Choose tools that align with your infrastructure

Phase 2: Workflow Setup

- Establish pipelines for data ingestion, training, and deployment

Phase 3: Monitoring and Governance

- Set up performance monitoring and logging

- Define compliance and access policies

Phase 4: Continuous Improvement

- Automate retraining loops

- Refine processes based on feedback and metrics

Key Metrics to Track in MLOps

Monitoring the right metrics is essential for success. Focus on:

- Model accuracy, precision, recall, and F1 score

- Time to deployment (from development to production)

- Mean time to detect and resolve drift

- Prediction latency

- Retraining frequency

Tracking these metrics provides actionable insights for model maintenance and improvement.
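Accuracy, precision, recall, and F1 all derive from confusion-matrix counts. For binary labels, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. The function below is a dependency-free sketch (in practice `sklearn.metrics` provides equivalents); it returns 0.0 when a ratio is undefined, which is a common convention.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn  # valid because labels are only 0 or 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

For `y_true = [1, 0, 1, 1, 0, 0]` and `y_pred = [1, 0, 0, 1, 1, 0]` there are 2 true positives, 1 false positive, 1 false negative, and 2 true negatives, so accuracy is 4/6 and precision, recall, and F1 are all 2/3.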

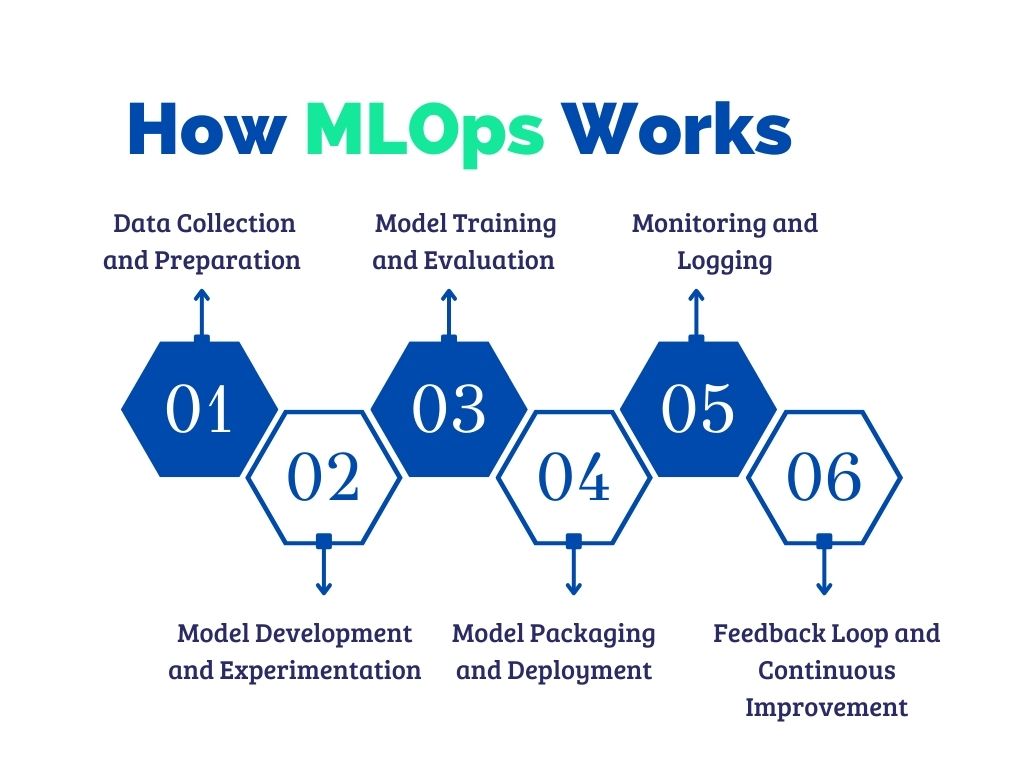

How MLOps Works

Understanding how MLOps works involves examining the full machine learning lifecycle and seeing how MLOps integrates automation, collaboration, and monitoring at each stage. The purpose of MLOps is to establish a repeatable, scalable process that transforms experimental models into reliable, production-ready systems.

Here’s a breakdown of how MLOps typically works across various phases of the ML workflow:

1. Data Collection and Preparation

Every ML project begins with data. In MLOps, this stage is formalized through automated pipelines that:

- Ingest raw data from multiple sources (e.g., databases, APIs, files)

- Perform preprocessing, cleaning, and transformation

- Apply data validation checks to ensure consistency

- Version the datasets for traceability and reproducibility

Tools like Apache Airflow, Azure Data Factory, or custom ETL scripts are often used in this stage. The resulting cleaned and validated data is stored in version-controlled repositories for downstream processes.

2. Model Development and Experimentation

In the development phase, data scientists experiment with different algorithms, feature sets, and hyperparameters to build a predictive model. With MLOps, this phase includes:

- Tracking experiments, parameters, and results using tools like MLflow or Weights & Biases

- Keeping training scripts and notebooks under version control

- Collaborating with peers through shared environments and reproducible setups (e.g., Docker containers)

MLOps ensures that every experiment is traceable, and results can be compared and reproduced accurately.
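Experiment trackers such as MLflow and Weights & Biases record each run's parameters, metrics, and metadata so runs can be compared later. The core idea fits in a few lines: the sketch below appends runs to a JSON Lines file and picks the best one by a chosen metric. The file layout and function names are hypothetical; it illustrates the concept rather than either tool's API.

```python
import json
import time
import uuid
from pathlib import Path
from typing import Optional


def log_run(log_path: Path, params: dict, metrics: dict, tags: Optional[dict] = None) -> str:
    """Append one experiment run to a JSON Lines file and return its run ID."""
    run_id = uuid.uuid4().hex
    record = {
        "run_id": run_id,
        "timestamp": time.time(),
        "params": params,
        "metrics": metrics,
        "tags": tags or {},
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return run_id


def best_run(log_path: Path, metric: str, higher_is_better: bool = True) -> dict:
    """Return the logged run with the best value of `metric` (ValueError if none)."""
    with log_path.open(encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    scored = [r for r in runs if metric in r["metrics"]]
    pick = max if higher_is_better else min
    return pick(scored, key=lambda r: r["metrics"][metric])
```

Tagging each run with the Git commit and dataset version (for example `tags={"git_sha": sha, "data_version": version}`) is what makes a result traceable back to its inputs.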

3. Model Training and Evaluation

After experimentation, selected models are trained on full datasets. This step often involves:

- Automated training scripts triggered by CI pipelines

- Use of GPU/TPU environments for accelerated training

- Logging training metrics such as loss, accuracy, precision, recall

- Evaluating the model using validation datasets and performance thresholds

MLOps pipelines often validate models against predefined metrics and automatically decide whether the model qualifies for deployment.
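This automated decision is often implemented as a quality gate: the candidate must clear absolute metric floors and, if a model is already in production, beat it by some margin on a primary metric. A minimal sketch, assuming higher-is-better metrics; the primary metric, floors, and margin are hypothetical, and real pipelines may also compare latency, fairness, or per-segment metrics.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GateResult:
    passed: bool
    reasons: list[str] = field(default_factory=list)


def deployment_gate(candidate: dict[str, float],
                    floors: dict[str, float],
                    production: Optional[dict[str, float]] = None,
                    primary: str = "f1",
                    min_improvement: float = 0.0) -> GateResult:
    """Decide whether a candidate model may be deployed (higher metric = better)."""
    reasons = []
    # 1. Absolute floors: every listed metric must be reported and high enough.
    for metric, floor in floors.items():
        value = candidate.get(metric)
        if value is None:
            reasons.append(f"{metric} was not reported")
        elif value < floor:
            reasons.append(f"{metric}={value} is below the floor {floor}")
    # 2. Champion/challenger: beat the production model on the primary metric.
    if production is not None:
        value = candidate.get(primary)
        target = production[primary] + min_improvement
        if value is None or value < target:
            reasons.append(f"{primary}={value} does not beat production {production[primary]} by {min_improvement}")
    return GateResult(passed=not reasons, reasons=reasons)
```

Returning the reasons, not just a boolean, gives the CI log a readable explanation of why a model was held back.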

4. Model Packaging and Deployment

Once validated, models are packaged into deployable artifacts (e.g., containers or serialized model files). The deployment process involves:

- Registering the model in a model registry (e.g., MLflow Model Registry, Amazon SageMaker Model Registry)

- Deploying to a production environment (cloud, on-prem, or edge)

- Exposing the model through REST APIs or batch inference services

- Automating this step using CI/CD pipelines with tools like Jenkins, GitHub Actions, or Kubeflow

Infrastructure-as-code and containerization make deployments repeatable, scalable, and easier to secure.
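Managed registries such as the MLflow Model Registry and SageMaker's model registry track model versions and which one is live. A toy in-memory version shows the core rules: versions auto-increment, at most one version serves production, and rolling back means re-promoting an earlier version. The stage names, class names, and `s3://` URIs in the test scenario are hypothetical, not any product's API.

```python
from dataclasses import dataclass, field
from typing import Optional

STAGES = ("registered", "staging", "production", "archived")


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # where the packaged model lives, e.g. an image or serialized file
    stage: str = "registered"


@dataclass
class ModelRegistry:
    """Toy in-memory registry: versioning plus stage promotion and rollback."""
    _models: dict[str, list[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        versions = self._models.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, artifact_uri)  # versions start at 1
        versions.append(mv)
        return mv

    def promote(self, name: str, version: int, stage: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage {stage!r}")
        versions = self._models[name]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        if stage == "production":
            # Keep a single live version: archive whichever one currently serves.
            for mv in versions:
                if mv.stage == "production":
                    mv.stage = "archived"
        versions[version - 1].stage = stage

    def production(self, name: str) -> Optional[ModelVersion]:
        """The version currently serving traffic, if any."""
        return next((mv for mv in self._models.get(name, []) if mv.stage == "production"), None)
```

Because rollback is just another promotion, the same CI/CD job that deploys a new version can restore the previous one.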

5. Monitoring and Logging

Post-deployment, MLOps emphasizes ongoing monitoring to ensure the model continues to perform as expected. Monitoring includes:

- Tracking input data and output predictions

- Measuring latency, throughput, and resource utilization

- Detecting data and concept drift

- Logging predictions and user feedback

Monitoring tools like Prometheus, Grafana, or commercial solutions like Fiddler and WhyLabs help automate this process and send alerts if anomalies are detected.
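Latency is usually tracked as percentiles rather than averages, because a small share of slow requests can hurt users while barely moving the mean. Prometheus and Grafana typically derive these from histogram metrics; the sketch below instead computes them directly from raw samples with Python's `statistics.quantiles` and returns an alert when p95 exceeds a budget. The budget values and function names are hypothetical, and `statistics.quantiles` needs at least two samples on most Python versions.

```python
from statistics import quantiles


def latency_report(latencies_ms: list[float], window_seconds: float) -> dict[str, float]:
    """Percentile latencies and throughput for requests observed in one window."""
    cuts = quantiles(latencies_ms, n=100, method="inclusive")  # 99 cut points
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
        "throughput_rps": len(latencies_ms) / window_seconds,
    }


def check_latency(report: dict[str, float], p95_budget_ms: float) -> list[str]:
    """Alert messages if p95 latency exceeds its budget (empty list means healthy)."""
    if report["p95_ms"] > p95_budget_ms:
        return [f"p95 latency {report['p95_ms']:.1f} ms exceeds the {p95_budget_ms} ms budget"]
    return []
```

In production, the alert list would be routed to whatever paging or chat integration the team already uses.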

6. Feedback Loop and Continuous Improvement

MLOps closes the loop by using monitoring insights to trigger model retraining or rollback. This feedback loop is essential for:

- Updating models with fresh data

- Correcting model drift

- Incorporating user feedback or new business rules

- Ensuring continuous learning and adaptation

Some systems even integrate automated retraining pipelines that re-trigger the training step upon detecting drift or performance degradation.
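The retraining trigger can be sketched as a small policy function that combines monitoring signals: a drift score above a threshold, or a live metric that has fallen too far below its baseline, with a cooldown so a noisy signal cannot launch retraining over and over. All thresholds here are hypothetical defaults to tune per use case, and launching the retraining job itself is left to your orchestrator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RetrainPolicy:
    drift_threshold: float = 0.25        # e.g. a PSI value treated as a significant shift
    max_metric_drop: float = 0.05        # tolerated absolute drop vs. the baseline metric
    cooldown_seconds: float = 24 * 3600  # minimum gap between automatic retrains


def should_retrain(policy: RetrainPolicy, drift_score: float, baseline_metric: float,
                   live_metric: Optional[float], now: float,
                   last_retrain: Optional[float]) -> tuple[bool, str]:
    """Turn monitoring signals into a retrain decision plus a human-readable reason."""
    if last_retrain is not None and now - last_retrain < policy.cooldown_seconds:
        return False, "in cooldown"
    if drift_score > policy.drift_threshold:
        return True, f"drift score {drift_score:.2f} above {policy.drift_threshold}"
    # Ground-truth labels often arrive late, so the live metric may not exist yet.
    if live_metric is not None and baseline_metric - live_metric > policy.max_metric_drop:
        return True, f"metric fell from {baseline_metric:.2f} to {live_metric:.2f}"
    return False, "no trigger"
```

Logging the returned reason with each decision keeps an audit trail of why a model was retrained, which also supports the governance practices described earlier.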

The Future of MLOps

The future of MLOps will see increased automation, simplified tooling, and greater adoption across industries. Trends to watch include:

- AutoMLOps platforms that build pipelines with minimal code

- Serverless MLOps for rapid scaling

- Edge MLOps for deploying models on mobile and IoT devices

- Integrated support for LLMs and generative AI

As MLOps evolves, it will empower more organizations to unlock the full potential of machine learning at scale.

Conclusion

MLOps is no longer optional; it’s essential. By following best practices such as standardizing workflows, versioning everything, automating pipelines, and monitoring models, organizations can build scalable and reliable AI systems that deliver long-term value.

Whether you’re just getting started or looking to optimize your existing ML infrastructure, embracing MLOps will put you on the path to sustainable success in your AI journey.

If you’d like help designing or implementing your MLOps strategy, feel free to reach out. Our team specializes in building end-to-end ML pipelines tailored to your business goals.