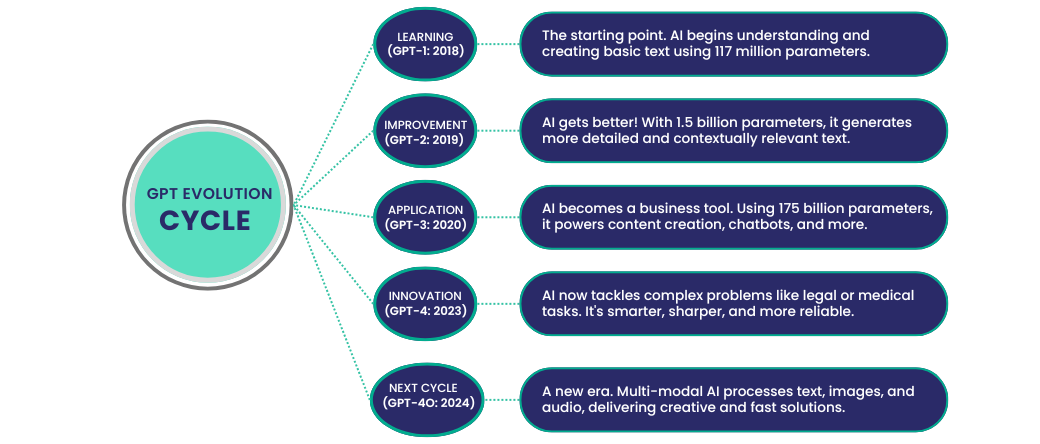

Large Language Models (LLMs) have opened up entirely new ways for humans to interact with technology, turning ideas once found only in science fiction into everyday tools. When OpenAI released its first Generative Pre-trained Transformer (GPT-1) in 2018, it set off a rapid run of progress that continues to this day and that reached the general public through ChatGPT in late 2022.

From GPT-1 to GPT-4, each generation has grown in scale, addressed some of its predecessor's limitations, and improved at understanding and generating natural language. Together, these advances point toward AI that is not only more powerful but also more intuitive and accessible in our daily lives.

What Are Large Language Models (LLMs)?

LLMs are AI models designed to understand and generate human-like text. Built on deep neural networks (most commonly the transformer architecture) and trained on massive amounts of text, models such as OpenAI's GPT series learn to:

- Identify patterns in language

- Generate coherent text based on context

- Adapt to various tasks with minimal input

Modern LLMs have billions of parameters and are trained on large, diverse text datasets, which lets them handle a wide variety of applications, from answering questions to drafting engaging content. Successive generations have delivered steady performance improvements, largely by scaling up model size, training data, and compute.

GPT-1: The First Step

GPT-1, released by OpenAI in 2018, laid the foundation for everything that followed. With about 117 million parameters, it used the decoder portion of the transformer architecture introduced by Google researchers in 2017. It was first pre-trained without labels on a large corpus of books, learning to predict the next token in a sequence from the patterns it had seen, and was then fine-tuned with supervision for specific tasks.
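To make "transformer" a little more concrete, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation of the architecture, with the causal mask used by decoder-only models like GPT. It is a simplification under stated assumptions: real models learn separate projection matrices that turn each token's embedding into query, key, and value vectors, run many attention heads in parallel, and stack many layers. Here the toy token vectors are used directly as queries, keys, and values, and the names `softmax`, `causal_attention`, and `tokens` are illustrative only.

```python
import math

def softmax(scores):
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    Each argument is a list of vectors (lists of floats), one per token.
    Simplification (assumption): no learned projections, a single head.
    Token i may only attend to tokens 0..i, so its output never depends on
    later tokens, which is what lets a GPT-style model predict what comes next.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        visible = range(i + 1)  # causal mask: positions 0..i only
        # Similarity of this token's query with each visible key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, keys[t])) / math.sqrt(d_k) for t in visible]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out = [sum(w * values[t][c] for w, t in zip(weights, visible))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d token vectors
for row in causal_attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Because of the mask, the first token can only attend to itself, so its output is simply its own value vector; later tokens blend information from everything before them.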

Capabilities

- Basic text generation that continues a given prompt

- Ability to answer straightforward questions after task-specific fine-tuning

- Simple sentence generation based on context

Limitations

- Limited handling of nuanced queries

- Frequent irrelevant or repetitive responses

- Trouble maintaining coherence over longer passages

Despite these limitations, GPT-1 demonstrated the promise of generative pre-training, revealing both its early strengths and clear areas for growth.

GPT-2: Scaling Up

GPT-2, released in 2019, was a major leap forward, thanks to a more than tenfold increase in parameters and a larger, more diverse dataset of web text. With 1.5 billion parameters, it showed stronger contextual understanding and produced higher-quality text, a significant milestone in the progress of large language models.

Capabilities

- Enhanced skill in completing paragraphs and crafting longer pieces

- Improved grammar and vocabulary

- Better comprehension of context

Limitations

- Tendency to generate misleading or biased information

- Lack of deep understanding, often leading to confident yet incorrect answers

- No built-in safeguards to block inappropriate outputs

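While GPT-2 showcased notable advances in generative language modeling, it also highlighted the need for ethical deployment and stronger safety mechanisms. OpenAI initially withheld the full 1.5-billion-parameter model over misuse concerns, releasing it in stages during 2019.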

GPT-3: The Game Changer

GPT-3, released in 2020, marked a true paradigm shift in language model development, with 175 billion parameters. Its scale made few-shot and zero-shot learning practical: given only a task description, or a handful of examples placed directly in the prompt, it could handle tasks it had not been explicitly trained on, without any update to its weights.
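The difference between zero-shot and few-shot use is entirely in the prompt text the model receives. The sketch below builds both kinds of prompt; the `build_prompt` helper and the "input => output" layout are illustrative assumptions, not a format GPT-3 or any API requires.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a plain-text prompt for in-context learning.

    With no examples this is a zero-shot prompt; with a few (input, output)
    pairs it becomes a few-shot prompt. The "input => output" layout is an
    illustrative assumption, not a format any model requires.
    """
    lines = [instruction]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # Leave the final answer blank: the model is expected to continue the text here.
    lines.append(f"{query} =>")
    return "\n".join(lines)

zero_shot = build_prompt("Translate English to French:", "cheese")
few_shot = build_prompt(
    "Translate English to French:",
    "cheese",
    examples=[("sea otter", "loutre de mer"), ("cat", "chat")],
)
print(zero_shot)
print("---")
print(few_shot)
```

In both cases the model's weights stay fixed; the worked examples in the few-shot prompt simply give it more context about the pattern to continue.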

Capabilities

- Excels in diverse tasks, from coding to creative writing

- Generates text that often appears near-human

- Adapts well to specific prompts and instructions

Limitations

- Computational demands make it expensive to deploy

- Prone to long-winded or overly generic responses

- Faces ethical challenges around bias and misinformation
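A quick back-of-the-envelope calculation shows why deployment is expensive. The sketch assumes each parameter is stored in 16 bits (2 bytes), a common choice for inference, and counts only the model weights; activations, the attention cache, and any training-time optimizer state would add more. The `weight_memory_gb` helper is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory in decimal gigabytes needed just to hold the weights.

    bytes_per_param=2 assumes 16-bit (fp16/bf16) storage; use 4 for 32-bit floats.
    Activations, the attention KV cache, and optimizer state are ignored.
    """
    return num_params * bytes_per_param / 1e9

for name, params in [("GPT-2", 1.5e9), ("GPT-3", 175e9)]:
    print(f"{name}: {weight_memory_gb(params):.0f} GB in 16-bit, "
          f"{weight_memory_gb(params, 4):.0f} GB in 32-bit")
```

Under these assumptions GPT-3's weights alone need about 350 GB, several times the memory of a single 80 GB data-center GPU, so serving the model means splitting it across multiple accelerators.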

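GPT-3's groundbreaking performance established OpenAI as a leader in generative AI, while underscoring the ongoing need for better fine-tuning and robust safety measures. Those needs shaped what came next: a GPT-3.5 model fine-tuned with human feedback became the basis of ChatGPT, launched in November 2022.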

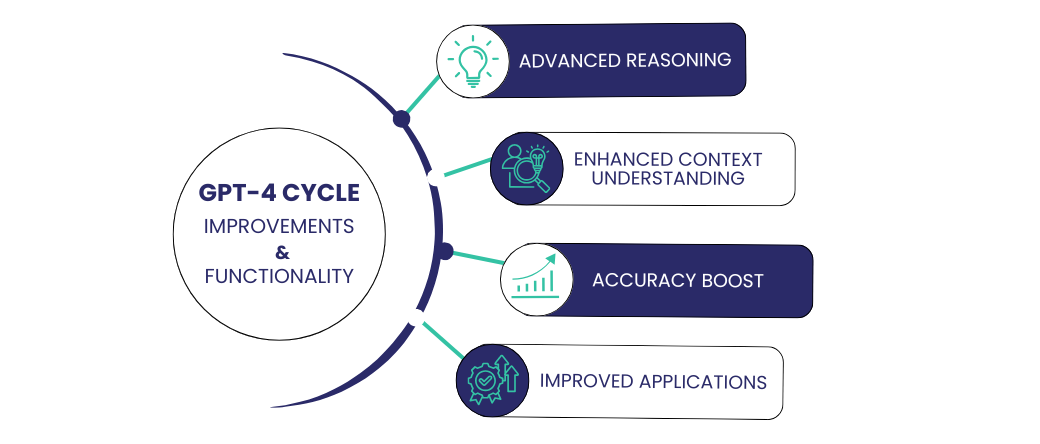

GPT-4: The Pinnacle of Progress

GPT-4, released in March 2023, is the culmination of lessons learned from its predecessors. OpenAI has not disclosed its architecture, parameter count, or training data, but the model emphasizes safety, reliability, and adaptability. By making effective use of Reinforcement Learning from Human Feedback (RLHF), it achieves notable improvements in both accuracy and relevance over earlier models.
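OpenAI has not published GPT-4's full training recipe, but RLHF as generally described (for example, in OpenAI's InstructGPT work) has three stages: supervised fine-tuning on human-written demonstrations, training a reward model on human rankings of model outputs, and then optimizing the language model against that reward model with reinforcement learning. The sketch below covers only the middle stage: the pairwise preference loss a reward model is commonly trained with. The `preference_loss` name is hypothetical.

```python
import math

def preference_loss(r_chosen, r_rejected):
    """Pairwise loss for training a reward model from human comparisons.

    r_chosen and r_rejected are the scalar scores the reward model gives to the
    response a labeler preferred and to the one they rejected. The loss is
    -log(sigmoid(r_chosen - r_rejected)): small when the preferred response
    scores clearly higher, large when the ranking is reversed.
    """
    margin = r_chosen - r_rejected
    # Numerically stable form of -log(sigmoid(margin)) == log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

print(round(preference_loss(2.0, -1.0), 4))  # preferred answer ranked higher: low loss
print(round(preference_loss(-1.0, 2.0), 4))  # ranking reversed: high loss
```

Minimizing this loss over many human comparisons teaches the reward model to score responses the way labelers would; the reinforcement learning stage then pushes the language model toward responses that score well.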

Capabilities

- Multimodal input: it accepts both text and images and responds in text

- Advanced reasoning and enhanced contextual comprehension

- Customizable tone and style for a variety of applications

- Stronger safeguards to minimize harmful or biased outputs

Limitations

- High resource requirements for training and inference

- Occasional gaps in reasoning, especially for highly specialized topics

- Reliance on training data, which can still introduce subtle biases

At its release, GPT-4 set new results on many academic and professional benchmarks (OpenAI reported, for example, a score around the top 10% of test takers on a simulated bar exam), opening up use cases across education, healthcare, business, and more.

Applications Across Industries

The evolution of LLMs has driven groundbreaking applications across various industries:

- Healthcare: Assisting in patient diagnostics, generating medical reports, and providing mental health support.

- Education: Creating personalized learning plans, tutoring, and content generation for courses.

- Business: Streamlining customer support, generating marketing content, and analyzing large datasets.

- Creative Industries: Writing scripts, creating art, and brainstorming ideas.

These applications illustrate how large language models transform workflows and drive innovation, paving the way for natural language processing to flourish across multiple domains.

Ethical Considerations in LLM Development

The remarkable capabilities of LLMs also bring ethical challenges:

- Bias: Training data can introduce and perpetuate biases.

- Misinformation: LLMs can generate confident but incorrect or misleading information.

- Privacy: Handling sensitive data raises concerns about security and confidentiality.

Addressing these challenges requires vigilant monitoring, robust guardrails, and transparent practices to ensure responsible AI use.

How LLMs Work: A Simplified Overview

Most LLMs use transformer architectures, which excel at handling sequential data like text. These models predict the next token (a word or word fragment) in a sequence based on the context of everything that precedes it, and they generate text by repeating that prediction one token at a time. As they scale, they rely on vast datasets and computational power to refine their abilities to:

- Understand context.

- Generate coherent and relevant responses.

- Learn new tasks with minimal examples.
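The predict-append-repeat loop can be illustrated with a deliberately tiny model. The sketch below is not a transformer: it is a bigram model that uses only the single previous word as context, whereas an LLM conditions on the entire preceding context (up to its context window) using a neural network over subword tokens. The function names and the toy corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def next_word_probs(model, word):
    """Convert follow-counts into a probability distribution over the next word."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def generate(model, start, length):
    """Greedy decoding: repeatedly append the single most likely next word."""
    out = [start]
    for _ in range(length):
        counts = model.get(out[-1])
        if not counts:
            break  # no continuation was ever observed for this word
        # most_common breaks ties by first occurrence in the training text.
        out.append(counts.most_common(1)[0][0])
    return " ".join(out)

model = train_bigram("the cat sat on the mat the cat ate the fish")
print(next_word_probs(model, "the"))
print(generate(model, "the", 4))
```

Real systems usually sample from the predicted distribution (often adjusted by a temperature setting) rather than always taking the top choice, which is why the same prompt can yield different responses.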

Each GPT generation has introduced enhancements that expand the boundaries of what LLMs can achieve.

Future of Large Language Models

The evolution of large language models is far from over. Future advancements will likely focus on:

- Energy Efficiency: Reducing the environmental impact of machine learning models.

- Explainability: Making AI decisions more transparent and interpretable.

- Personalization: Tailoring models to individual needs without compromising privacy.

As these developments unfold, LLMs will likely continue to redefine how humans interact with technology, creating opportunities for innovation across sectors.

Conclusion: A Future of Possibilities

The journey from GPT-1 to GPT-4 shows remarkable growth in AI-driven language models: GPT-3 alone had roughly 1,500 times as many parameters as GPT-1. However, with great power comes great responsibility. Developers and users must navigate the challenges of bias, resource consumption, and ethical deployment.

As we look ahead, the evolution of language models promises even more transformative applications. Whether you’re leveraging these technologies for personal use, business innovation, or research, understanding their capabilities and limitations is key to unlocking their full potential.

Ready to explore how GPT-4 can revolutionize your workflows or bring your ideas to life? Let’s discover the possibilities together!

FAQs

1. What is a Large Language Model (LLM)?

An LLM is an AI system trained on massive datasets to understand and generate human-like text. It typically uses a transformer architecture to predict the next token based on context, enabling diverse applications like content generation, customer support, and coding assistance.

2. How does GPT-4 differ from GPT-3?

GPT-4 is multimodal: it accepts both text and image inputs, whereas GPT-3 works with text only. It also has stronger safety measures, improved reasoning, and more refined adaptability than GPT-3.

3. What are the limitations of GPT-4?

Despite its advancements, GPT-4 requires high computational resources, can occasionally reason incorrectly about niche topics, and remains dependent on its training data, which can introduce subtle biases into its outputs.

4. How can LLMs be used ethically?

Ethical use of LLMs involves addressing bias, preventing misuse, protecting privacy, and ensuring transparency in applications. Regular monitoring and robust safety measures are crucial to keeping deployed systems trustworthy.

5. What industries benefit most from LLMs?

Industries like healthcare, education, business, and the creative sectors benefit greatly, using LLMs for tasks such as diagnostic support, personalized learning, content creation, and data analysis.