As artificial intelligence (AI) continues to evolve, the way machine learning (ML) models are trained has also advanced significantly. Two approaches that spread model training across many machines are Distributed Machine Learning (DML) and Federated Learning (FL).

Both methods aim to train ML models across multiple devices or nodes, but they differ in their data distribution strategies, privacy considerations, and computational approaches. Understanding their differences is crucial for businesses, researchers, and AI engineers who need to decide the best method for scalable, secure, and efficient AI training.

This blog explores how Distributed Machine Learning and Federated Learning work, their key differences, advantages, challenges, and use cases to help you determine which approach is best suited for your AI needs.

What is Distributed Machine Learning?

Definition and Core Concepts

Distributed Machine Learning (DML) refers to a method where large-scale ML models are trained across multiple machines, clusters, or cloud environments. The goal is to speed up model training and handle large datasets efficiently by distributing computational workloads across multiple processing units.

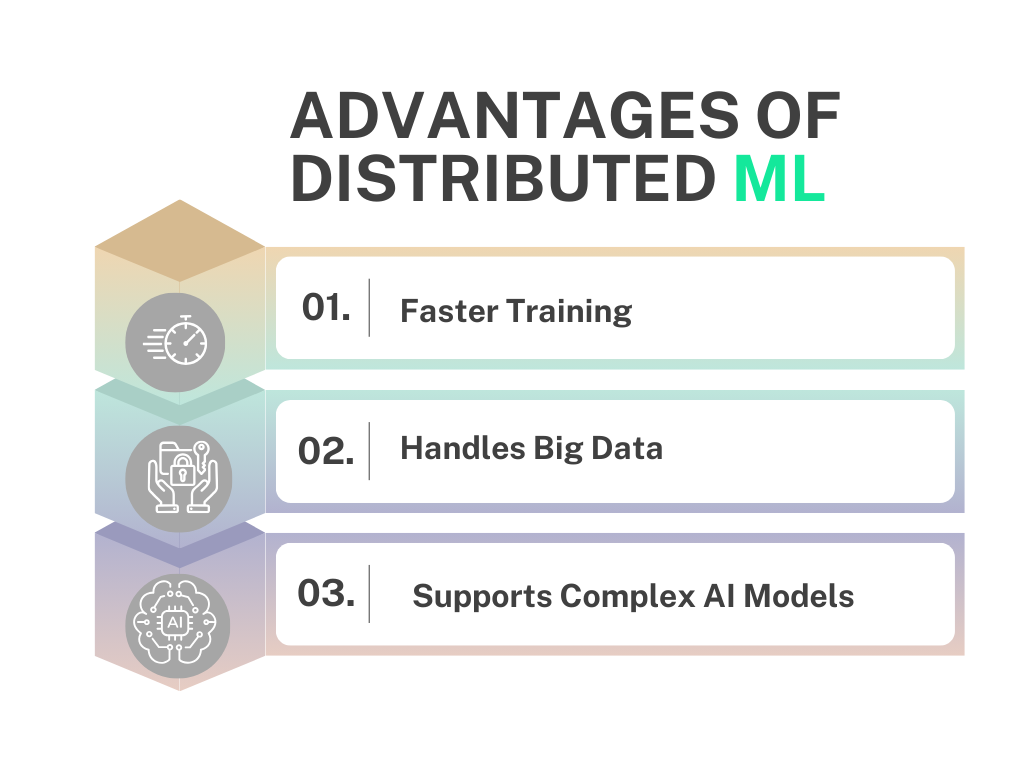

Advantages of Distributed ML

- Faster Training: Distributed computing allows large ML models to be trained efficiently by splitting workloads.

- Handles Big Data: Ideal for AI applications that require processing massive datasets.

- Supports Complex AI Models: Can train deep learning models that require extensive computational power.

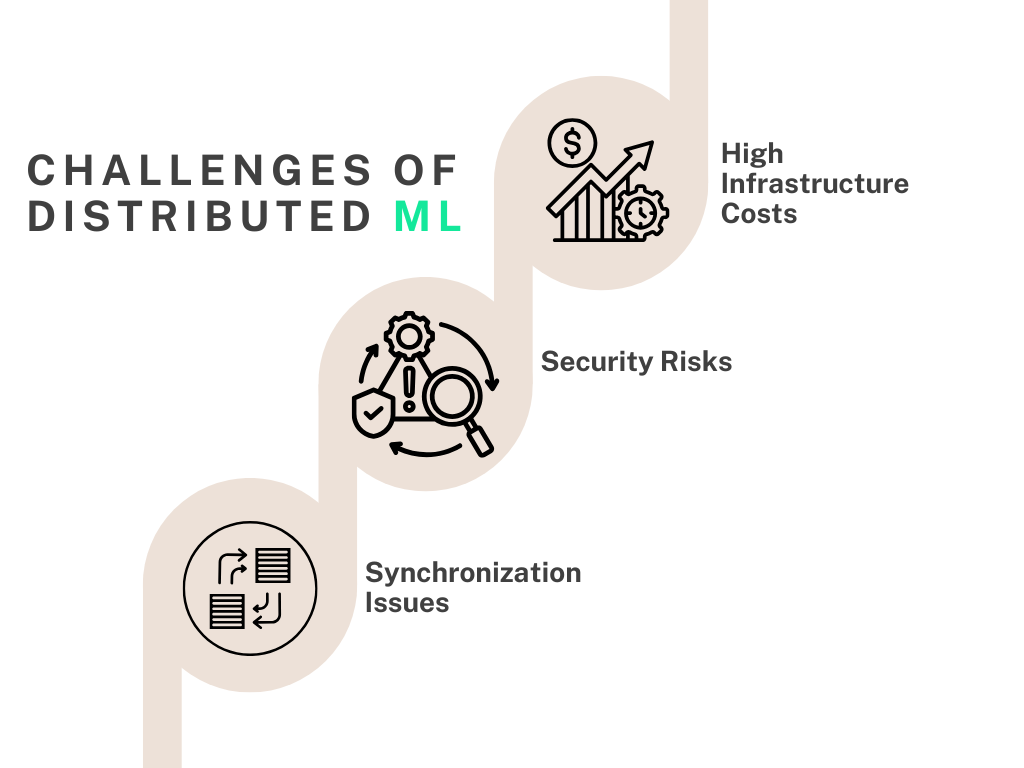

Challenges of Distributed ML

- High Infrastructure Costs: Requires powerful servers, GPUs, and network bandwidth.

- Security Risks: Data is often stored centrally, increasing the risk of breaches.

- Synchronization Issues: Model updates across distributed nodes must be carefully managed to avoid inconsistencies.

What is Federated Learning?

Definition and Key Principles

Federated Learning (FL) is a decentralized machine learning approach where models are trained where the data lives, typically on edge devices or inside separate organizations such as hospitals, without sharing raw data. This technique is designed to enhance privacy, reduce latency, and minimize data transfer requirements.

Benefits of Federated Learning

- Privacy-Preserving: Since raw data remains on user devices, FL reduces the exposure of sensitive data, although the shared model updates can still reveal some information.

- Reduces Bandwidth Usage: Only model updates (not the full dataset) are shared, reducing communication overhead.

- Improves Personalization: Enables AI applications to learn from user behavior while keeping data private.
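The bandwidth benefit above depends on how large one model update is compared with the data it replaces, and on how many rounds of training are run. The arithmetic below is a rough sketch under stated assumptions: dense float32 parameters (4 bytes each), no compression, and a hypothetical device holding 500 MB of local data with a 1-million-parameter model. The function names are illustrative only.

```python
def update_size_bytes(num_parameters, bytes_per_parameter=4):
    """Size of one model update, assuming dense float32 parameters."""
    return num_parameters * bytes_per_parameter


def breakeven_rounds(raw_data_bytes, num_parameters, bytes_per_parameter=4):
    """Number of upload rounds whose combined size equals the raw data size."""
    return raw_data_bytes // update_size_bytes(num_parameters, bytes_per_parameter)


# Hypothetical figures, chosen only to make the arithmetic concrete.
local_data_bytes = 500 * 10**6   # 500 MB of on-device data
model_parameters = 1_000_000     # 1M-parameter model

per_round = update_size_bytes(model_parameters)
rounds = breakeven_rounds(local_data_bytes, model_parameters)
print(per_round, "bytes uploaded per round")
print(rounds, "rounds before uploads match the raw data size")
```

Under these assumptions a device could take part in many rounds before its uploads add up to the size of its raw data. With a much larger model or a much smaller local dataset, the saving shrinks or disappears.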

Limitations of Federated Learning

- Limited Computational Power: Edge devices may lack the necessary processing power for complex ML models.

- Network Connectivity Issues: Requires stable communication for effective model updates.

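- Potential for Biased Models: Each device only sees its own user's data, so local datasets are often small, incomplete, and unevenly distributed across devices. If the participating devices are not representative, the global model can end up biased.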

Key Differences Between Distributed ML and Federated Learning

| Factor | Distributed Machine Learning | Federated Learning |
| --- | --- | --- |
| Data Storage | Centralized (stored in cloud or servers) | Decentralized (data remains on devices) |
| Privacy Considerations | Higher risk, data must be secured centrally | More privacy-friendly, raw data never leaves the device |
| Training Method | Data is shared across nodes for model training | Model is trained on local devices, only updates are shared |
| Computational Efficiency | Requires high-performance infrastructure | Leverages edge devices but may be computationally limited |
| Use Cases | Large-scale AI models, big data processing | Privacy-sensitive applications (healthcare, finance, IoT) |

Use Cases and Real-World Applications

Both Distributed ML and Federated Learning have unique use cases based on their architecture and benefits.

Distributed ML in Large-Scale AI Models

- Autonomous Vehicles: Training deep learning models on traffic data across multiple cloud servers.

- Financial Forecasting: Processing and analyzing real-time transaction data to detect fraud.

- Healthcare AI: Developing diagnostic models using large hospital datasets.

Federated Learning in Privacy-Centric Industries

- Smartphones & IoT: AI-powered voice assistants like Google Assistant and Siri use FL to learn from user interactions without compromising privacy.

- Healthcare & Medical Research: Hospitals can train ML models on patient records without sharing sensitive data.

- Personalized Recommendations: Apps like Gboard use FL to improve suggestions without transferring raw data, and the same idea can be applied to other recommendation features.

How Companies are Leveraging Both Approaches

- Google employs Federated Learning in Android devices for privacy-first AI.

- OpenAI and Microsoft use Distributed ML for large-scale AI model training.

- Facebook integrates both approaches for real-time recommendation algorithms.

Challenges and Future Trends

Security and Privacy Risks in Federated Learning

- Data Leakage through Model Updates: While raw data isn’t shared, an attacker who can observe the model updates could still infer information about the training data from the gradients.

- Potential for Poisoning Attacks: Malicious users could manipulate local model updates, affecting the global model.
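Both risks above can be shown on a toy scale. The sketch below is illustrative only and makes strong simplifying assumptions: a linear model with squared-error loss, an update computed from a single private example, and a server that sees each update in the clear. The coordinate-wise median is included as one commonly discussed mitigation for poisoning. It is not something the approaches described here are stated to use, and all function names are hypothetical.

```python
from statistics import median


def gradient_single_example(w, b, x, y):
    """Squared-error gradient of a linear model on ONE private example."""
    err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [2 * err * xi for xi in x]
    grad_b = 2 * err
    return grad_w, grad_b


def reconstruct_input(grad_w, grad_b):
    """Leakage: grad_w / grad_b equals the private input x whenever err != 0."""
    return [g / grad_b for g in grad_w]


def mean_update(updates):
    """Plain averaging: a single extreme update shifts the result."""
    return [sum(col) / len(col) for col in zip(*updates)]


def median_update(updates):
    """Coordinate-wise median: less sensitive to a few extreme updates."""
    return [median(col) for col in zip(*updates)]


# Leakage: the server recovers the private input from the shared gradient.
private_x = [0.2, -1.5, 3.0]
grad_w, grad_b = gradient_single_example([0.1, 0.1, 0.1], 0.0, private_x, 1.0)
leaked = reconstruct_input(grad_w, grad_b)
print("reconstructed input:", leaked)

# Poisoning: one malicious client submits an extreme update.
honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21], [0.11, -0.19]]
poisoned = honest + [[50.0, 50.0]]
print("mean with attacker:  ", mean_update(poisoned))
print("median with attacker:", median_update(poisoned))
```

Real models and batched updates make reconstruction harder than in this one-example case, but the sketch shows why sharing updates is not the same as sharing nothing.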

Infrastructure and Cost Challenges in Distributed ML

- Expensive Hardware & Cloud Costs: Running distributed ML at scale requires high-performance GPUs and cloud resources.

- Synchronization Delays: Keeping all nodes in sync during model training can lead to bottlenecks.
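The synchronization bottleneck can be made concrete with a small calculation. The sketch below assumes fully synchronous training, where every node must finish its step before the model is updated, and it ignores communication time. The per-node timings are hypothetical.

```python
def synchronous_step_time(node_times):
    """All nodes wait at the barrier, so the slowest node sets the pace."""
    return max(node_times)


def utilization(node_times):
    """Fraction of total node-time spent computing rather than waiting."""
    step = synchronous_step_time(node_times)
    return sum(node_times) / (step * len(node_times))


balanced = [1.0, 1.0, 1.0, 1.0]        # hypothetical seconds of compute per step
with_straggler = [1.0, 1.0, 1.0, 2.5]  # one slow node

print(synchronous_step_time(balanced), utilization(balanced))
print(synchronous_step_time(with_straggler), utilization(with_straggler))
```

In this example a single slow node stretches every step from 1.0 to 2.5 seconds and leaves the other three nodes idle for most of it.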

Future of AI Training: Hybrid Models and Innovations

- AI frameworks that combine Federated Learning with Distributed ML will help businesses balance privacy and computational efficiency.

- Edge AI will continue to evolve, making Federated Learning more feasible for real-time applications.

- Decentralized AI networks will emerge, reducing reliance on cloud-based ML models.

How Distributed Machine Learning and Federated Learning Work

Both Distributed Machine Learning (DML) and Federated Learning (FL) are designed to train machine learning models across multiple devices or servers. However, they operate differently based on how they handle data, model updates, and computation.

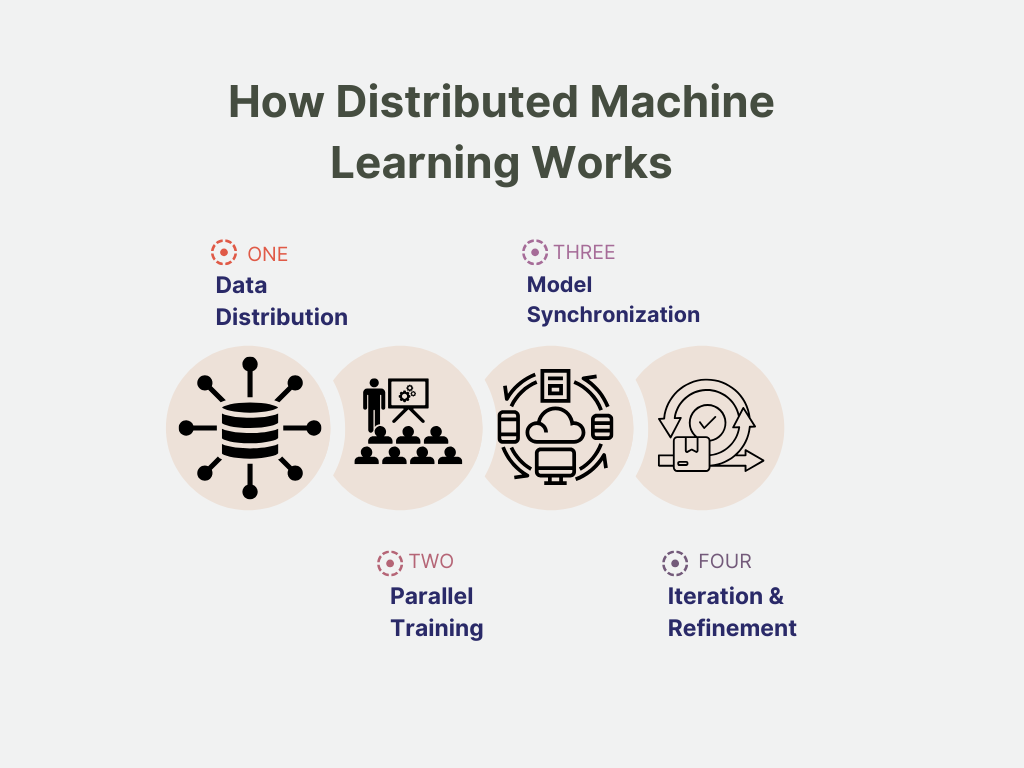

How Distributed Machine Learning Works

Distributed ML splits data and model training tasks across multiple machines to speed up computation and enable large-scale AI model development. Here’s how it works:

- Data Distribution: The dataset is divided into multiple parts and stored across different machines or cloud servers.

- Parallel Training: Each machine (node) processes its share of the data and trains a local version of the model.

- Model Synchronization: Gradients or parameters from the different nodes are periodically combined (for example, averaged) into a single shared model.

- Iteration & Refinement: The central server updates the model, and the process repeats until the model reaches the desired accuracy.

Example: AI models for fraud detection in banking analyze transaction data from multiple servers, ensuring a high-performing model without overwhelming a single system.
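The four steps above can be sketched as a single-process simulation of data-parallel training. This is a minimal illustration, not a production setup: the "nodes" are plain Python lists, the model is a one-variable linear regression, and synchronization is a size-weighted average of the gradients from each node. The function names and the synthetic dataset are assumptions made for the example.

```python
def shard(data, num_nodes):
    """Data distribution: split the dataset round-robin across nodes."""
    return [data[i::num_nodes] for i in range(num_nodes)]


def local_gradient(w, b, batch):
    """Parallel training: mean-squared-error gradient on one node's shard."""
    n = len(batch)
    gw = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    gb = sum(2 * (w * x + b - y) for x, y in batch) / n
    return gw, gb, n


def train_data_parallel(data, num_nodes=4, steps=500, lr=0.05):
    shards = shard(data, num_nodes)
    total = len(data)
    w, b = 0.0, 0.0
    for _ in range(steps):
        # In a real cluster each call would run on its own machine.
        grads = [local_gradient(w, b, s) for s in shards]
        # Model synchronization: average gradients, weighted by shard size.
        gw = sum(g * n for g, _, n in grads) / total
        gb = sum(g * n for _, g, n in grads) / total
        # Iteration and refinement: update the shared model and repeat.
        w -= lr * gw
        b -= lr * gb
    return w, b


# Synthetic data following y = 3x + 1 for x in [-2, 2].
data = [(k / 10, 3.0 * (k / 10) + 1.0) for k in range(-20, 21)]
w, b = train_data_parallel(data)
print(w, b)
```

Because the shard gradients are weighted by shard size, their average equals the gradient over the full dataset, so the result matches single-machine training while each node touches only its own shard.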

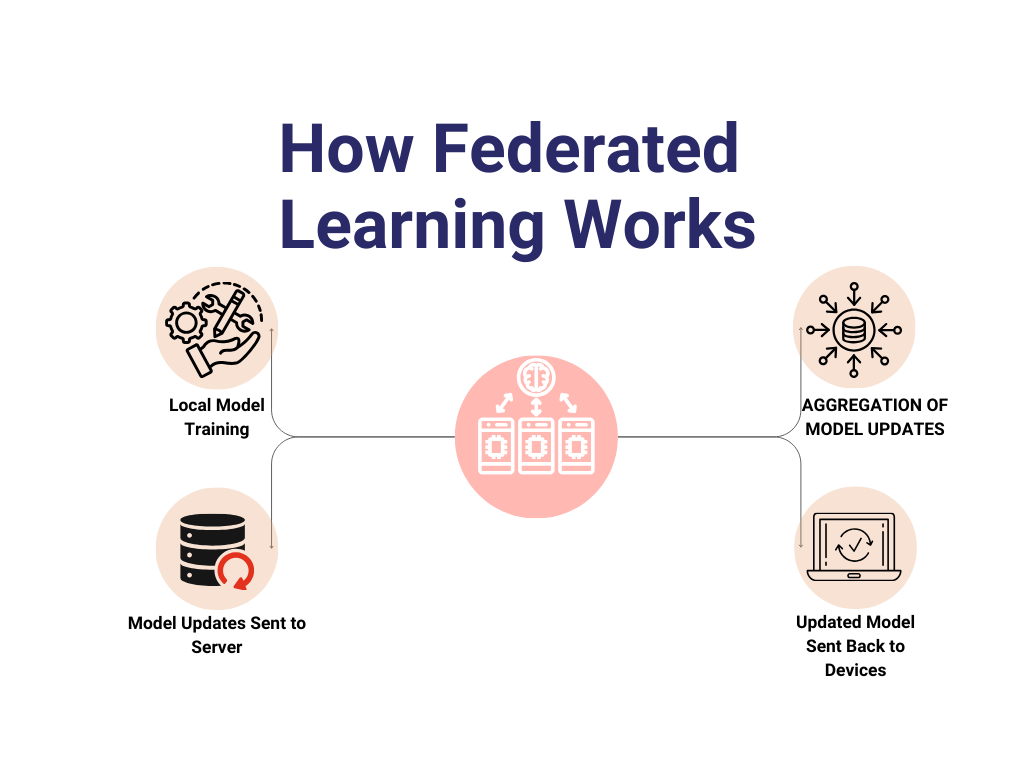

How Federated Learning Works

Federated Learning operates differently by keeping data on user devices and only sharing model updates to maintain privacy. The process follows these steps:

- Local Model Training: AI models are trained directly on user devices (e.g., smartphones, IoT devices, or edge computing systems).

- Model Updates Sent to Server: Instead of sending raw data, only model updates (such as gradients or weight changes) are shared with a central aggregator.

- Aggregation of Model Updates: The central server combines updates from multiple devices to refine the global AI model.

- Updated Model Sent Back to Devices: The improved model is distributed back to the devices, continuously improving AI performance without exposing private data.

Example: Google uses Federated Learning for its Gboard keyboard, where the model learns from users’ typing habits without sending their data to the cloud, ensuring privacy while improving predictive text accuracy.
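The federated process above can be sketched as a toy simulation. This is a minimal illustration under stated assumptions and does not represent Google's implementation: each device sends back its updated weights rather than gradients, the server takes an average weighted by local sample count (in the style of federated averaging), and the three devices and their data are hypothetical.

```python
def local_train(weights, local_data, epochs=5, lr=0.05):
    """Local model training: SGD on one device; raw data stays in this function."""
    w, b = weights
    for _ in range(epochs):
        for x, y in local_data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    # Only the updated weights and the sample count leave the device.
    return (w, b), len(local_data)


def aggregate(updates):
    """Aggregation: average client weights, weighted by local sample count."""
    total = sum(n for _, n in updates)
    w = sum(wb[0] * n for wb, n in updates) / total
    b = sum(wb[1] * n for wb, n in updates) / total
    return (w, b)


def federated_round(global_weights, devices):
    """One round: send the model out, train locally, aggregate the results."""
    updates = [local_train(global_weights, d) for d in devices]
    return aggregate(updates)


# Three hypothetical devices, each holding private samples of y = 2x - 1.
devices = [
    [(-1.0, -3.0), (0.0, -1.0)],
    [(0.5, 0.0), (1.0, 1.0), (1.5, 2.0)],
    [(-0.5, -2.0), (2.0, 3.0)],
]

weights = (0.0, 0.0)
for _ in range(200):
    weights = federated_round(weights, devices)
print(weights)
```

The server only ever handles weight pairs and sample counts, never the `(x, y)` samples themselves, which is the property the steps above rely on.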

Key Differences in How They Work

| Feature | Distributed ML | Federated Learning |
| --- | --- | --- |
| Data Location | Stored across multiple servers | Stays on user devices |
| Computation | Runs on cloud or cluster nodes | Runs on local devices (smartphones, IoT) |
| Model Synchronization | Centralized server merges updates | Model updates are aggregated securely |
| Use Case | Large-scale AI models in cloud computing | Privacy-first applications like healthcare, mobile apps |

Conclusion: Choosing the Right Approach for Your AI Needs

Both Distributed Machine Learning and Federated Learning offer unique advantages based on the type of AI model, data privacy concerns, and computational infrastructure.

- If your goal is large-scale AI model training with high computing power, Distributed ML is the best approach.

- If privacy and decentralized learning are priorities, Federated Learning is the preferred choice.

As AI continues to evolve, hybrid approaches combining both Distributed ML and Federated Learning will become more common, enabling organizations to build scalable, privacy-aware, and efficient AI models.

Frequently Asked Questions (FAQs)

- What is the main difference between Distributed Machine Learning and Federated Learning?

Distributed Machine Learning (DML) involves training AI models across multiple servers where data is split and shared for processing. In contrast, Federated Learning (FL) trains models on user devices and only shares model updates, which keeps raw data on the device and improves privacy.

- Which approach is better for privacy-sensitive applications?

Federated Learning is better for privacy-sensitive applications since raw data remains on local devices, reducing the risk of data breaches. This makes it ideal for healthcare, finance, and mobile applications where user data security is critical.

- What are the major challenges in Distributed Machine Learning?

The key challenges in Distributed ML include:

- High computational costs due to infrastructure requirements.

- Synchronization issues when merging model updates.

- Security risks since data is stored on centralized servers.

- Can Federated Learning work with deep learning models?

Yes. Federated Learning can train neural networks, but because training happens on user devices with limited computational power, it is better suited to compact models. Very large deep learning models typically require distributed cloud-based computing for scalability.

- What are some real-world applications of Distributed ML and Federated Learning?

- Distributed ML is used in autonomous vehicles, large-scale financial forecasting, and cloud-based AI models.

- Federated Learning is used in smartphones (Google Gboard), IoT devices, and personalized recommendation systems where user data privacy is a priority.

- Which AI training method should businesses choose?

- If your focus is scalability and high-performance computing, Distributed ML is the best option.

- If your goal is privacy-preserving AI with decentralized learning, Federated Learning is the right choice.

Some businesses combine both approaches for a hybrid AI strategy to balance performance and privacy.