As Artificial Intelligence (AI) continues to transform industries, CTOs face a critical decision: “Where should AI workloads run?” The choice between Edge AI and Cloud AI is pivotal, with each offering distinct advantages and challenges for AI scalability. This blog explores the differences between Edge AI and Cloud AI, covering their architectures, use cases, benefits, and limitations to help CTOs make informed decisions when building AI infrastructure at scale.

What is Edge AI?

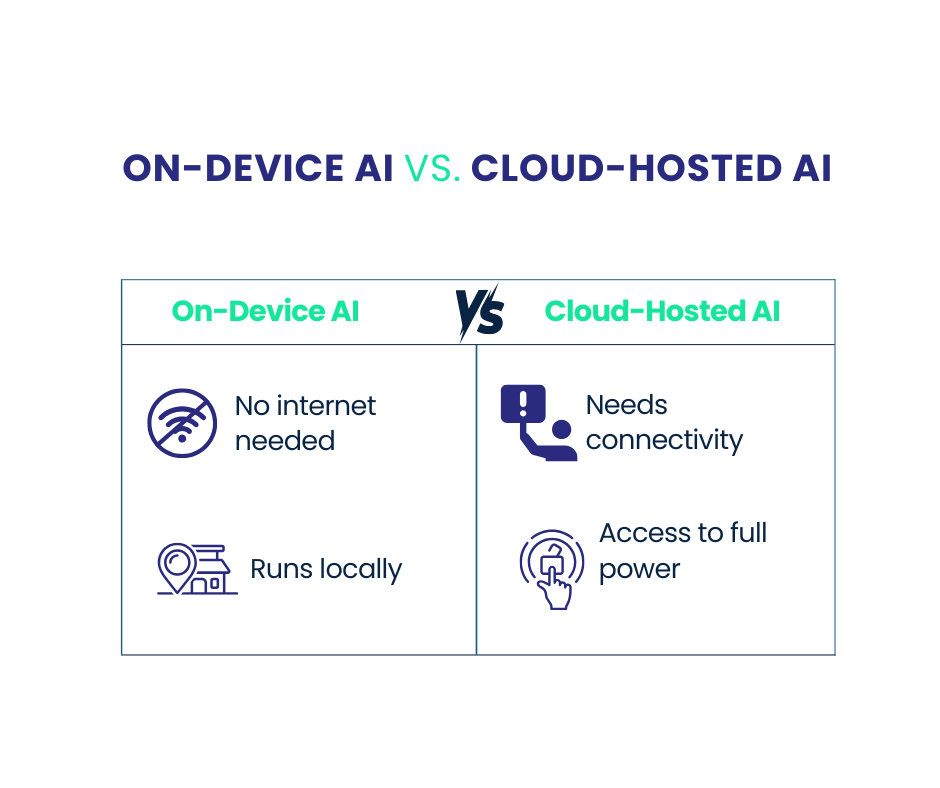

Edge AI refers to the deployment of AI models directly on devices at the edge of the network, such as IoT devices, smartphones, or edge servers, rather than relying on centralized cloud infrastructure. This approach, often called on-device AI, processes data closer to its source, reducing latency and dependence on internet connectivity.

Edge AI architectures typically rely on lightweight machine learning models optimized for resource-constrained environments. These models run on devices with limited computational power and perform tasks such as real-time analytics, image recognition, or natural language processing without constant cloud communication.

Understanding Cloud AI

Cloud-based AI, or Cloud AI, involves running AI workloads on centralized cloud servers. These servers, hosted by providers like AWS, Google Cloud, or Azure, offer vast computational resources, enabling complex model training and inference at scale. Cloud AI is ideal for applications requiring significant processing power, large datasets, or seamless integration with other cloud services.

However, Cloud AI has limitations: dependence on internet connectivity, potential latency, and data privacy and compliance concerns, especially in sensitive industries such as healthcare or finance.

Edge AI vs Cloud AI: A Detailed Comparison

To choose between Edge AI and Cloud AI, CTOs must understand their core differences:

| Aspect | Edge AI | Cloud AI |
|---|---|---|
| Processing Location | On-device or edge servers, close to the data source | Centralized cloud servers |
| Latency | Low (near real-time) due to local processing | Higher due to data transmission over networks |
| Scalability | Limited by device hardware; scales with device deployment | Near-infinite, leveraging cloud infrastructure |
| Connectivity | Operates offline or with intermittent connectivity | Requires stable internet access |
| Data Privacy | Enhanced, as data stays local | Potential risks during data transmission and cloud storage |
| Compute Power | Constrained by edge device capabilities | High, with access to GPUs, TPUs, and distributed systems |
| Cost | Upfront hardware costs; lower bandwidth expenses | Recurring costs for compute, storage, and data transfer |
| Maintenance | Distributed device management; updates can be complex | Centralized management; easier updates and monitoring |
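The latency row is easier to reason about as a budget: end-to-end response time is roughly network round trip plus inference time plus overhead. The sketch below assumes a hypothetical `LatencyProfile` class and made-up timings purely for illustration; real numbers must come from measuring your own hardware and network.

```python
from dataclasses import dataclass


@dataclass
class LatencyProfile:
    """Per-request latency components in milliseconds (values below are hypothetical)."""
    network_rtt_ms: float      # round trip to the inference endpoint (0 for on-device)
    inference_ms: float        # model execution time on the target hardware
    overhead_ms: float = 0.0   # serialization, queuing, pre/post-processing

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.inference_ms + self.overhead_ms


def meets_budget(profile: LatencyProfile, budget_ms: float) -> bool:
    """True if end-to-end latency fits within the application's budget."""
    return profile.total_ms() <= budget_ms


# Illustrative assumptions: the cloud GPU runs the model faster,
# but the network round trip dominates the total.
edge = LatencyProfile(network_rtt_ms=0.0, inference_ms=25.0, overhead_ms=5.0)
cloud = LatencyProfile(network_rtt_ms=60.0, inference_ms=8.0, overhead_ms=10.0)

budget_ms = 50.0
for name, profile in [("edge", edge), ("cloud", cloud)]:
    print(f"{name}: {profile.total_ms():.0f} ms, within budget: {meets_budget(profile, budget_ms)}")
```

Even when cloud hardware executes the model faster, the network hop can push the total past a tight budget, which is why latency-critical workloads tend to favor the edge.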

Benefits of Edge AI

Edge AI offers several advantages that make it appealing for specific use cases:

- Low Latency: By processing data locally, Edge AI minimizes delays, enabling real-time decision-making for applications like autonomous vehicles or industrial automation.

- Reduced Bandwidth Costs: Since data is processed at the edge, less information needs to be sent to the cloud, lowering bandwidth expenses.

- Enhanced Privacy: Edge AI keeps sensitive data on-device, reducing the risk of exposure during transmission and making it easier to comply with regulations like GDPR.

- Offline Functionality: Edge AI operates without constant internet access, making it ideal for remote or unstable network environments.

- Energy Efficiency: Optimized on-device models can consume less energy than continuously transmitting raw data to the cloud for processing.
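To put the bandwidth benefit in numbers, compare uploading raw camera frames with uploading only the results of local inference. This is a back-of-the-envelope sketch; the fleet size, frame size, frame rate, and payload size are all hypothetical assumptions, and the `monthly_upload_gb` helper is illustrative.

```python
def monthly_upload_gb(bytes_per_event: int, events_per_second: int, devices: int) -> float:
    """Data uploaded across a fleet over a 30-day month, in gigabytes (1 GB = 1e9 bytes)."""
    seconds_per_month = 30 * 24 * 3600
    return bytes_per_event * events_per_second * devices * seconds_per_month / 1e9


# Hypothetical fleet of 100 cameras.
# Cloud-only: stream compressed frames (~50 KB each) at 5 frames per second.
raw_gb = monthly_upload_gb(bytes_per_event=50_000, events_per_second=5, devices=100)

# Edge AI: run detection locally and send a ~200-byte JSON result once per second.
edge_gb = monthly_upload_gb(bytes_per_event=200, events_per_second=1, devices=100)

print(f"Cloud-only upload: {raw_gb:,.0f} GB/month")
print(f"Edge AI upload:    {edge_gb:,.1f} GB/month")
print(f"Reduction factor:  {raw_gb / edge_gb:,.0f}x")
```

Actual savings depend on what the edge still has to send, such as sampled frames for retraining or periodic model updates.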

Cloud AI Limitations

While Cloud AI is powerful, it has notable drawbacks:

- Latency Issues: Data transmission to and from the cloud can introduce delays, making Cloud AI less suitable for time-sensitive applications.

- Connectivity Dependence: Cloud AI requires reliable internet, which can be a bottleneck in remote areas or during network outages.

- Data Privacy Risks: Transferring sensitive data to the cloud raises security and compliance concerns, especially in regulated industries.

- Cost at Scale: While Cloud AI offers scalability, the costs of storage, compute, and data transfer can escalate with large-scale deployments.

- Single Point of Failure: Centralized cloud infrastructure can be vulnerable to outages or cyberattacks, impacting AI services.

Edge AI Use Cases

Edge AI shines in scenarios where low latency, privacy, or offline capabilities are critical. Key Edge AI use cases include:

- Autonomous Vehicles: Edge AI enables real-time object detection and decision-making, critical for safe navigation without relying on cloud connectivity.

- Smart Manufacturing: Edge computing for machine learning supports predictive maintenance and quality control by analyzing sensor data on factory floors.

- Healthcare Devices: Wearables and medical devices use Edge AI to process patient data locally, ensuring privacy and enabling real-time monitoring.

- Retail: Smart cameras with Edge AI analyze customer behavior in stores, optimizing layouts and inventory without sending video feeds to the cloud.

- Smart Cities: Traffic management systems use Edge AI to process data from sensors and cameras, optimizing flow in real time.

Cloud AI Use Cases

Cloud AI is best suited for applications requiring significant computational resources or centralized data management:

- Large-Scale Model Training: Cloud AI leverages powerful GPUs and TPUs to train complex models, such as those used in natural language processing or image generation.

- Big Data Analytics: Cloud platforms process massive datasets for business intelligence, fraud detection, or customer segmentation.

- AI-as-a-Service: Platforms like Google’s Vertex AI or AWS SageMaker provide pre-built models and APIs, simplifying development for businesses.

- Collaborative AI: Cloud AI enables teams to access shared models and data, ideal for distributed development environments.

Edge AI Architecture: Building for Scale

Designing an Edge AI architecture requires careful consideration of hardware, software, and deployment strategies:

- Hardware: Edge devices, such as NVIDIA Jetson or Raspberry Pi, must balance computational power with energy efficiency. Specialized accelerators such as Edge TPUs or NPUs can speed up AI tasks.

- Model Optimization: Techniques like quantization, pruning, and knowledge distillation reduce model size and complexity for edge deployment.

- Data Management: Edge AI systems must handle local storage and periodic synchronization with the cloud for updates or analytics.

- Security: Edge devices need robust encryption and secure boot mechanisms to protect against tampering.
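Of the optimization techniques above, quantization is the simplest to illustrate. The following is a minimal pure-Python sketch of symmetric per-tensor int8 quantization, which stores each weight in 8 bits instead of 32 (roughly a 4x reduction in weight storage). The helper names are hypothetical; production toolchains such as TensorFlow Lite, PyTorch, or ONNX Runtime add calibration data, per-channel scales, and quantized kernels on top of this basic idea.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [scale * v for v in q]


weights = [0.82, -1.27, 0.05, 0.40, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max abs error={max_error:.4f}")
```

Because the scale maps the largest-magnitude weight exactly to 127, no value is clipped and the per-weight rounding error stays within half a quantization step.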

For AI infrastructure at scale, CTOs may adopt a hybrid approach, combining Edge AI for real-time tasks and Cloud AI for training and analytics. For example, an Edge AI model on a smart camera can detect anomalies, while the cloud aggregates data for long-term trends.
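The smart-camera pattern can be sketched as an edge-side detector that flags readings far from a rolling baseline and buffers only those events for later upload, keeping them if the network is down. The class name, window size, z-score threshold, and upload callback are hypothetical assumptions, not a specific product's API; a real deployment would use a trained model rather than a simple statistical rule.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Callable


class EdgeAnomalyDetector:
    """Hypothetical sketch: flag outliers locally, buffer them, sync to the cloud later."""

    def __init__(self, window: int = 20, threshold: float = 3.0, max_buffer: int = 1000):
        self.history = deque(maxlen=window)     # recent readings kept on-device
        self.threshold = threshold              # z-score above which a reading is anomalous
        self.outbox = deque(maxlen=max_buffer)  # pending events; oldest dropped if full

    def observe(self, timestamp: float, value: float) -> bool:
        """Return True if the reading is anomalous relative to the rolling window."""
        is_anomaly = False
        if len(self.history) >= 2:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
                self.outbox.append({"ts": timestamp, "value": value, "baseline": mu})
        # Note: this sketch adds every reading, including anomalies, to the baseline.
        self.history.append(value)
        return is_anomaly

    def sync(self, upload: Callable[[dict], bool]) -> int:
        """Send buffered events in order; stop and keep the rest if an upload fails."""
        sent = 0
        while self.outbox:
            if not upload(self.outbox[0]):  # e.g., network unavailable
                break
            self.outbox.popleft()
            sent += 1
        return sent


# Usage with a stand-in uploader (a real one would call your cloud ingestion endpoint).
received = []

def fake_upload(event: dict) -> bool:
    received.append(event)
    return True

detector = EdgeAnomalyDetector(window=10, threshold=3.0)
readings = [20.0, 20.5, 19.8, 20.2, 20.1, 19.9, 20.3, 35.0, 20.0]
flags = [detector.observe(t, v) for t, v in enumerate(readings)]
sent = detector.sync(fake_upload)
print(flags, sent, received)
```

Only the flagged event leaves the device; the cloud side can then aggregate these events across the fleet for long-term trend analysis.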

Scaling Considerations for CTOs

When scaling AI, CTOs must weigh the following factors:

- Performance Requirements: If low latency is critical, Edge AI is preferable. For compute-intensive tasks, Cloud AI is the better choice.

- Cost Management: Edge AI reduces bandwidth costs but requires investment in edge hardware. Cloud AI involves recurring costs for compute and storage.

- Data Sensitivity: Industries with strict privacy regulations may favor Edge AI to minimize data exposure.

- Infrastructure Complexity: Cloud AI simplifies hardware management but introduces network dependencies. Edge AI requires distributed device management.

- Scalability Needs: Cloud AI offers near-infinite scalability, while Edge AI may require careful planning to deploy across thousands of devices.
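The cost trade-off can be framed as a simple break-even calculation: Edge AI front-loads hardware spending, while Cloud AI accrues recurring charges. The sketch below uses a hypothetical `breakeven_months` helper and invented prices; it ignores hardware refresh cycles, volume discounts, and engineering time, all of which matter in a real assessment.

```python
import math


def breakeven_months(edge_upfront: float, edge_monthly: float, cloud_monthly: float) -> float:
    """Months after which cumulative edge cost equals cumulative cloud cost.

    Edge:  upfront hardware plus monthly operations (management, power, connectivity).
    Cloud: monthly compute, storage, and data transfer.
    Returns math.inf if the edge option never catches up.
    """
    monthly_savings = cloud_monthly - edge_monthly
    if monthly_savings <= 0:
        return math.inf
    return edge_upfront / monthly_savings


# Hypothetical fleet of 500 devices; replace with your own quotes and bills.
months = breakeven_months(
    edge_upfront=500 * 400.0,  # assumed $400 per edge device
    edge_monthly=500 * 5.0,    # assumed $5 per device per month for management and connectivity
    cloud_monthly=22_500.0,    # assumed cloud bill for the same workload
)
print(f"Edge breaks even after {months:.1f} months")
```

If the expected device lifetime is shorter than the break-even point, the cloud option is likely cheaper for that workload.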

Hybrid Approach: The Best of Both Worlds

For many organizations, a hybrid strategy that combines Edge AI and Cloud AI optimizes performance and cost. Edge AI handles real-time, privacy-sensitive tasks, while Cloud AI manages training, updates, and analytics. For example, a smart home device might use Edge AI for voice recognition and Cloud AI for natural language understanding when the internet is available.
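The smart home example implies a routing decision: use the cloud for language understanding when connected, and degrade gracefully when not. This sketch assumes speech has already been transcribed on-device and that a small local intent matcher exists as a fallback; the function names and the keyword matcher are hypothetical stand-ins for real models and cloud clients.

```python
from typing import Callable, Optional


def answer_command(
    transcript: str,
    cloud_nlu: Callable[[str], Optional[str]],
    local_intent: Callable[[str], str],
    is_online: Callable[[], bool],
) -> tuple[str, str]:
    """Prefer cloud NLU when connected; otherwise fall back to an on-device model.

    Returns (intent, source), where source is "cloud" or "edge".
    """
    if is_online():
        try:
            result = cloud_nlu(transcript)
            if result is not None:
                return result, "cloud"
        except (TimeoutError, ConnectionError):
            pass  # network failed mid-request; fall through to the edge model
    return local_intent(transcript), "edge"


def local_intent(text: str) -> str:
    """Hypothetical tiny on-device matcher covering a few common commands."""
    return "lights_on" if "lights on" in text.lower() else "unknown"


def unreachable_cloud(text: str) -> Optional[str]:
    """Stand-in for a cloud client whose connection drops."""
    raise ConnectionError("no route to host")


print(answer_command("Turn the lights on", unreachable_cloud, local_intent, is_online=lambda: True))
# ('lights_on', 'edge')
```

Returning the source alongside the result lets the device log how often it runs degraded, which helps decide whether the on-device fallback needs a more capable model.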

Conclusion

Choosing between Edge AI and Cloud AI depends on the specific needs of your organization. Edge AI excels in low-latency, privacy-focused, and offline scenarios, while Cloud AI is ideal for compute-intensive tasks and centralized data management. By understanding the benefits of Edge AI, the limitations of Cloud AI, and the trade-offs between edge and cloud deployment, CTOs can design AI infrastructure at scale that aligns with their business goals. A hybrid approach often provides the flexibility to leverage both, enabling robust and efficient AI deployments.

Start Building a Smarter AI Framework: Contact Us for a Custom Assessment.

FAQs:

1. What is the key difference between Edge AI and Cloud AI?

Edge AI runs on local devices near the data source, while Cloud AI relies on centralized cloud servers. This affects latency, connectivity needs, and data privacy.

2. Which option offers lower latency for real-time processing?

Edge AI delivers faster response times by processing data locally, making it ideal for time-sensitive applications like autonomous systems or industrial automation.

3. Is Cloud AI more scalable than Edge AI?

Yes. Cloud AI offers virtually unlimited scalability using powerful infrastructure like GPUs and TPUs. Edge AI requires scaling hardware across devices, which adds complexity.

4. How does Edge AI enhance data privacy?

Edge AI processes data on-device, minimizing transmission to the cloud. This reduces exposure and helps comply with privacy regulations like GDPR or HIPAA.

5. Can Edge AI work without an internet connection?

Yes. Edge AI is designed to function offline or with intermittent connectivity, making it ideal for remote locations or mission-critical systems.

6. Should I choose Edge AI, Cloud AI, or a hybrid approach?

It depends on your use case. For real-time, privacy-focused tasks, Edge AI is ideal. For heavy computation and centralized analytics, Cloud AI is better. Most organizations benefit from a hybrid model.