Your company invests months building a machine learning model. It launches, everyone celebrates, and you see impressive business results. Fast forward a few months, and the initial performance starts to fade. Customer complaints rise, forecasts seem off, and manual fixes multiply.

The model is still running. There are no system errors. So what’s happening?

Welcome to the world of drift.

Most machine learning failures aren’t due to bugs or outages—they happen quietly, as the world moves on and your AI stays stuck in yesterday’s reality. The solution is not more development, but smarter oversight: drift monitoring.

Drift monitoring isn’t just about technical “health checks.” It’s about business resilience, customer trust, and ongoing value. In this guide, we’ll take you from drift basics to advanced monitoring, revealing how smart companies keep their AI relevant and reliable—no matter how fast things change.

What is Drift Monitoring? (Why Modern AI Can’t Ignore It)

Drift monitoring is the continuous process of observing your machine learning models, input data, and outcomes to detect when something has changed—before that change hurts your business.

Unlike traditional software, machine learning models learn from historical data—but data evolves. Drift monitoring lets you catch those changes in real time, providing early warnings and actionable insights.

The Role of Drift Monitoring in ML Operations

- Proactive Protection: Instead of discovering issues after the fact (through missed KPIs or angry users), drift monitoring flags problems as soon as they emerge.

- Transparency for All: Regulators, executives, and customers increasingly demand accountability for automated decisions. Drift reporting and audit trails offer peace of mind.

- Continuous Improvement: By detecting when and where models break, teams gain invaluable lessons to guide future feature engineering, retraining, and deployment.

The Business Case: From Insurance to Competitive Advantage

Think of drift monitoring as insurance for your AI, only better. Rather than paying for repairs after disaster strikes, you get live alerts and preventive care that help keep your AI aligned with business realities. In competitive industries, this is a game-changer.

Real-World Example

Consider a major online retailer. Their recommendation engine once boosted sales, but over time, conversion rates mysteriously dropped. Only after weeks of lost revenue did the team discover the issue: new product categories were introduced, but the model hadn’t adapted.

Lesson learned: Without drift monitoring, even the best AI can quietly turn into a liability.

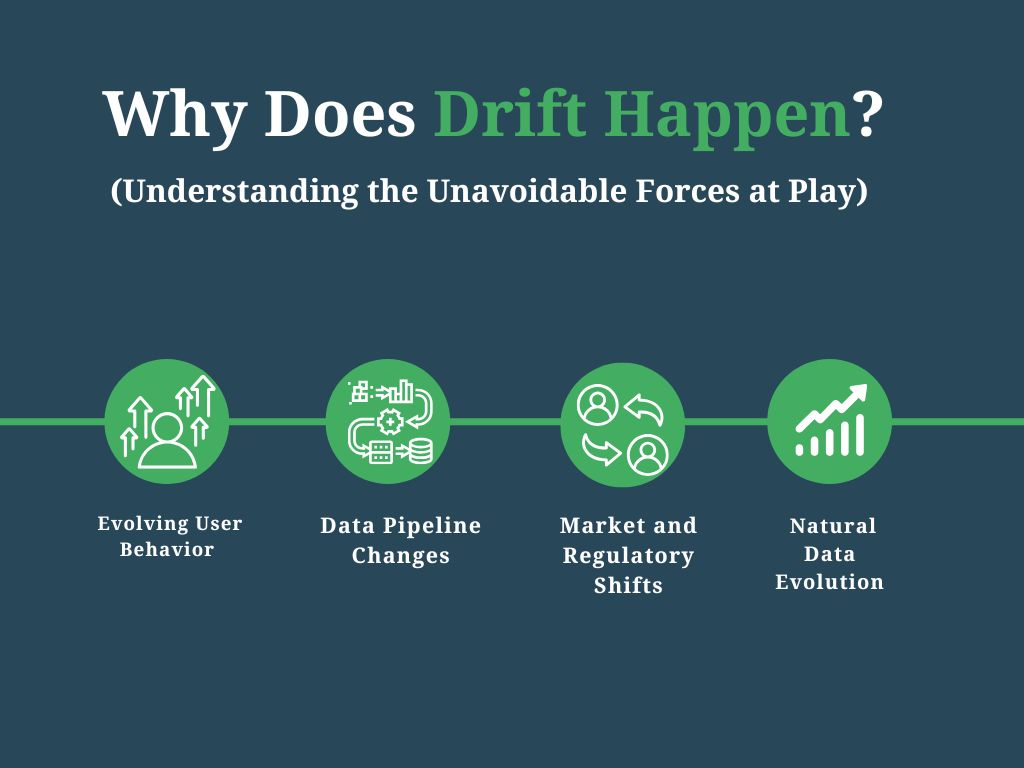

Why Does Drift Happen? (Understanding the Unavoidable Forces at Play)

No matter how good your model is, drift is inevitable. Here’s why:

1. Evolving User Behavior

Customers aren’t static. Their preferences, buying habits, and even the platforms they use change with trends, seasons, or global events.

Example: During COVID-19, sales of home workout equipment skyrocketed; retailers that spotted this shift in their data early were best placed to benefit.

2. Data Pipeline Changes (Database Drift)

Behind the scenes, data engineers are constantly updating systems—adding new fields, migrating databases, or changing APIs.

Database drift occurs when these changes disrupt the structure or quality of input data. The model may receive missing, swapped, or malformed features, leading to unexpected predictions.
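A lightweight guard against database drift is to validate every incoming batch against the schema the model was trained on before it is scored. The sketch below is illustrative rather than any specific tool’s API: the function name `validate_batch`, the dict-based schema format, and the 1% missing-value tolerance are all assumptions.

```python
def validate_batch(rows, schema, max_null_rate=0.01):
    """Compare a batch of incoming records with the schema the model was trained on.

    `rows` is a list of dicts; `schema` maps each expected field name to its
    expected Python type (an assumed format). Returns a list of problems;
    an empty list means the batch passed.
    """
    if not rows:
        return ["empty batch"]
    problems = []
    n = len(rows)
    for field, expected_type in schema.items():
        values = [row.get(field) for row in rows]
        # Absent fields and explicit nulls both count as missing.
        missing = sum(v is None for v in values)
        if missing / n > max_null_rate:
            problems.append(f"{field}: {missing}/{n} missing")
        # Present but wrongly typed, e.g. a text column swapped into a numeric slot.
        wrong_type = sum(v is not None and not isinstance(v, expected_type) for v in values)
        if wrong_type:
            problems.append(f"{field}: {wrong_type} value(s) not {expected_type.__name__}")
    # Fields the model has never seen often indicate an upstream schema change.
    unexpected = set().union(*(row.keys() for row in rows)) - set(schema)
    if unexpected:
        problems.append(f"unexpected fields: {sorted(unexpected)}")
    return problems
```

Running a check like this at the pipeline boundary separates broken data from genuine distribution changes, so statistical drift tests are not fed malformed inputs.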

3. Market and Regulatory Shifts

Regulatory updates (GDPR, CCPA) might force you to drop certain data fields or anonymize sensitive information, causing sudden feature drift or even concept drift if your model’s logic depends on those fields.

4. Natural Data Evolution

Populations age, new products launch, competitors adjust their strategies. Each change is reflected in your incoming data and ultimately in your AI’s outputs.

The Takeaway

Drift isn’t a sign your AI team failed. It’s a sign your business and environment are moving forward. Drift monitoring is how you keep up.

The Five Major Types of Drift (And How to Detect Each)

A robust monitoring system looks for all five kinds of drift. Here’s what to watch for:

1. Data Drift (Input Drift)

What it is:

A shift in the statistical distribution of your model’s input features, compared to training data.

Why it matters:

The model was built on one reality; production is showing another. Even if your model’s accuracy appears stable, its predictions may become less relevant.

How to detect:

- Monitor feature distributions (means, medians, ranges).

- Use statistical tests such as the two-sample Kolmogorov-Smirnov (KS) test, or distribution-shift metrics such as the Population Stability Index (PSI).

- Set up visual dashboards to see sudden or gradual changes.
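To make the two checks concrete, here is a minimal stdlib-only sketch that compares one numeric feature in a reference (training) sample with a production sample. The function names, the 10 quantile bins, and the small `eps` floor for empty bins are assumptions chosen for illustration.

```python
import bisect
import math

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical CDFs of the two samples (0 = identical, 1 = no overlap)."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap is reached
    # at one of the observed values.
    for x in ref + cur:
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap

def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index with bins cut at reference-data quantiles."""
    ref = sorted(reference)
    edges = [ref[len(ref) * i // n_bins] for i in range(1, n_bins)]

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # eps keeps the log finite when a bin is empty in one of the samples.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = bin_shares(reference), bin_shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A commonly cited rule of thumb (a heuristic, not a standard) reads PSI below 0.1 as little change, 0.1 to 0.25 as moderate, and above 0.25 as significant. If you need a p-value for the KS test rather than just the statistic, `scipy.stats.ks_2samp` computes both.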

Deep Dive Example:

A food delivery app sees a jump in orders from a new neighborhood after a marketing campaign. If no one is tracking this drift, the model’s delivery-time estimates can become unreliable for these new users.

2. Concept Drift

What it is:

The relationship between input features and the output variable changes.

Why it matters:

Your model’s logic is now out of sync with reality. Old correlations no longer hold.

How to detect:

- Track performance metrics (accuracy, precision, recall) over time and by segment.

- Use sliding-window validation to compare the model’s performance on recent, labeled data with its historical performance.
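Sliding-window validation can be as simple as keeping the most recent N labeled outcomes and comparing their accuracy with the accuracy measured at deployment. This is a sketch under assumed defaults: the class name, the 500-prediction window, and the 5-point tolerance are hypothetical and should be tuned per model. Note that it only works once ground-truth labels arrive, which for some use cases (loan defaults, churn) can take weeks.

```python
from collections import deque

class SlidingWindowMonitor:
    """Track accuracy over the most recent labeled predictions and flag concept
    drift when it falls more than `tolerance` below the accuracy measured at
    deployment (`baseline_accuracy`)."""

    def __init__(self, baseline_accuracy, window=500, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.hits = deque(maxlen=window)  # oldest results fall out automatically

    def update(self, prediction, actual):
        """Record one prediction once its true label becomes available."""
        self.hits.append(prediction == actual)

    def accuracy(self):
        return sum(self.hits) / len(self.hits) if self.hits else None

    def drifted(self):
        # Judge only a full window so a few early errors cannot trigger an alert.
        if len(self.hits) < self.hits.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance
```

Running one monitor per customer segment, rather than one global monitor, helps surface drift that an overall average would hide.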

Deep Dive Example:

A credit scoring model may see concept drift if economic downturns change how employment history predicts loan defaults.

3. Feature Drift

What it is:

Individual features become more or less predictive, or their distributions change within certain user segments.

Why it matters:

Even if the data looks stable overall, shifts in a few key features can undermine performance, especially in complex models.

How to detect:

- Monitor feature importances over time (e.g., SHAP values, permutation importance).

- Track feature statistics by user segment.
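Permutation importance measures how much a model relies on each feature by shuffling that feature and recording the accuracy drop. Computing it on a reference window and again on a recent window, then comparing the two, is one way to spot feature drift. The sketch below is a simplified, stdlib-only version; the function names, `n_repeats=5`, and the 0.05 tolerance are assumptions. In practice, `sklearn.inspection.permutation_importance` or SHAP values from the `shap` library would usually do the scoring.

```python
import random

def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average drop in accuracy when each feature column is shuffled.

    `predict` takes a list of rows (sequences of feature values) and returns a
    list of predicted labels. A larger drop means the model leans on that
    feature more heavily."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(p == t for p, t in zip(predict(rows), y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value
                shuffled.append(new_row)
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances

def shifted_features(reference_importance, current_importance, tolerance=0.05):
    """Indices of features whose importance moved by more than `tolerance`."""
    return [j for j, (r, c) in enumerate(zip(reference_importance, current_importance))
            if abs(c - r) > tolerance]
```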

Deep Dive Example:

If more users start signing in with Apple ID instead of Google, the authentication-method feature will drift, which can affect fraud models.

4. Target Drift

What it is:

The distribution of the target variable (the label your model predicts) changes.

Why it matters:

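When the base rates of outcomes shift, a model trained on the old proportions will systematically over- or under-predict some classes, even if the relationship between inputs and outputs has not changed.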

How to detect:

- Visualize and monitor label proportions over time.

- Set up alerts for sudden spikes in certain classes.
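Monitoring label proportions boils down to comparing class frequencies between a reference window and a recent one. A minimal sketch follows; the function names and the 5-percentage-point alert threshold are illustrative assumptions.

```python
from collections import Counter

def label_proportions(labels):
    """Share of each class in a list of observed labels."""
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}

def target_drift_alerts(reference_labels, current_labels, max_abs_change=0.05):
    """Classes whose share moved by more than `max_abs_change` (absolute),
    mapped to the signed change. Classes new to either window are included."""
    ref = label_proportions(reference_labels)
    cur = label_proportions(current_labels)
    alerts = {}
    for label in set(ref) | set(cur):
        change = cur.get(label, 0.0) - ref.get(label, 0.0)
        if abs(change) > max_abs_change:
            alerts[label] = round(change, 4)
    return alerts
```

In the churn example, a reference window with 10% churners and a recent window with 20% would return `{"churn": 0.1, "stay": -0.1}`.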

Deep Dive Example:

After a company-wide price increase, churn rates jump. Models not tracking target drift may underpredict cancellations.

5. Prediction Drift (Model Drift)

What it is:

The output distribution of your model changes—even if input and logic look stable.

Why it matters:

This could mean data quality issues, pipeline bugs, or emerging model bias.

How to detect:

- Monitor prediction probabilities, output classes, and score distributions.

- Compare current outputs to established baselines.
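One way to compare current outputs with a baseline is to bin the model’s scores and measure the Jensen-Shannon divergence between the two histograms. This sketch assumes scores are probabilities in [0, 1]; the ten equal-width bins and the function names are illustrative choices.

```python
import math

def score_histogram(scores, n_bins=10):
    """Share of model scores (assumed to lie in [0, 1]) in equal-width bins."""
    counts = [0] * n_bins
    for s in scores:
        counts[min(int(s * n_bins), n_bins - 1)] += 1  # a score of 1.0 goes in the last bin
    return [c / len(scores) for c in counts]

def js_divergence(p, q):
    """Jensen-Shannon divergence in bits between two discrete distributions:
    0 means identical, 1 means no overlap at all."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        # Terms with x = 0 contribute nothing; y > 0 wherever x > 0 because y = m.
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)
```

Unlike KL divergence, the JS divergence is symmetric and bounded, which makes a fixed alert threshold easier to reason about. For classifiers, the same function can compare predicted-class frequencies directly.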

Deep Dive Example:

A chatbot’s answers slowly become less varied, repeating the same solutions for all users. Prediction drift like this often points to deeper problems.

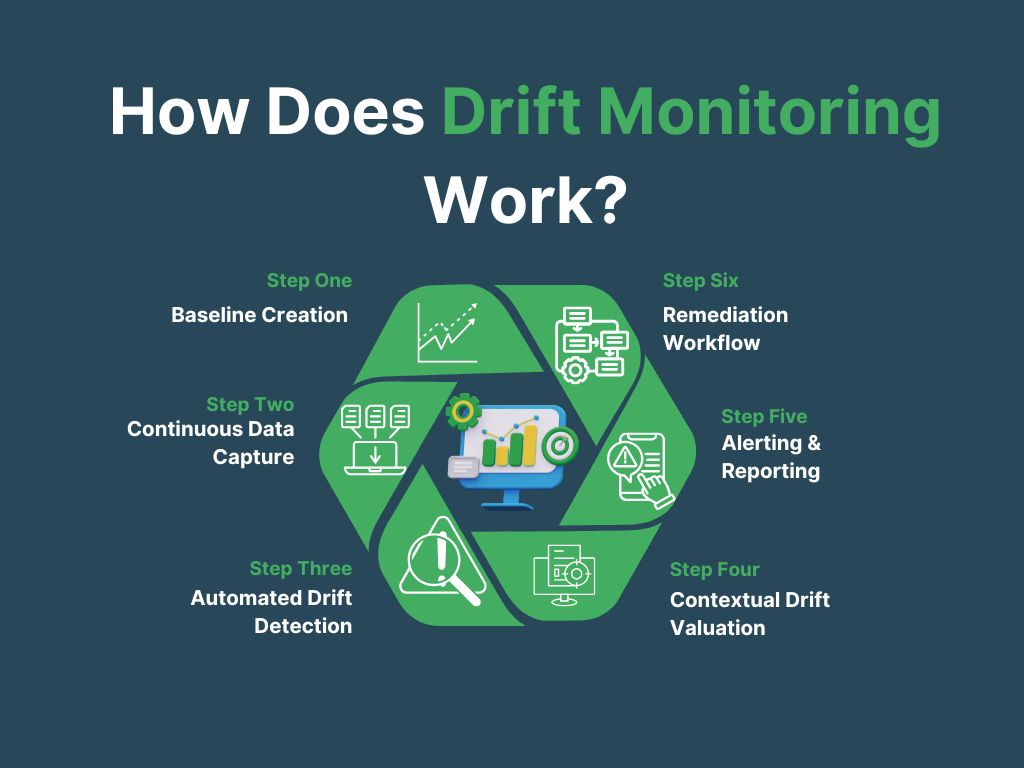

How Does Drift Monitoring Work?

Step 1: Baseline Creation

- Analyze your historical training data and set reference distributions for every key feature, target, and output.

- Document these baselines for future audits.
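A baseline can be a small per-feature profile computed once from the training data and stored next to the model. The sketch below assumes numeric columns supplied as a dict of lists; the field names, the `model_version` and `training_data` metadata keys, and the example file name are hypothetical.

```python
import json
import statistics

def build_baseline(training_columns, n_quantiles=10):
    """Summarize each numeric training column into a reference profile that
    later drift checks can compare against."""
    baseline = {}
    for name, values in training_columns.items():
        baseline[name] = {
            "count": len(values),
            "mean": statistics.fmean(values),
            "stdev": statistics.stdev(values),
            "min": min(values),
            "max": max(values),
            # n_quantiles - 1 cut points splitting the data into equal-count groups.
            "quantiles": statistics.quantiles(values, n=n_quantiles),
        }
    return baseline

def baseline_to_json(baseline, model_version, training_data_ref):
    """Serialize the baseline with enough context to audit it later."""
    return json.dumps(
        {"model_version": model_version, "training_data": training_data_ref,
         "features": baseline},
        indent=2, sort_keys=True)
```

For example, `baseline_to_json(build_baseline({"age": ages}), "v1", "train-2024-01.parquet")` produces a versioned document you can commit or store with the model artifact.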

Step 2: Continuous Data Capture

- Log every prediction, input, and outcome as your model runs in production.

- Build infrastructure for scalable, secure storage and fast retrieval.
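A simple, widely used logging format is one JSON object per line (JSON Lines). Because true outcomes often arrive long after the prediction, this sketch logs them as separate records keyed by a request ID. The record fields are an illustrative schema, not a standard.

```python
import json
import time
import uuid

def prediction_log_line(model_version, features, prediction):
    """Build one JSON Lines record for a production prediction."""
    record = {
        "request_id": str(uuid.uuid4()),  # key for joining the true outcome later
        "logged_at": time.time(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
    }
    return json.dumps(record) + "\n"

def outcome_log_line(request_id, outcome):
    """Ground truth often arrives later (e.g. a churn label weeks afterwards),
    so it is logged separately and joined on request_id."""
    return json.dumps({"request_id": request_id, "outcome": outcome,
                       "logged_at": time.time()}) + "\n"
```

The lines can be appended to a file, a message queue, or an object store; at scale, a columnar store partitioned by date and model version keeps drift queries fast.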

Step 3: Automated Drift Detection

- Schedule periodic (or real-time) checks comparing live data to baselines.

- Use a combination of statistical tests, domain thresholds, and business rules.
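Here is a sketch of one scheduled check that combines a statistical test with a domain rule for a single numeric feature. It assumes a stored baseline dict with `mean` and `stdev` keys (a hypothetical format) and a non-zero baseline standard deviation. The z-test only detects shifts in the mean, so in practice it would run alongside distribution tests such as KS or PSI.

```python
import statistics

def check_feature(live_values, baseline, max_z=3.0, allowed_range=None):
    """Compare one feature's live window with its stored baseline.

    `baseline` is a dict with "mean" and "stdev" taken from training data
    (an assumed format). Returns human-readable findings; an empty list
    means nothing was flagged."""
    findings = []
    live_mean = statistics.fmean(live_values)
    # Statistical check: how many standard errors the live mean sits from
    # the training mean (assumes a non-zero baseline stdev).
    std_error = baseline["stdev"] / len(live_values) ** 0.5
    z = (live_mean - baseline["mean"]) / std_error
    if abs(z) > max_z:
        findings.append(f"mean moved to {live_mean:.3g} (z = {z:.1f})")
    # Domain rule: values that should be impossible, whatever their frequency.
    if allowed_range is not None:
        low, high = allowed_range
        outside = sum(not low <= v <= high for v in live_values)
        if outside:
            findings.append(f"{outside} value(s) outside {allowed_range}")
    return findings
```

A scheduler (cron, an orchestration tool, or a streaming job) would call a function like this for every monitored feature on each new window of logged data.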

Step 4: Contextual Drift Valuation

- Not every drift matters equally. Estimate potential business, financial, or reputational impact for each detected event.

- Assign severity levels and escalate accordingly.
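Severity can be expressed as a statistical drift score (for example a PSI value) weighted by how much the affected feature matters to the business. The weighting scheme, the `business_weight` scale, and the thresholds below are assumptions meant to be agreed with stakeholders, not established values.

```python
from dataclasses import dataclass

@dataclass
class DriftEvent:
    feature: str
    drift_score: float      # e.g. a PSI value from the statistical check
    business_weight: float  # 0..1, agreed with stakeholders: how much this feature drives KPIs

def severity(event, thresholds=(0.1, 0.25)):
    """Map statistical drift and business weight to a severity level.
    The threshold values and weighting scheme are illustrative assumptions."""
    impact = event.drift_score * event.business_weight
    low, high = thresholds
    if impact >= high:
        return "critical"  # page the on-call owner; consider rollback or retraining
    if impact >= low:
        return "warning"   # open a ticket for the model owner
    return "info"          # record on the dashboard only
```

With these settings, a PSI of 0.5 on a core pricing feature (weight 1.0) is critical, while the same shift on a cosmetic feature (weight 0.1) is only informational.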

Step 5: Alerting & Reporting

- Set up clear, actionable alerts for significant drift.

- Provide dashboards for both technical and business teams, with drill-downs for root cause analysis.
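One common way to keep alerts actionable is to fire only when drift persists across several consecutive checks, and to stay silent until the condition clears. The sketch below assumes each check yields a boolean; the class name and the default of three consecutive checks are hypothetical.

```python
class PersistentAlert:
    """Raise an alert only after drift is seen in `k` consecutive checks,
    and stay quiet until it clears, to limit alert fatigue from one-off blips."""

    def __init__(self, k=3):
        self.k = k
        self.streak = 0
        self.active = False

    def observe(self, drifted):
        """Feed one check result; return True only when a new alert should fire."""
        self.streak = self.streak + 1 if drifted else 0
        if self.streak >= self.k and not self.active:
            self.active = True
            return True
        if not drifted:
            self.active = False  # condition cleared; the next streak may alert again
        return False
```

The trade-off is latency: with `k=3` and daily checks, a real shift is reported two days later than a single-check rule would report it, in exchange for fewer false alarms.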

Step 6: Remediation Workflow

- Retrain, recalibrate, or replace your model as needed.

- Document every intervention and result for future learning.
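A remediation workflow is easier to follow when the decision rules are written down explicitly. This sketch maps a severity level, the observed accuracy drop, and the amount of fresh labeled data to an action, then produces an audit record. The action names and thresholds are placeholders, and replacing a model outright is left as a human decision rather than encoded here.

```python
from datetime import datetime, timezone

def choose_action(severity, accuracy_drop, fresh_labels,
                  max_drop=0.05, min_labels=1000):
    """Pick a response to a drift event. Thresholds are placeholders to be
    agreed with the model owner."""
    if severity == "info":
        return "monitor"
    if fresh_labels < min_labels:
        return "investigate"  # not enough recent ground truth to retrain safely
    if accuracy_drop > max_drop:
        return "retrain"
    return "recalibrate"      # e.g. adjust decision thresholds on recent data

def intervention_record(event_id, action, notes=""):
    """Audit-trail entry so later reviews can see what was done and why."""
    return {"event_id": event_id, "action": action, "notes": notes,
            "decided_at": datetime.now(timezone.utc).isoformat()}
```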

Pro Tip: Make drift reporting part of every model’s “runbook”—so anyone can respond quickly, not just the original developer.

Where Drift Monitoring Is Essential: High-Impact Use Cases

E-commerce & Retail

Situation: Launch of new products, seasonal promotions, or sudden social trends.

Without drift monitoring:

Missed upsell opportunities, stockouts, and wasted ad spend.

With drift monitoring:

Automated detection of new trends, adaptive recommendations, and efficient inventory planning.

Finance & Banking

Situation: Rapid changes in market risk, new fraud patterns, or regulatory updates.

Without drift monitoring:

Regulatory fines, increased fraud losses, and customer churn.

With drift monitoring:

Real-time fraud pattern detection, agile risk models, and compliance confidence.

Healthcare

Situation: New treatments, changing disease rates, and evolving patient demographics.

Without drift monitoring:

Outdated diagnostic models, patient safety risks, and loss of trust.

With drift monitoring:

Safer, more accurate predictions—and a clear audit trail for clinical review.

Marketing & Ad Tech

Situation: Audience fatigue, creative wear-out, and platform algorithm changes.

Without drift monitoring:

Ineffective campaigns and wasted budget.

With drift monitoring:

Real-time campaign optimization and early detection of creative fatigue.

The Best Tools for Drift Monitoring: What to Choose and Why

Evidently AI

- Overview: Python-based, open-source, designed for ease of use and powerful visualization.

- Strengths: Customizable reports, intuitive dashboards, rapid integration with Jupyter notebooks or ML pipelines.

- Ideal for: Data science teams looking for flexibility and transparency.

Alibi Detect

- Overview: Statistical and ML-based drift and outlier detection, with support for tabular, text, and image data.

- Strengths: Advanced algorithms, easy integration with ML stacks, research-friendly.

- Ideal for: Teams needing cutting-edge detection or support for complex data types.

Azure, AWS, GCP MLOps

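- Overview: Enterprise-grade managed services (for example Azure Machine Learning, Amazon SageMaker Model Monitor, and Vertex AI Model Monitoring) with end-to-end monitoring, retraining, and reporting.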

- Strengths: Scale, compliance, seamless integration with deployment and CI/CD.

- Ideal for: Companies running critical models at scale, or requiring strict governance.

Expert Insight:

Start small: use open-source tools to learn what matters most, then invest in managed platforms as your needs and models scale.

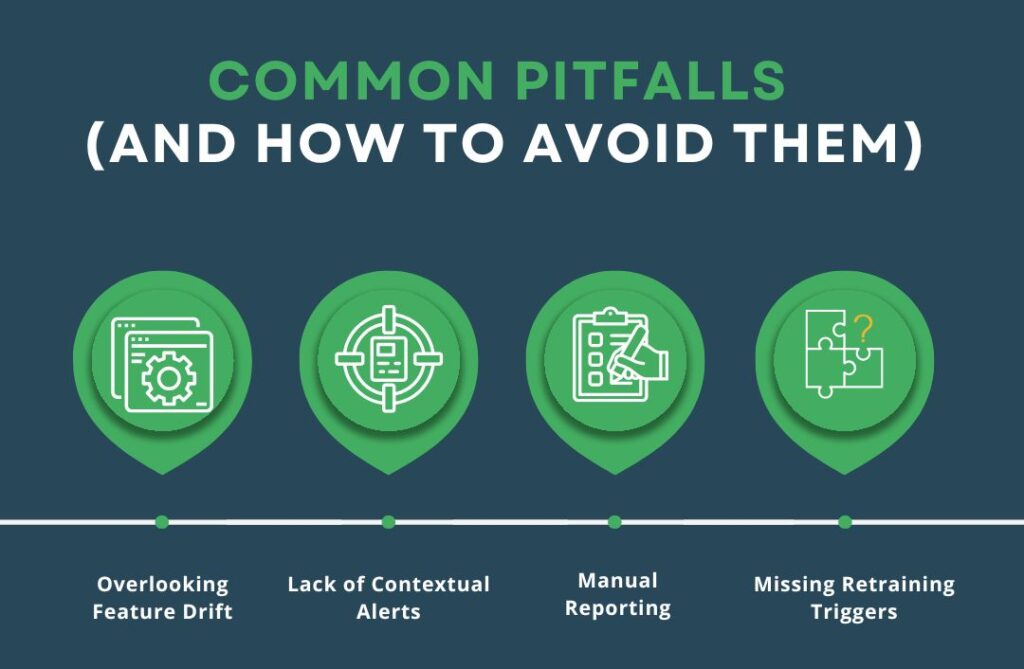

Common Pitfalls (And How to Avoid Them)

- Overlooking Feature Drift: Don’t just track overall accuracy—watch individual features for subtle changes.

- Lack of Contextual Alerts: Too many false positives? Tie alerts to business metrics, not just raw numbers.

- Manual Reporting: Automate as much as possible to avoid human error and reduce response time.

- Missing Retraining Triggers: Drift monitoring is only as good as your plan to respond—define clear thresholds for action.

Case Study

A global SaaS company saw unexpected churn despite new feature launches. By implementing detailed drift monitoring, they discovered their ML retention model was still “optimizing” for pre-pandemic behavior. The pandemic changed how and why users left. With new drift reports and targeted retraining, the company slashed churn by 30%—and used lessons learned to proactively monitor all future models.

Conclusion:

Drift monitoring is more than a technical tool; it’s a business necessity. The world changes. Your AI must too.

By investing in a robust drift monitoring process, you protect your business from silent failures, seize new opportunities, and ensure your machine learning delivers results for the long run.

Don’t wait for a crisis. Make drift monitoring your AI’s competitive edge with Symufolk.

FAQs: Advanced Drift Monitoring

1. How do I decide what “counts” as actionable drift?

The key is collaboration. Work closely with business leaders, compliance officers, and data engineers to determine which kinds of drift directly impact core business goals, customer experience, or regulatory requirements. Actionable drift usually means:

- A change that leads to noticeable performance drops in business KPIs (revenue, conversion, churn)

- Drift that creates compliance risks or violates fairness and transparency standards

- Shifts that signal emerging risks or opportunities in the market

Set thresholds for action based on both statistical significance and business value. Regularly review and adjust these thresholds as your business, model, and environment evolve.

2. Is drift always negative?

No, and this is a powerful insight. While many teams focus only on drift as a warning, smart organizations see drift as an opportunity for discovery. Sometimes drift means you’ve tapped into a new customer segment, identified a trend before your competitors, or found ways to better personalize services. The best teams use drift as both a defensive alert and a proactive tool to innovate and grow.

3. Can I use drift monitoring for unstructured data (text, images)?

Absolutely. Modern drift detection tools, such as Alibi Detect, let you monitor drift in high-dimensional vector spaces (such as embeddings from NLP or vision models).

For text data, track changes in token or embedding distributions; for images, monitor feature vectors or CNN outputs. This enables drift detection in deep learning models, chatbot logs, social media analysis, and more.
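A standard way to compare two sets of embeddings is Maximum Mean Discrepancy (MMD), which Alibi Detect also offers as an `MMDDrift` detector. The stdlib sketch below computes only the biased MMD² estimate with an RBF kernel on small lists of vectors. The `gamma` bandwidth of 1.0 is an assumption that should be tuned to the scale of your embeddings. A production detector would also run a permutation test to turn the score into a p-value.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) similarity between two vectors: 1 when equal, near 0 when far apart."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))

def mmd2(reference, current, gamma=1.0):
    """Biased estimate of squared Maximum Mean Discrepancy between two sets
    of embedding vectors (0 when the sets are identical, larger when they differ)."""
    def mean_kernel(xs, ys):
        return sum(rbf_kernel(x, y, gamma) for x in xs for y in ys) / (len(xs) * len(ys))

    return (mean_kernel(reference, reference) + mean_kernel(current, current)
            - 2 * mean_kernel(reference, current))
```

The pairwise loop is quadratic in the number of vectors, so in practice you would subsample each window (or use a vectorized library) before scoring.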

4. What’s the cost of ignoring drift?

Ignoring drift can have cascading effects:

- Financial loss: Declining model performance quietly erodes profits or increases operational costs.

- Compliance and legal risk: Unmonitored drift may result in regulatory breaches, especially in finance or healthcare.

- Reputation damage: Users and stakeholders lose trust in AI solutions that make repeated mistakes.

- Technical debt: The longer issues persist, the more complex and expensive they become to resolve—sometimes requiring full model rebuilds.

Proactive drift monitoring is almost always less expensive than fixing the fallout from unmonitored change.

5. How do I communicate drift findings to business stakeholders?

Keep it clear and business-focused:

- Use simple visualizations—trend lines, dashboards, before/after comparisons

- Relate drift to business outcomes: “This drift could lead to X% lower sales or Y% increase in customer support cases.”

- Summarize with action items: “We recommend retraining the model next week to restore performance.”

- Share positive drift too: “This new segment presents an opportunity for tailored marketing.”

Effective communication ensures drift monitoring drives real action, not just data noise.

6. What steps should I take when significant drift is detected?

- Validate the finding: Rule out data errors or false positives.

- Assess business impact: How critical is the change? Does it impact core KPIs or compliance?

- Notify stakeholders: Alert relevant teams—tech, business, compliance.

- Plan response: Decide whether to retrain the model, adjust data pipelines, or further investigate.

- Document and review: Record the drift event, action taken, and business results for continuous improvement.

7. How frequently should I update my drift monitoring strategy?

As often as your data or business changes! Schedule quarterly reviews at a minimum, and after any major system or product update. Periodically reassess thresholds, metrics, and workflows to keep pace with your evolving environment.

8. Can drift monitoring support A/B testing or model comparisons?

Yes! Drift monitoring tools are valuable in A/B tests and shadow deployments. Compare distributions and performance between model variants to choose the most robust option for your business.