Your machine learning model might be working perfectly—until it isn’t.

You trained it on clean data, validated the metrics, and deployed it confidently. But suddenly, the predictions are off. Conversions drop. Behaviors shift. And the model doesn’t perform as expected anymore.

This silent performance decline is often not a bug. It’s concept drift—one of the most critical and overlooked issues in machine learning.

In this blog, you’ll learn what concept drift is, how it’s different from data drift and model drift, and what you can do to detect and handle it in real time.

What Is Concept Drift?

Concept drift (also called conceptual drift) happens when the relationship between input data and the target variable changes over time. In simpler terms, the rules your machine learning model learned during training are no longer valid.

As a result, even when the model receives the same kind of input data it saw during training, its predictions become less accurate over time.
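In probability terms, this is often stated as follows (a standard formalization, not specific to any tool). Write the joint distribution of the inputs $X$ and the target $Y$ at time $t$ as a product of two factors. Concept drift between two points in time $t_0$ and $t_1$ is a change in the conditional factor, while a change in $P_t(X)$ alone is what is usually called data drift:

```latex
\begin{aligned}
&P_t(X, Y) = P_t(X)\,P_t(Y \mid X) \\
&\text{concept drift: } P_{t_0}(Y \mid X) \neq P_{t_1}(Y \mid X) \\
&\text{data drift: } P_{t_0}(X) \neq P_{t_1}(X)
\end{aligned}
```

The two can happen at the same time, which is one reason they are easy to confuse.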

Why Does It Matter?

Because if the concept changes but the model doesn’t adapt, predictions become unreliable, leading to poor business outcomes. For instance:

- A model trained to predict credit risk may become outdated due to shifts in economic behavior.

- In healthcare, treatment recommendations can become irrelevant if disease patterns evolve.

Ignoring concept drift means letting your model fail silently while everything appears to be working on the technical side.

Concept Drift vs Data Drift vs Model Drift

These three terms (data drift, model drift, and concept drift) are often confused, yet each describes a distinct aspect of model degradation and calls for its own strategy.

| Term | Definition | Example |
| --- | --- | --- |
| Concept Drift | The relationship between inputs (X) and output (Y) changes. | Buying patterns change during a recession. |
| Data Drift | The statistical distribution of input data changes. | Input data shifts from desktop users to mobile users. |
| Model Drift | The performance of the model degrades over time. | Decrease in prediction accuracy over months. |

Why This Distinction Matters

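Understanding whether you're facing data drift or concept drift helps you choose the right fix. Model drift is the symptom; data drift and concept drift are common causes. Fixing model drift therefore means addressing the root cause: either updating the data the model learns from or adapting the model to a new concept.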
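To make the difference concrete, here is a small, self-contained Python simulation. It is an illustrative assumption, not taken from any library: a hypothetical model has learned the rule `x > 0.5`. Under data drift, the inputs move to a different range but the rule stays the same; under concept drift, the inputs look the same but the true rule becomes `x > 0.7`.

```python
import random

def true_label(x, threshold):
    # Ground-truth rule: the target is 1 when x exceeds the threshold.
    return 1 if x > threshold else 0

def model(x):
    # Hypothetical model that learned the training-time rule x > 0.5.
    return 1 if x > 0.5 else 0

def accuracy(xs, threshold):
    # Share of inputs where the model agrees with the current ground truth.
    return sum(model(x) == true_label(x, threshold) for x in xs) / len(xs)

rng = random.Random(0)
train_x = [rng.uniform(0.0, 1.0) for _ in range(10_000)]
# Data drift: inputs move toward larger values, but the rule is unchanged.
shifted_x = [rng.uniform(0.3, 1.0) for _ in range(10_000)]
# Concept drift: inputs look like the training data, but the rule now uses 0.7.
same_x = [rng.uniform(0.0, 1.0) for _ in range(10_000)]

acc_train = accuracy(train_x, 0.5)
acc_data_drift = accuracy(shifted_x, 0.5)
acc_concept_drift = accuracy(same_x, 0.7)
print(f"training: {acc_train:.3f}, data drift: {acc_data_drift:.3f}, "
      f"concept drift: {acc_concept_drift:.3f}")
```

In this toy setup the model learned the true rule exactly, so data drift alone leaves accuracy at 1.0, while concept drift drops it to roughly 0.8 (every input between 0.5 and 0.7 is now misclassified). Real models are only approximately right, so data drift can hurt them too, typically when inputs move into regions with little training data; that is why both kinds of drift are usually monitored.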

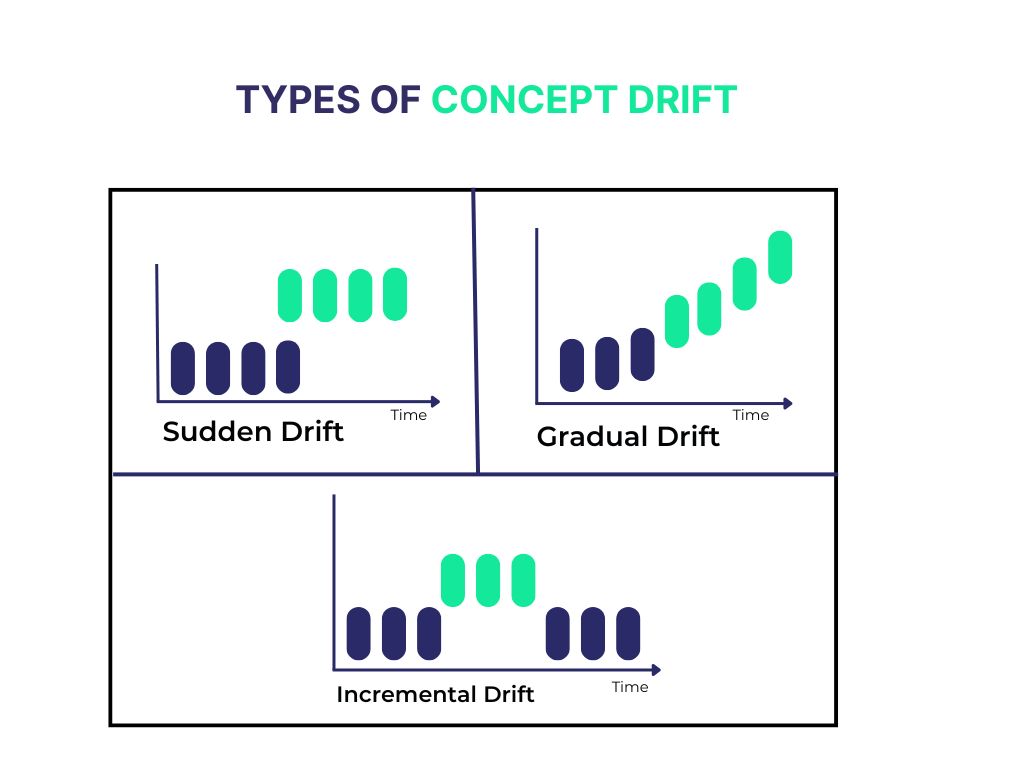

Types of Concept Drift

To effectively monitor and mitigate concept drift, you need to understand its forms. There are four major types:

1. Sudden Drift

This happens abruptly and drastically. One day the data behaves a certain way, and the next, it changes entirely.

- Example: A sudden regulation change leads to completely new consumer behavior.

2. Gradual Drift

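The new concept gradually replaces the old one. For a while, data from both concepts appears side by side, with the new one becoming more frequent, so the model may perform well at first and then decline gradually rather than all at once.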

- Example: Customer interests evolve over several months, like shifting from winter to summer products.

3. Incremental Drift

Small, almost unnoticeable changes accumulate until the overall concept becomes different.

- Example: Slowly changing language patterns in social media sentiment analysis.

4. Recurring or Seasonal Drift

Concepts disappear and return periodically.

- Example: E-commerce buying behavior during Black Friday each year.

These distinctions matter most when monitoring real-time data streams, where drift unfolds over time.
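The four patterns can be simulated by treating the "concept" as a decision threshold that changes with time `t`. This Python sketch is illustrative only: the thresholds (0.5 and 0.8), the change points, and the period are arbitrary assumptions.

```python
import random

OLD, NEW = 0.5, 0.8  # hypothetical thresholds: label is 1 when x exceeds the active one

def label(x, threshold):
    """Ground truth under whichever concept (threshold) is active."""
    return 1 if x > threshold else 0

def sudden(t, change_at=500):
    # The concept switches completely at one point in time.
    return OLD if t < change_at else NEW

def gradual(t, rng, start=400, end=600):
    # Both concepts coexist; the new one is drawn more and more often.
    if t < start:
        return OLD
    if t >= end:
        return NEW
    return NEW if rng.random() < (t - start) / (end - start) else OLD

def incremental(t, start=400, end=600):
    # The concept moves through many small intermediate steps.
    if t <= start:
        return OLD
    if t >= end:
        return NEW
    return OLD + (NEW - OLD) * (t - start) / (end - start)

def recurring(t, period=250):
    # The old and new concepts alternate, like seasons.
    return OLD if (t // period) % 2 == 0 else NEW

rng = random.Random(1)
x = 0.7  # one fixed input, labeled under each drift pattern at several time steps
for t in (0, 450, 500, 550, 999):
    print(t, label(x, sudden(t)), label(x, gradual(t, rng)),
          label(x, incremental(t)), label(x, recurring(t)))
```

The same input `x = 0.7` gets different labels depending on when it arrives, which is exactly what makes a model trained on the old concept go stale.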

Real-World Concept Drift Examples

Let’s break down how concept drift affects different industries:

E-commerce

A product recommendation system trained on last year’s customer data may suggest irrelevant products this year due to new trends, seasonal demand, or promotional changes.

Finance

Fraudsters constantly evolve their strategies. If a model doesn’t learn these new fraud tactics, it may allow fraudulent transactions to slip through.

Healthcare

Disease profiles and treatment effectiveness can shift. A model trained on pre-pandemic data may not perform well post-pandemic.

Climate Data

Climate prediction models need constant updates due to long-term changes and real-time anomalies in environmental data.

These examples highlight how important it is to detect and respond to concept drift in machine learning before it leads to flawed decisions.

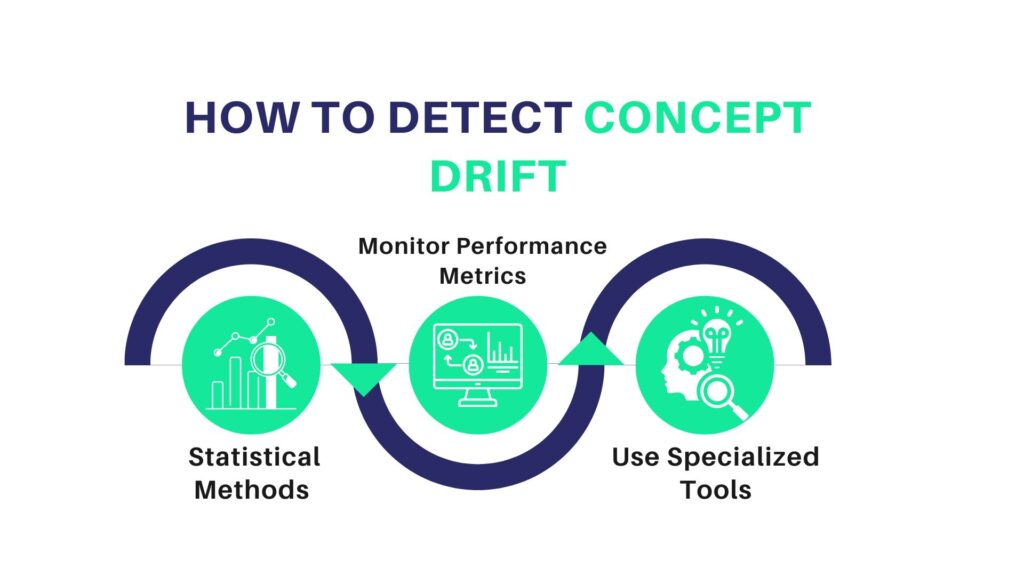

How to Detect Concept Drift

Detecting drift involves recognizing that the model is losing accuracy over time. Here are the key strategies:

1. Statistical Methods

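These techniques monitor a stream of values, typically the model's prediction errors, and raise an alarm when its statistics change:

- DDM (Drift Detection Method): Monitors the model's error rate and signals a warning, then a drift, when it rises significantly above the lowest level observed so far.

- EDDM (Early Drift Detection Method): Tracks the distance between consecutive errors instead of the error rate, which makes it better suited to gradual drift.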

- Page-Hinkley Test: Detects shifts in the average of a signal.

- ADWIN (Adaptive Windowing): Dynamically adjusts window size to detect change.
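DDM and the Page-Hinkley test are simple enough to sketch from scratch. The Python code below is a minimal, simplified version of DDM (warning at two standard deviations and drift at three above the lowest error level seen) and of the Page-Hinkley test, both applied to the same stream of 0/1 errors. The parameter values and the synthetic stream (10% errors, then 50% from step 1000) are illustrative assumptions; for production use, a maintained library implementation is the safer choice.

```python
import math

class DDM:
    """Simplified Drift Detection Method over a stream of 0/1 prediction errors."""

    def __init__(self, min_instances=30, warning_level=2.0, drift_level=3.0):
        self.min_instances = min_instances
        self.warning_level = warning_level
        self.drift_level = drift_level
        self.reset()

    def reset(self):
        self.n = 0
        self.p = 0.0               # running error rate
        self.p_min = math.inf      # error rate at the point where p + s was lowest
        self.s_min = math.inf      # standard deviation at that point

    def update(self, error):
        """error is 1 for a wrong prediction, 0 for a correct one."""
        self.n += 1
        self.p += (error - self.p) / self.n
        s = math.sqrt(self.p * (1 - self.p) / self.n)
        if self.n < self.min_instances:
            return "stable"
        if self.p + s < self.p_min + self.s_min:
            self.p_min, self.s_min = self.p, s
        if self.p + s > self.p_min + self.drift_level * self.s_min:
            self.reset()           # start measuring the new concept's error level
            return "drift"
        if self.p + s > self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"

class PageHinkley:
    """Page-Hinkley test for an increase in the mean of a signal."""

    def __init__(self, delta=0.005, threshold=10.0):
        self.delta = delta          # magnitude of change that is tolerated
        self.threshold = threshold  # alarm threshold (often called lambda)
        self.reset()

    def reset(self):
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0              # cumulative deviation from the running mean
        self.cum_min = 0.0

    def update(self, x):
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum += x - self.mean - self.delta
        self.cum_min = min(self.cum_min, self.cum)
        if self.cum - self.cum_min > self.threshold:
            self.reset()
            return True
        return False

# Synthetic error stream: every 10th prediction wrong, then every 2nd from step 1000.
errors = [1 if i % 10 == 0 else 0 for i in range(1000)]
errors += [1 if i % 2 == 0 else 0 for i in range(1000, 2000)]

ddm, ph = DDM(), PageHinkley()
ddm_drifts, ph_alarms = [], []
for i, e in enumerate(errors):
    if ddm.update(e) == "drift":
        ddm_drifts.append(i)
    if ph.update(e):
        ph_alarms.append(i)

print("DDM first drift:", ddm_drifts[0], "| Page-Hinkley first alarm:", ph_alarms[0])
```

With these settings, both detectors stay quiet during the stable phase and fire shortly after step 1000. Lowering Page-Hinkley's `threshold` or DDM's `drift_level` makes detection faster at the cost of more false alarms.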

2. Monitor Performance Metrics

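Tracking metrics such as accuracy, precision, recall, and F1 score over time reveals whether your model is underperforming. A significant, sustained drop suggests drift. Keep in mind that these metrics require ground-truth labels, which often arrive with a delay.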
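A basic version of this check only needs a sliding window of recent prediction outcomes. The following Python sketch assumes that a ground-truth label eventually arrives for each prediction and compares rolling accuracy with a baseline; the window size, the tolerance, and the simulated drop from 90% to 60% accuracy are illustrative assumptions.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Flags when accuracy over the last `window` labeled predictions falls too far below a baseline."""

    def __init__(self, baseline, window=200, tolerance=0.05):
        self.baseline = baseline    # e.g. accuracy measured on validation data at deployment
        self.tolerance = tolerance  # acceptable drop before alerting
        self.outcomes = deque(maxlen=window)

    def update(self, y_true, y_pred):
        """Record one labeled prediction; return the rolling accuracy if it breaches the limit, else None."""
        self.outcomes.append(1 if y_true == y_pred else 0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return None             # not enough labeled feedback yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy if accuracy < self.baseline - self.tolerance else None

# Simulated feedback: the model is right 90% of the time, then 60% from step 1000.
y_true = [1] * 2000
y_pred = [0 if i % 10 == 0 else 1 for i in range(1000)]
y_pred += [0 if i % 10 >= 6 else 1 for i in range(1000, 2000)]

monitor = RollingAccuracyMonitor(baseline=0.90)
alerts = []
for i, (yt, yp) in enumerate(zip(y_true, y_pred)):
    accuracy = monitor.update(yt, yp)
    if accuracy is not None:
        alerts.append((i, accuracy))

print("first alert at step", alerts[0][0], "with rolling accuracy", alerts[0][1])
```

The same pattern works for precision, recall, or F1; only the per-window metric computation changes.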

3. Use Specialized Tools

- Evidently AI: Offers open-source dashboards for tracking data drift and model performance.

- Alibi Detect: Includes outlier, adversarial, and drift detectors.

- River: A Python library for online machine learning on streaming data, with built-in drift detectors such as ADWIN and Page-Hinkley.

In streaming environments, continuous drift detection is crucial for maintaining prediction quality over time.

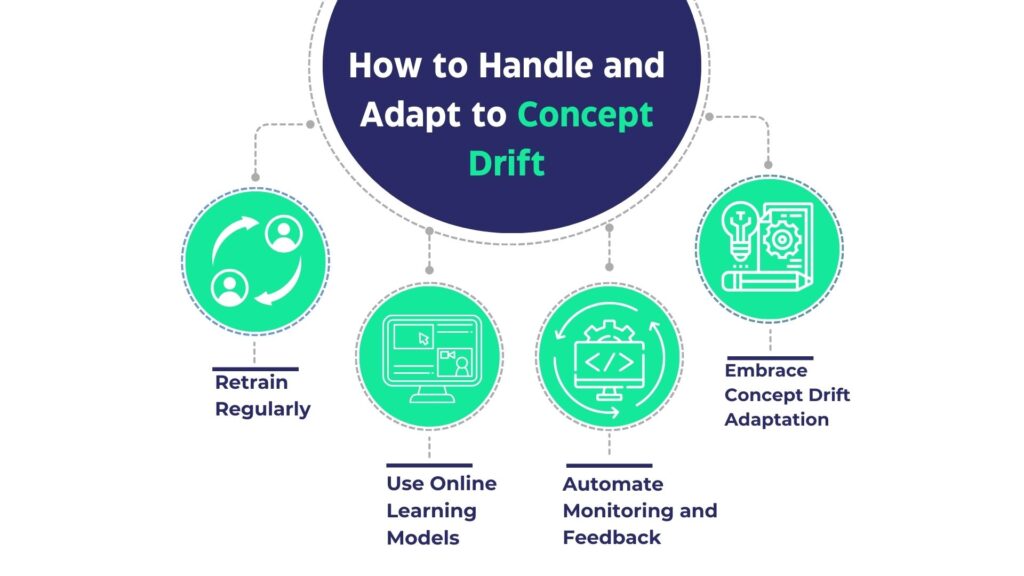

How to Handle and Adapt to Concept Drift

Once drift is detected, here are practical ways to address it:

1. Retrain Regularly

Schedule model retraining on the most recent data, using freshly labeled datasets that reflect current real-world behavior.
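Scheduled retraining on a sliding window of recent labeled data can be sketched as follows. The "model" here is a hypothetical one-feature threshold classifier, and the window size (300) and schedule (every 150 examples) are assumptions chosen to keep the Python example small.

```python
import random
from collections import deque

def fit_threshold(samples):
    """Hypothetical 'training': pick the cut-off that best separates the labels."""
    best_t, best_acc = 0.5, -1.0
    for t in sorted(x for x, _ in samples):
        acc = sum((x > t) == bool(y) for x, y in samples) / len(samples)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

WINDOW = 300          # keep only the most recent labeled examples
RETRAIN_EVERY = 150   # hypothetical schedule, standing in for e.g. a nightly job

rng = random.Random(0)
recent = deque(maxlen=WINDOW)
threshold = 0.5       # the model as originally trained
history = []          # (examples seen, threshold after retraining)

for t in range(1800):
    concept = 0.5 if t < 900 else 0.75    # the true rule changes at t = 900
    x = rng.random()
    recent.append((x, int(x > concept)))
    if (t + 1) % RETRAIN_EVERY == 0:
        threshold = fit_threshold(recent)
        history.append((t + 1, round(threshold, 3)))

print(history)
```

The window length is the main design choice: a short window adapts quickly but is noisier, while a long window is more stable but keeps old-concept data around longer after a change.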

2. Use Online Learning Models

Online learning models update continuously as new data arrives. This is especially useful for applications like stock trading or live recommendation engines.
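A minimal online learner is a logistic regression updated with one stochastic gradient step per example. In the Python sketch below, the single-feature model, the learning rate, and the label flip at step 2000 are illustrative assumptions. Each prediction is scored before the model learns from that example (test-then-train), so both the accuracy dip right after the flip and the recovery afterwards are visible.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

class OnlineLogisticRegression:
    """Single-feature logistic regression trained one example at a time with SGD."""

    def __init__(self, learning_rate=0.5):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x):
        return sigmoid(self.w * x + self.b)

    def update(self, x, y):
        # Log-loss gradient: (p - y) * x for the weight and (p - y) for the bias.
        error = self.predict_proba(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error

rng = random.Random(0)
model = OnlineLogisticRegression()
correct = []
for t in range(4000):
    x = rng.uniform(-1.0, 1.0)
    y = int(x > 0) if t < 2000 else int(x < 0)   # the concept flips at t = 2000
    # Evaluate before learning from this example (test-then-train).
    correct.append(int((model.predict_proba(x) > 0.5) == bool(y)))
    model.update(x, y)

acc_before = sum(correct[1500:2000]) / 500
acc_right_after_flip = sum(correct[2000:2030]) / 30
acc_after = sum(correct[3500:4000]) / 500
print(f"{acc_before:.3f} {acc_right_after_flip:.3f} {acc_after:.3f}")
```

Libraries such as River package this predict-then-learn pattern for many model types; the hand-written version only shows the mechanics.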

3. Automate Monitoring and Feedback

Set up automated pipelines to:

- Detect drift

- Trigger model retraining when drift is detected

- Retrain using current data and validate the new model before deploying it

Include a human-in-the-loop system for decision-critical models like medical diagnosis or legal classification.
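The pipeline steps above can be wired together as in this Python sketch. Everything in it is a hypothetical stand-in: `fit_threshold` plays the role of model training, drift is flagged when the error rate over the last `window` labeled predictions exceeds `max_error`, and for decision-critical models (`critical=True`) the retrained candidate is held for human review instead of being deployed automatically.

```python
import random
from collections import deque

def fit_threshold(samples):
    """Hypothetical 'training': pick the cut-off that best separates the labels."""
    best_t, best_acc = 0.5, -1.0
    for t in sorted(x for x, _ in samples):
        acc = sum((x > t) == bool(y) for x, y in samples) / len(samples)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

def run_pipeline(stream, threshold, window=300, max_error=0.10, critical=False):
    """Detect drift, collect fresh labels, retrain, then deploy or hold for review."""
    errors = deque(maxlen=window)   # 1 = wrong prediction, over the most recent labels
    fresh = None                    # labeled examples gathered after a drift alarm
    events = []
    for t, (x, y) in enumerate(stream):
        if fresh is not None:
            fresh.append((x, y))
            if len(fresh) == window:                  # enough current data to retrain
                candidate = fit_threshold(fresh)
                if critical:                          # a person must approve the new model
                    events.append((t, "pending human review", candidate))
                else:
                    threshold = candidate
                    events.append((t, "deployed", candidate))
                fresh = None
                errors.clear()
            continue
        errors.append(int((x > threshold) != bool(y)))
        if len(errors) == window and sum(errors) / window > max_error:
            events.append((t, "drift detected", threshold))
            fresh = []
    return threshold, events

rng = random.Random(0)
stream = []
for t in range(2000):
    x = rng.random()
    stream.append((x, int(x > (0.5 if t < 1000 else 0.8))))   # the true rule changes at t = 1000

final_threshold, events = run_pipeline(stream, threshold=0.5)
held_threshold, review_events = run_pipeline(stream, threshold=0.5, critical=True)
print(final_threshold, events)
print(held_threshold, review_events)
```

Retraining only on labels collected after the alarm keeps old-concept data out of the new model; the trade-off is that the model keeps serving stale predictions until enough fresh labels arrive.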

4. Embrace Concept Drift Adaptation

Adaptation techniques may include:

- Ensemble models that combine predictions from different time periods.

- Windowing techniques to weigh recent data more heavily.

- Active learning where the model queries for the most useful data points to learn from.
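The ensemble idea can be sketched as a weighted vote among models trained on different periods, where each member's weight is multiplied by a penalty factor `beta` every time it makes a mistake (the multiplicative update used in weighted-majority schemes). The threshold "models", `beta=0.5`, and the member limit are illustrative assumptions; this is not a complete adaptive ensemble, since it does not train new members on its own.

```python
import random

class WeightedMajorityEnsemble:
    """Models from different time periods vote; a member's weight shrinks each time it errs."""

    def __init__(self, beta=0.5, max_models=3):
        self.beta = beta              # multiplicative penalty applied on each mistake
        self.max_models = max_models
        self.members = []             # [model, weight] pairs

    def add_model(self, model):
        if len(self.members) == self.max_models:
            # Make room by dropping the member with the lowest weight.
            self.members.remove(min(self.members, key=lambda m: m[1]))
        self.members.append([model, 1.0])

    def predict(self, x):
        score = sum(w if model(x) == 1 else -w for model, w in self.members)
        return 1 if score > 0 else 0

    def update(self, x, y):
        for member in self.members:
            if member[0](x) != y:
                member[1] *= self.beta
        top = max(w for _, w in self.members)
        for member in self.members:
            member[1] /= top          # rescale so the best member has weight 1

def old_model_a(x):   # hypothetical model trained on an earlier period
    return int(x > 0.5)

def old_model_b(x):   # hypothetical model trained on a later, but still old, period
    return int(x > 0.55)

def recent_model(x):  # hypothetical model trained on the latest data
    return int(x > 0.8)

ensemble = WeightedMajorityEnsemble()
for m in (old_model_a, old_model_b, recent_model):
    ensemble.add_model(m)
before = ensemble.predict(0.7)   # the two old models outvote the recent one

rng = random.Random(0)
for _ in range(200):
    x = rng.random()
    ensemble.update(x, int(x > 0.8))   # labeled feedback now follows the new concept
after = ensemble.predict(0.7)
print(before, after)   # prints: 1 0
```

Because weights shrink multiplicatively, a member that keeps disagreeing with the current concept loses its influence within a few dozen mistakes, while a member that matches the new concept keeps full weight.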

These strategies help ensure that model drift doesn't silently erode business value.

Best Practices to Prevent Model Failure

Keeping AI model drift in check requires proactive strategies:

- Version control everything — data, models, and pipelines.

- Build monitoring dashboards using tools like Prometheus and Grafana.

- Set up alerts that fire when performance dips below acceptable thresholds.

- Engage multiple teams (data scientists, MLOps, domain experts) for holistic monitoring.

These preventive steps can save you from costly silent model failures.

Final Thoughts

Concept drift isn’t just a technical issue. It’s a signal that your machine learning model is no longer in sync with the world it was built to predict.

Ignoring it can lead to missed opportunities, flawed business decisions, and eroded trust in AI systems.

Whether you’re in fintech, retail, healthcare, or climate research, understanding and adapting to concept drift in ML is essential for staying competitive.

Need help detecting or handling concept drift? Symufolk’s data and AI experts can help you build smarter, adaptive systems that evolve with your users. Contact us today to get expert assistance and optimize your machine learning models.

Frequently Asked Questions

What causes concept drift?

Concept drift can be caused by changes in user behavior, economic shifts, new technology, or seasonal trends.

What is the difference between data drift and concept drift?

Data drift relates to input data changes. Concept drift relates to changes in how inputs relate to outputs.

How do I detect concept drift in machine learning?

Use statistical methods (like DDM or ADWIN), track performance metrics, or leverage open-source tools like Evidently AI and Alibi Detect.

Can AutoML tools handle concept drift?

Some AutoML tools offer basic drift handling, but custom monitoring and retraining strategies are often needed.

What are some concept drift adaptation techniques?

Online learning, adaptive windowing, retraining, and ensemble models are common ways to adapt to drift over time.